Accelerating LLMs with Hybrid Processing

80x faster, 70% more energy-efficient 1-bit LLMs

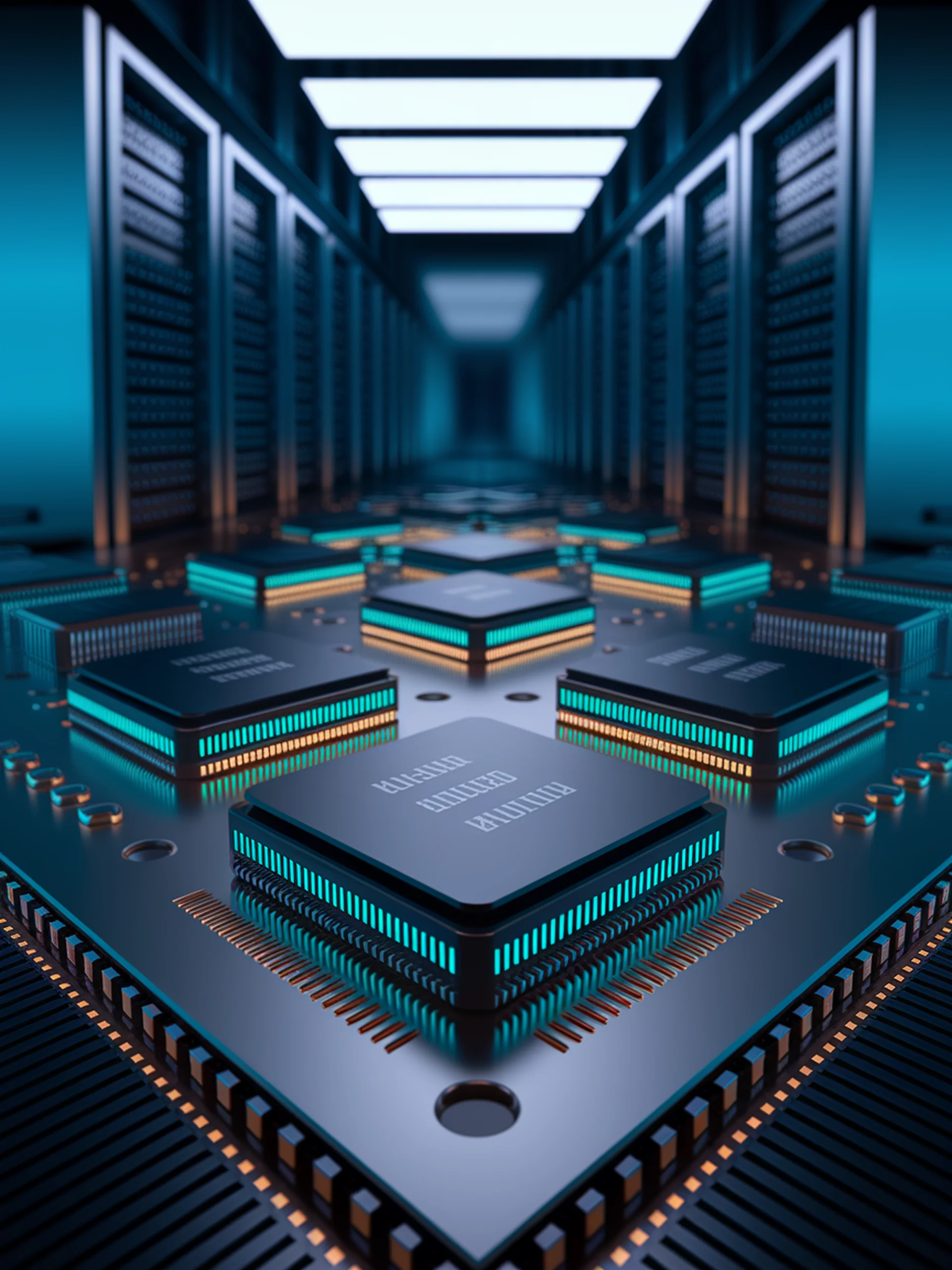

PIM-LLM is a hybrid architecture that combines analog processing-in-memory (PIM) with digital systolic arrays to accelerate 1-bit large language models (LLMs).

- Achieves a ~80x improvement in throughput (tokens per second)

- Delivers a 70% increase in energy efficiency (tokens per joule)

- Runs the low-precision matrix multiplications of the projection layers on analog PIM

- Runs the high-precision matrix multiplications of the attention heads on digital systolic arrays
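The division of labor in the list above can be sketched in NumPy. This is a minimal, hypothetical emulation, not PIM-LLM's implementation. The function names are illustrative. Weights are assumed to be binarized to {-1, +1} by sign, with no scaling factors or activation quantization. Both "engines" are plain NumPy. The sketch shows that with ±1 weights a projection reduces to adding and subtracting selected inputs, which is the kind of operation an analog crossbar can perform in place. Attention still needs full-precision multiplications and a softmax.

```python
import numpy as np

# Hypothetical emulation of the hybrid split; names and the binarization rule are assumptions.


def binarize(w: np.ndarray) -> np.ndarray:
    """Map real-valued weights to {-1, +1} by sign (assumed 1-bit scheme, no scaling)."""
    return np.where(w >= 0, 1, -1).astype(np.int8)


def pim_projection(x: np.ndarray, w_bin: np.ndarray) -> np.ndarray:
    """Low-precision path (stand-in for analog PIM).

    With +/-1 weights, each output sums the inputs whose weight is +1 and
    subtracts those whose weight is -1, so no true multiplications are needed.
    x: (seq, d_in), w_bin: (d_out, d_in) -> (seq, d_out)
    """
    plus = (w_bin == 1).astype(x.dtype)    # 0/1 mask selecting +1 weights
    minus = (w_bin == -1).astype(x.dtype)  # 0/1 mask selecting -1 weights
    return x @ plus.T - x @ minus.T


def digital_attention(q: np.ndarray, k: np.ndarray, v: np.ndarray) -> np.ndarray:
    """High-precision path (stand-in for digital systolic arrays): softmax attention."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)  # subtract row max for numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v


def hybrid_attention_block(x, wq, wk, wv):
    """Q/K/V projections on the low-precision path, attention on the high-precision path."""
    q = pim_projection(x, binarize(wq))
    k = pim_projection(x, binarize(wk))
    v = pim_projection(x, binarize(wv))
    return digital_attention(q, k, v)


rng = np.random.default_rng(0)
x = rng.standard_normal((4, 8))  # 4 tokens, hidden size 8
wq, wk, wv = (rng.standard_normal((8, 8)) for _ in range(3))

out = hybrid_attention_block(x, wq, wk, wv)
print(out.shape)  # (4, 8)

# The add/subtract form matches an ordinary matmul with the +/-1 weights.
w_bin = binarize(wq)
print(np.allclose(pim_projection(x, w_bin), x @ w_bin.T))  # True
```

The 0/1 masks are used here instead of calling `x @ w_bin.T` directly. This keeps the accumulate-and-subtract structure visible. Both forms give the same result, as the final check confirms.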

These gains make the architecture a strong candidate for deploying LLMs in resource-constrained environments such as edge and mobile devices, where it enables faster inference at lower energy per token.
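The two headline ratios can be related with a little arithmetic. Tokens per joule equals tokens per second divided by watts. A 1.7x gain in tokens per joule therefore means about 41% less energy per token. This sketch also assumes that both ratios are measured on the same workload against the same baseline. Under that assumption, dividing them gives the implied change in power draw. The result shows why the benefit is best stated as energy per token rather than as lower power.

```python
# Relative figures quoted in the summary. Assumption: both are ratios against
# the same (unnamed) baseline on the same workload.
speedup_tokens_per_s = 80.0   # "~80x improvement in tokens per second"
gain_tokens_per_joule = 1.70  # "70% increase in tokens per joule"

# tokens/J = (tokens/s) / W, so energy per token = 1 / (tokens/J)
# and W = (tokens/s) / (tokens/J).
energy_per_token_ratio = 1.0 / gain_tokens_per_joule
implied_power_ratio = speedup_tokens_per_s / gain_tokens_per_joule

print(f"energy per token: {energy_per_token_ratio:.2f}x baseline")  # energy per token: 0.59x baseline
print(f"implied power draw: {implied_power_ratio:.1f}x baseline")   # implied power draw: 47.1x baseline
```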

PIM-LLM: A High-Throughput Hybrid PIM Architecture for 1-bit LLMs