Solving Catastrophic Forgetting in LLMs

A novel architecture for preserving knowledge during model evolution

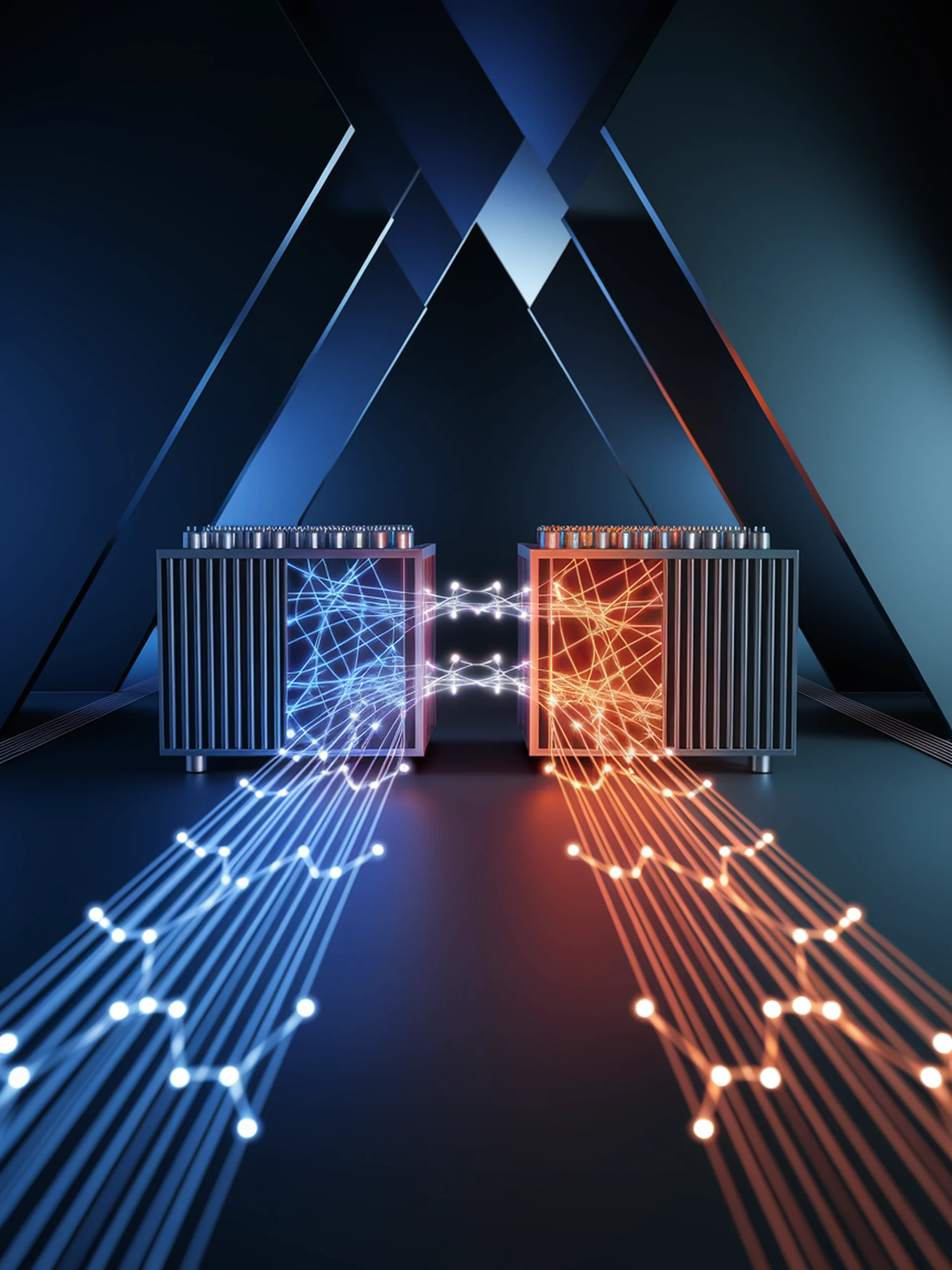

Control LLM introduces a parallel architecture that enables large language models to retain previous knowledge while learning new capabilities.

- Runs pre-trained and expanded transformer blocks in parallel, aligning their hidden states through interpolation strategies

- Demonstrates significant improvements in mathematical reasoning tasks

- Mitigates catastrophic forgetting during both continuous pre-training and continuous fine-tuning

- Offers a more efficient approach than complete model retraining
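The parallel-branch idea in the bullets above can be illustrated with a toy sketch. This is not the paper's implementation, and several simplifications are assumptions. Each "transformer block" is replaced by a hypothetical residual linear map (`toy_block`). The expanded branch is assumed to start as a copy of the pre-trained weights. The two hidden states are combined by plain linear interpolation with a fixed weight `alpha`, although the paper describes several interpolation strategies. The names `ControlLayer`, `toy_block`, `alpha`, and `train_step` are illustrative only.

```python
from dataclasses import dataclass
from typing import List, Optional

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Multiply matrix w (rows x cols) by vector x."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def toy_block(w: Matrix, x: Vector) -> Vector:
    """Hypothetical stand-in for a transformer block: the residual update x + W x.
    Real blocks contain attention and an MLP; this keeps only the residual shape."""
    return [xi + yi for xi, yi in zip(x, matvec(w, x))]


@dataclass
class ControlLayer:
    """Toy parallel layer: a frozen pre-trained branch plus a trainable expanded branch."""
    pretrained_w: Matrix               # frozen: train_step never modifies it
    alpha: float = 0.5                 # interpolation weight (assumed fixed here)
    expanded_w: Optional[Matrix] = None

    def __post_init__(self) -> None:
        # Assumption: the expanded branch is initialized as a row-by-row copy of the
        # pre-trained weights, so the layer initially reproduces the original output.
        if self.expanded_w is None:
            self.expanded_w = [row[:] for row in self.pretrained_w]

    def forward(self, x: Vector) -> Vector:
        h_pre = toy_block(self.pretrained_w, x)   # original model's hidden state
        h_exp = toy_block(self.expanded_w, x)     # new-knowledge hidden state
        # Linear interpolation between the two hidden states.
        return [(1 - self.alpha) * a + self.alpha * b for a, b in zip(h_pre, h_exp)]

    def train_step(self, x: Vector, target: Vector, lr: float = 0.1) -> float:
        """One gradient step on 0.5 * ||y - target||^2, updating only the expanded
        branch. Returns the loss measured before the update."""
        y = self.forward(x)
        err = [yi - ti for yi, ti in zip(y, target)]
        for i, row in enumerate(self.expanded_w):
            for j in range(len(row)):
                # dL/dE_ij = err_i * alpha * x_j for y = (1 - alpha) h_pre + alpha (x + E x)
                row[j] -= lr * err[i] * self.alpha * x[j]
        return 0.5 * sum(e * e for e in err)


if __name__ == "__main__":
    layer = ControlLayer(pretrained_w=[[0.1, 0.0], [0.0, 0.2]], alpha=0.5)
    x = [1.0, 2.0]
    original = toy_block(layer.pretrained_w, x)
    # At initialization the interpolated output matches the original block.
    print("init:", layer.forward(x), "original:", original)
    for step in range(5):
        loss = layer.train_step(x, target=[0.5, 3.0])
        print(f"step {step}: loss={loss:.4f}")
    # With alpha = 0 the layer falls back to the untouched pre-trained branch.
    layer.alpha = 0.0
    print("recovered original:", layer.forward(x) == original)
```

Because gradient updates only touch `expanded_w`, the original weights stay intact however long training runs, and `alpha` controls how much the new branch contributes. Setting it to 0 recovers the pre-trained behaviour exactly. In this toy, that is the sense in which the parallel design retains previous knowledge while the expanded branch learns.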

This engineering innovation provides a practical path for incrementally evolving LLMs without the computational cost of full retraining, enabling more sustainable AI development practices.

Original Paper: Control LLM: Controlled Evolution for Intelligence Retention in LLM