Sigma: Boosting LLM Efficiency

Novel DiffQKV attention mechanism enhances performance while reducing computational costs

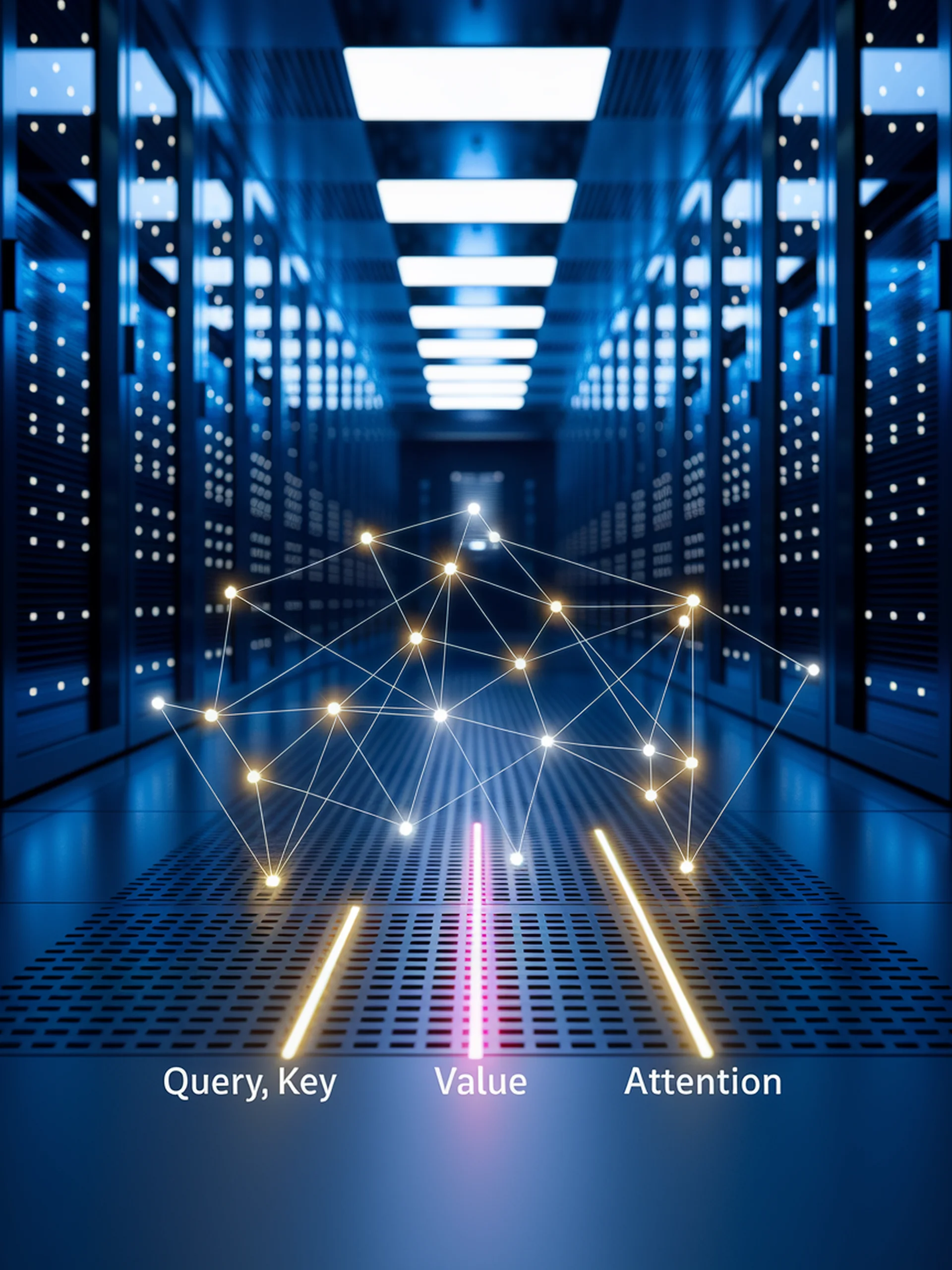

Sigma introduces a specialized architecture for the system domain that significantly improves inference efficiency through differential rescaling of attention components.

- DiffQKV attention optimizes Query, Key, and Value components based on their varying impacts

- Uses the model's representation capacity more efficiently

- Specifically designed for system domain applications

- Balances performance enhancements with computational efficiency
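To make the efficiency argument concrete, here is a minimal sketch of how treating Key and Value differently can shrink the KV cache that dominates inference memory. The `AttentionShape` class, the head counts, and the head dimensions are all hypothetical, and the choice to compress keys harder than values is an assumption made for illustration, not a configuration taken from Sigma.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AttentionShape:
    """Hypothetical per-layer attention configuration (illustrative values only)."""
    n_q_heads: int
    n_k_heads: int
    n_v_heads: int
    q_head_dim: int
    k_head_dim: int
    v_head_dim: int


def kv_cache_elems_per_token(shape: AttentionShape) -> int:
    """Elements stored per token per layer; only K and V are cached, Q is not."""
    return shape.n_k_heads * shape.k_head_dim + shape.n_v_heads * shape.v_head_dim


# Baseline: Q, K, and V all use the same number of heads and the same head size.
uniform = AttentionShape(32, 32, 32, 128, 128, 128)

# Assumed differential setup: K is compressed harder than V, Q is left unchanged.
differential = AttentionShape(32, 4, 8, 128, 64, 128)

# Fraction of KV-cache memory saved relative to the uniform baseline.
saving = 1 - kv_cache_elems_per_token(differential) / kv_cache_elems_per_token(uniform)
print(f"KV cache reduced by {saving:.1%}")
```

Because queries are never cached, their size does not appear in the cache formula, so the Query side can be sized independently of the cache budget while Key and Value are scaled according to how much each one affects quality.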

This matters because it addresses a central challenge in LLM deployment: maintaining high performance while reducing computational overhead, which is essential for practical applications where resources are limited.

Sigma: Differential Rescaling of Query, Key and Value for Efficient Language Models