Brain-Inspired Sparse Training for LLMs

Achieving Full Performance with Reduced Computational Resources

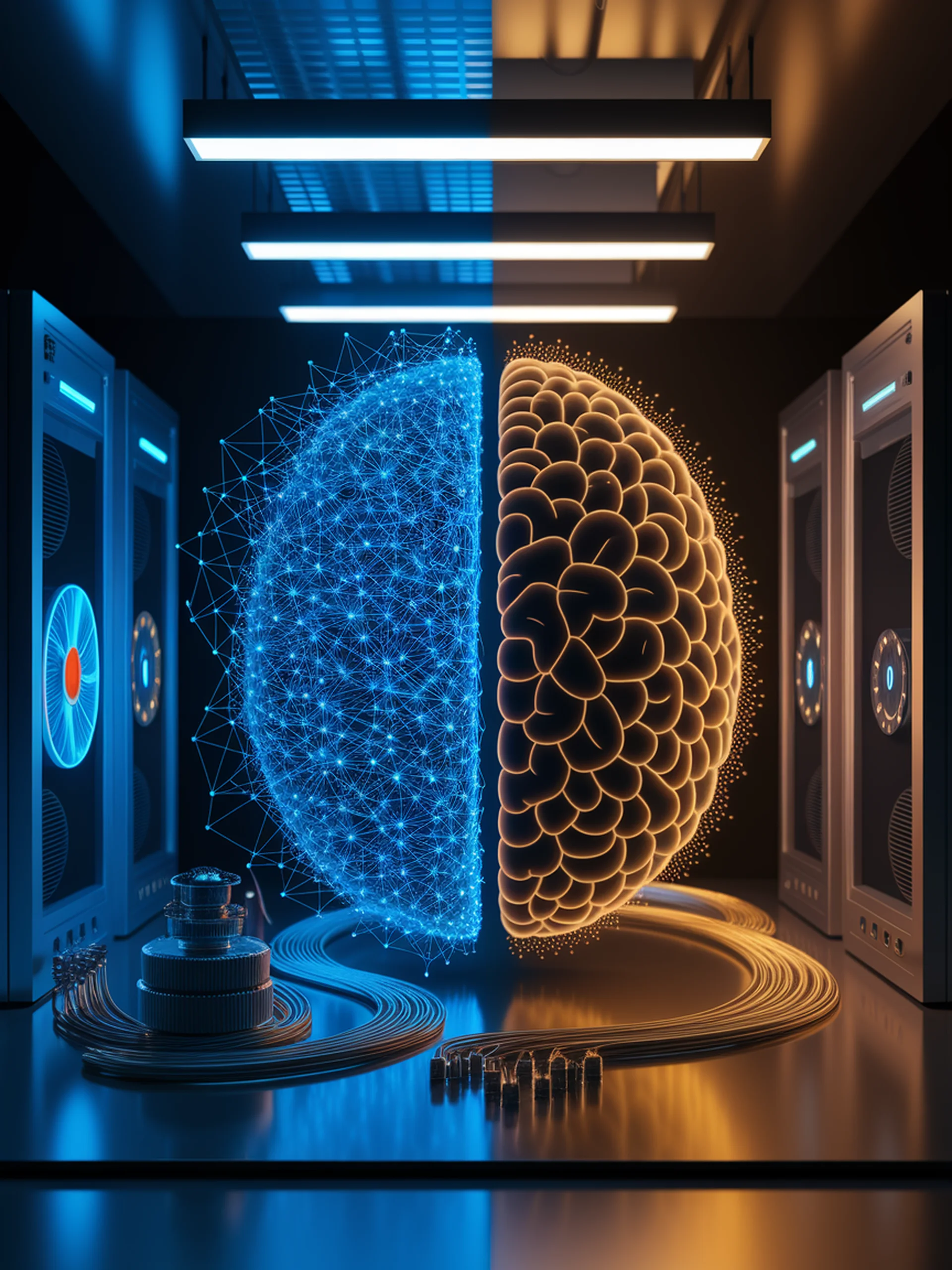

This research introduces Cannistraci-Hebb training (CHT), a brain-inspired sparse training approach that enables sparse Transformers and large language models (LLMs) to perform as well as their fully connected counterparts while using fewer computational resources.
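To make "fewer resources" concrete, the sketch below counts the weights in one dense linear layer and in the same layer with a fraction of its connections removed. The function name `layer_cost` and the 4096 x 4096 layer size are illustrative assumptions, not taken from the work. With unstructured sparsity, the stored weights and multiply-accumulates per input scale with the kept fraction. Actual wall-clock speedups also depend on hardware and kernel support for sparse operations.

```python
def layer_cost(n_in: int, n_out: int, sparsity: float) -> tuple[int, int]:
    """Return (dense, sparse) weight counts for an n_in x n_out linear layer.

    `sparsity` is the fraction of connections removed (0.9 means 90% sparse).
    Hypothetical helper for illustration only.
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    dense = n_in * n_out
    # Only the kept connections are stored and multiplied.
    sparse = round(dense * (1.0 - sparsity))
    return dense, sparse


dense, sparse = layer_cost(4096, 4096, 0.9)
print(dense, sparse)  # 16777216 1677722
```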

- CHT implements topology-driven link regrowth based on neurobiological principles

- Enables LLMs to match the performance of fully connected models at high sparsity levels

- Significantly reduces computational demands without sacrificing model quality

- Demonstrates how brain-inspired network science can advance AI efficiency
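The topology-driven regrowth in the list above can be illustrated with a minimal prune-and-regrow sketch for one sparse layer, treated as a bipartite graph between inputs and outputs. The sketch makes several assumptions. Removal by smallest weight magnitude is chosen here for illustration. The scoring is a plain count of length-3 paths (entry `[u][v]` of `M @ M.T @ M`), a simplified stand-in for the more elaborate Cannistraci-Hebb link predictor used in the work. New links start at zero weight. The names `l3_scores` and `prune_and_regrow` are hypothetical.

```python
import random

Matrix = list[list[float]]
Mask = list[list[int]]


def l3_scores(mask: Mask) -> list[list[int]]:
    """Count length-3 paths between each input u and output v of one layer.

    A path is u -> output a -> input b -> output v, so the score equals
    entry [u][v] of M @ M.T @ M for the 0/1 mask M (inputs x outputs).
    """
    n_in, n_out = len(mask), len(mask[0])
    # co[u][b]: number of outputs that inputs u and b both connect to (M @ M.T)
    co = [[sum(mask[u][a] * mask[b][a] for a in range(n_out))
           for b in range(n_in)] for u in range(n_in)]
    return [[sum(co[u][b] * mask[b][v] for b in range(n_in))
             for v in range(n_out)] for u in range(n_in)]


def prune_and_regrow(weights: Matrix, mask: Mask, frac: float,
                     rng: random.Random) -> int:
    """One prune-and-regrow step that keeps the number of active links fixed.

    Removes the `frac` smallest-magnitude active links, then regrows up to the
    same number of absent links with the highest L3 score. Returns links regrown.
    """
    n_in, n_out = len(mask), len(mask[0])
    active = [(abs(weights[i][j]), i, j)
              for i in range(n_in) for j in range(n_out) if mask[i][j]]
    k = int(len(active) * frac)
    active.sort()
    pruned = {(i, j) for _, i, j in active[:k]}
    for i, j in pruned:
        mask[i][j] = 0
        weights[i][j] = 0.0

    # Score absent links on the pruned topology; skip links removed this step.
    scores = l3_scores(mask)
    candidates = [(scores[i][j], i, j)
                  for i in range(n_in) for j in range(n_out)
                  if not mask[i][j] and (i, j) not in pruned]
    rng.shuffle(candidates)  # random tie-breaking (the sort below is stable)
    candidates.sort(key=lambda c: c[0], reverse=True)
    regrown = candidates[:k]
    for _, i, j in regrown:
        mask[i][j] = 1
        weights[i][j] = 0.0  # assumption: regrown links start at zero
    return len(regrown)


W = [[0.9, 0.05, 0.0, 0.0], [0.8, 0.7, 0.0, 0.0],
     [0.0, 0.6, 0.5, 0.0], [0.0, 0.0, 0.4, 0.3]]
M = [[1 if w else 0 for w in row] for row in W]
moved = prune_and_regrow(W, M, frac=0.25, rng=random.Random(0))
print(moved, sum(map(sum, M)))  # 2 8
```

Because regrowth is driven by existing paths rather than by random sampling, new links tend to close short loops in the current topology. Keeping the active-link count fixed holds the layer at the same sparsity throughout training.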

Biological Significance: By applying principles from neuroscience to the design of artificial neural networks, this work bridges biological and artificial neural systems, potentially leading to more efficient, nature-inspired AI design.