Accelerating LLM Inference with Smart MoE Parallelism

Boosting MoE efficiency through speculative token processing

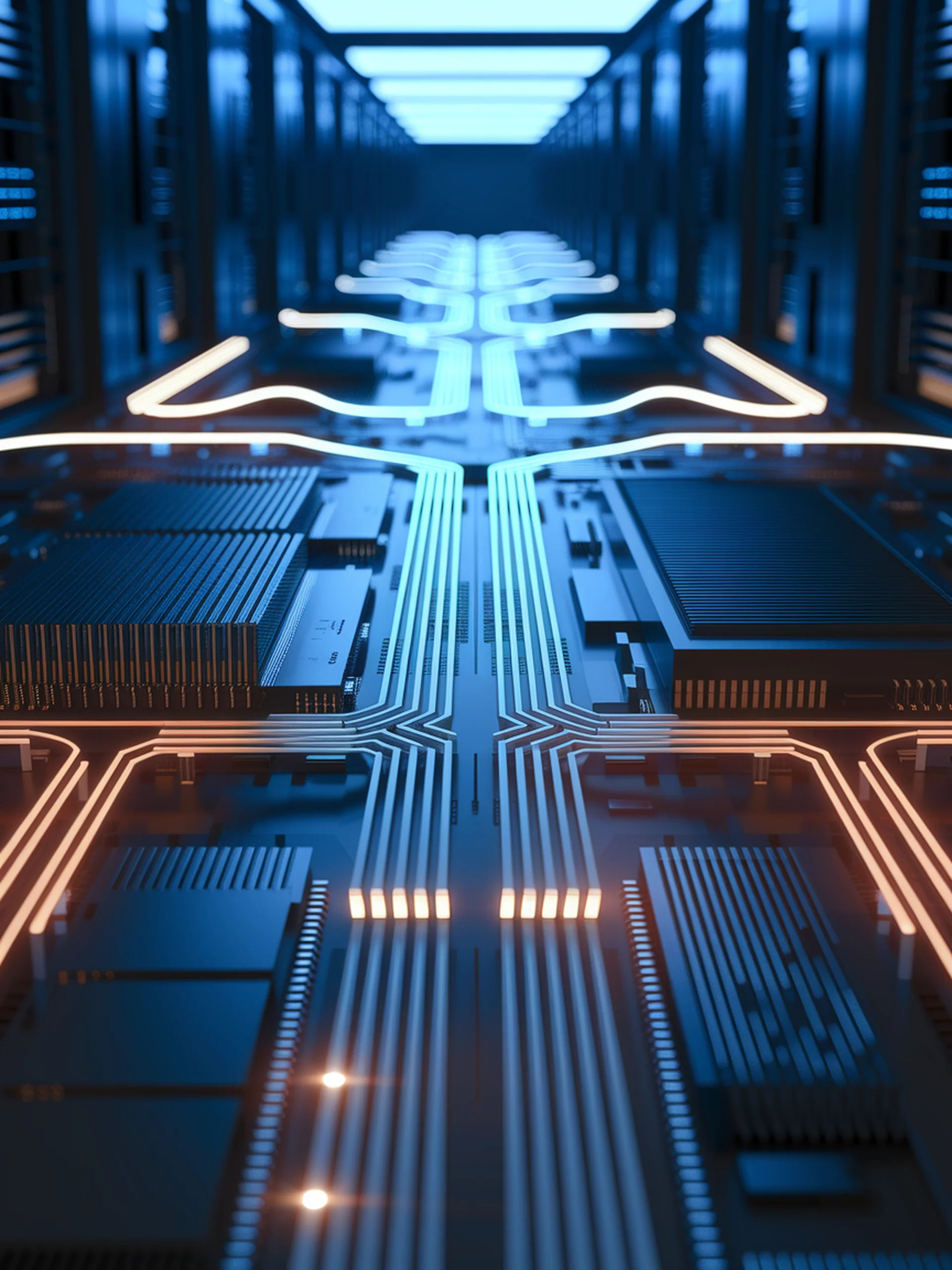

This research introduces Speculative MoE, an approach that speeds up parallel inference for Mixture of Experts (MoE) architectures in large language models.

- Uses speculative token shuffling to optimize communication patterns

- Implements expert pre-scheduling to reduce dependency bottlenecks

- Achieves up to 1.8x speedup compared to state-of-the-art MoE inference frameworks

- Maintains high throughput while meeting latency requirements
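
To make the communication idea concrete, here is a minimal toy sketch of why placing tokens according to a predicted expert assignment can cut cross-device traffic. It is not the paper's algorithm: the function names (`cross_device_sends`, `shuffle_by_prediction`), the expert-to-device map, and the token routing data are all hypothetical, and the sketch ignores load balancing and the cost of the shuffle itself.

```python
def cross_device_sends(token_device, token_expert, expert_device):
    """Count tokens whose routed expert lives on a different device."""
    return sum(
        1
        for dev, exp in zip(token_device, token_expert)
        if expert_device[exp] != dev
    )


def shuffle_by_prediction(predicted_expert, expert_device):
    """Place each token on the device that hosts its predicted expert."""
    return [expert_device[e] for e in predicted_expert]


# Hypothetical setup: 4 experts spread over 2 devices, 8 tokens.
expert_device = {0: 0, 1: 0, 2: 1, 3: 1}

# Experts the router actually selects (top-1 for simplicity).
actual_expert = [0, 2, 3, 1, 2, 0, 3, 1]

# Assumed speculative guess made ahead of routing; two tokens are
# mispredicted, but only the last one lands on the wrong device.
predicted_expert = [0, 2, 3, 1, 2, 1, 3, 2]

# Baseline: tokens split evenly across devices in sequence order.
naive_device = [0, 0, 0, 0, 1, 1, 1, 1]

naive = cross_device_sends(naive_device, actual_expert, expert_device)

shuffled_device = shuffle_by_prediction(predicted_expert, expert_device)
speculative = cross_device_sends(shuffled_device, actual_expert, expert_device)

print(f"cross-device sends, naive placement:       {naive}")
print(f"cross-device sends, speculative placement: {speculative}")
```

In this toy case the naive placement sends four tokens across devices, while the speculative placement sends only the one token whose prediction pointed at the wrong device. A real system would also have to keep per-device token counts balanced, which this sketch does not attempt.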

For engineering teams, this advancement enables more efficient deployment of massive MoE models, reducing computational resource needs while maintaining performance, which is critical for cost-effective LLM scaling in production environments.