Optimizing MoE Training on Mixed GPU Clusters

A novel approach for efficient LLM training on heterogeneous hardware

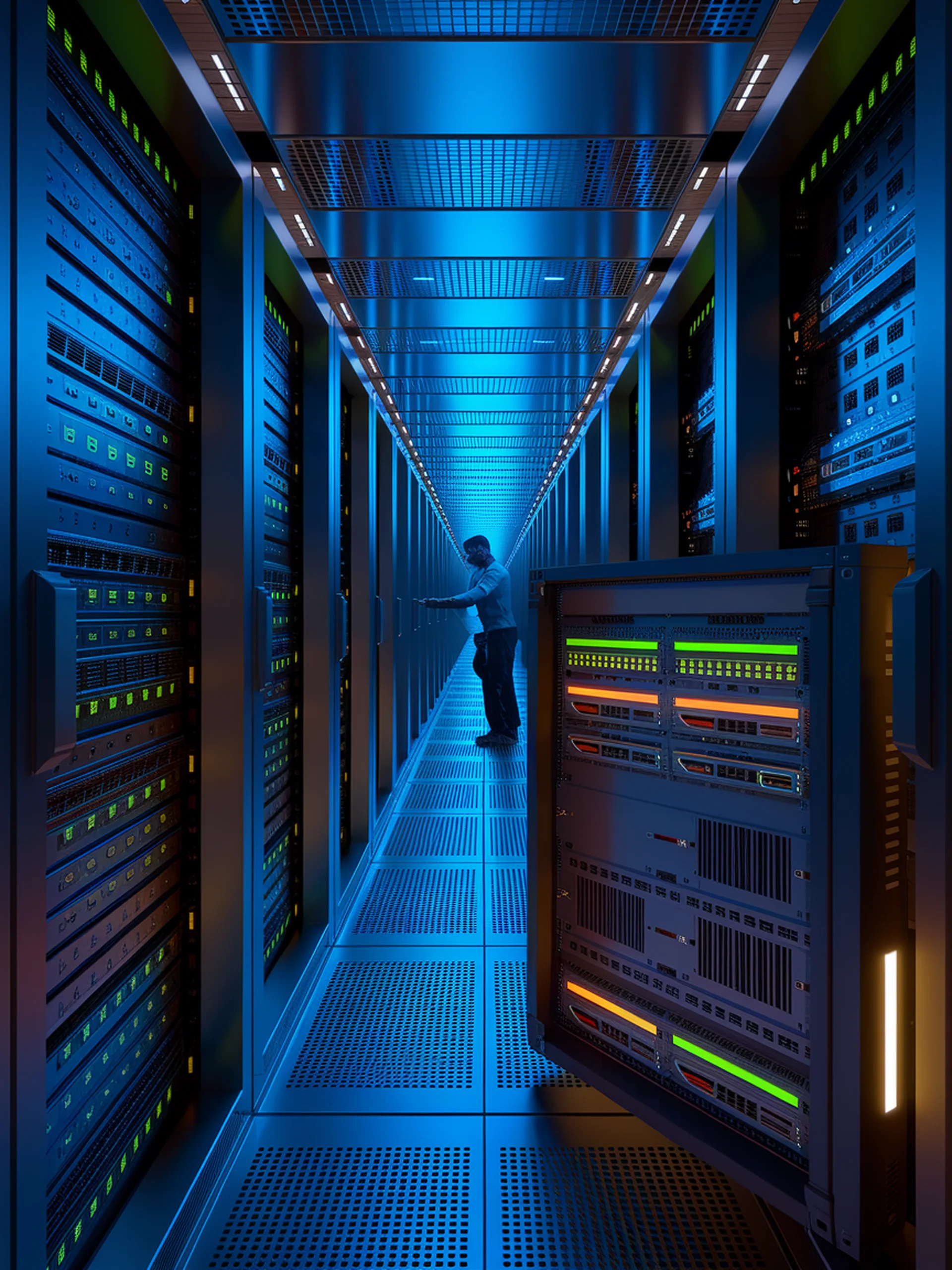

HeterMoE is a system for training Mixture-of-Experts (MoE) models across GPUs of different generations, with the goal of raising training efficiency and lowering cost.

- Distributes computation according to each GPU's capacity and the requirements of each model component

- Improves resource utilization by placing each model component on the hardware best suited to its compute characteristics

- Enables cost-effective scaling of large language models by leveraging both new and old GPU generations simultaneously

- Reports performance improvements over existing heterogeneity-aware training systems
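
To make the idea of capacity-aware distribution concrete, here is a minimal sketch of splitting a fixed amount of work across devices in proportion to their measured throughput. It is an illustration only, not HeterMoE's actual placement algorithm: the function name `proportional_split`, the device labels, and the throughput figures are all hypothetical, and a real system would also account for memory limits and communication cost.

```python
from typing import Dict


def proportional_split(total_units: int, throughput: Dict[str, float]) -> Dict[str, int]:
    """Split `total_units` of work across devices in proportion to throughput.

    Hypothetical helper for illustration; `throughput` maps a device label
    to any positive relative speed (for example, samples per second).
    """
    if total_units < 0:
        raise ValueError("total_units must be non-negative")
    if not throughput or any(t <= 0 for t in throughput.values()):
        raise ValueError("throughput must contain positive values")

    total = sum(throughput.values())
    # Ideal (fractional) share for each device.
    exact = {dev: total_units * t / total for dev, t in throughput.items()}
    # Round down first, then hand out the leftover units one at a time
    # to the devices with the largest fractional remainders.
    alloc = {dev: int(share) for dev, share in exact.items()}
    leftover = total_units - sum(alloc.values())
    by_remainder = sorted(exact, key=lambda dev: exact[dev] - alloc[dev], reverse=True)
    for dev in by_remainder[:leftover]:
        alloc[dev] += 1
    return alloc


# Assumed example: a newer GPU that is 3x faster than an older one.
print(proportional_split(64, {"new_gpu": 3.0, "old_gpu": 1.0}))
# {'new_gpu': 48, 'old_gpu': 16}
```

The faster device receives three quarters of the work, so both devices finish at roughly the same time instead of the newer GPU idling while the older one catches up.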

This research matters for engineering teams building large-scale AI infrastructure: it offers a practical way to get more out of existing hardware investments while scaling up model training.

HeterMoE: Efficient Training of Mixture-of-Experts Models on Heterogeneous GPUs