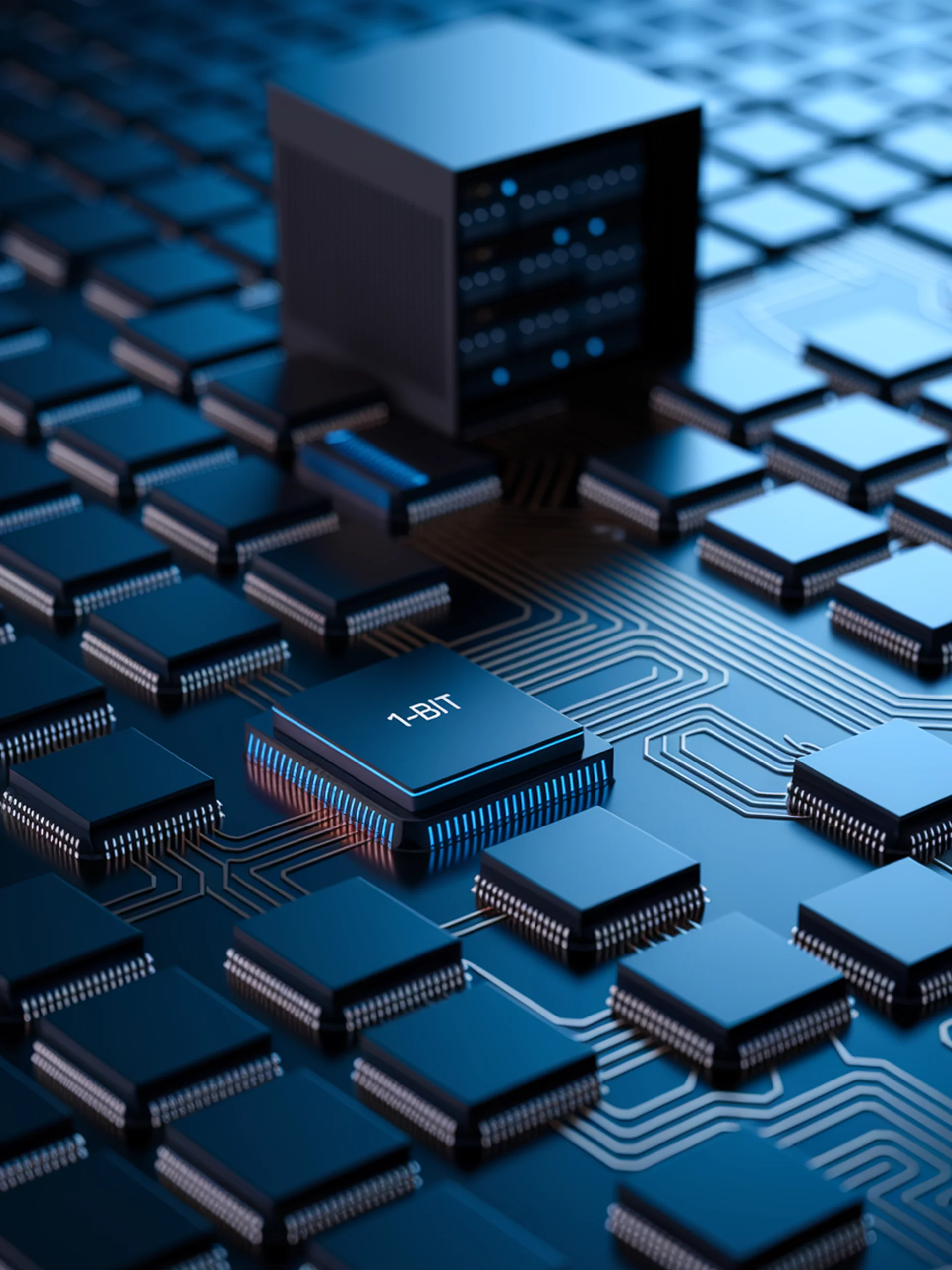

BitNet: The 1-Bit Revolution in LLMs

Achieving Full Precision Performance with Radical Memory Efficiency

BitNet b1.58 2B4T is the first open-source, native 1-bit Large Language Model (LLM) at the 2-billion-parameter scale. It performs comparably to full-precision models of similar size while sharply reducing memory requirements.

- Has 2 billion parameters and was trained on 4 trillion tokens

- Achieves performance comparable to leading full-precision LLMs of similar size

- Delivers significant gains in memory efficiency and inference speed

- Demonstrates effectiveness across language understanding, reasoning, coding, and conversation tasks

Because of its smaller memory footprint, the model can be deployed on resource-constrained devices, making capable language models more accessible and cheaper to run without a large loss in quality.
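To give a rough sense of scale for the memory claim, the sketch below compares weight storage at 16 bits per parameter with storage at about 1.58 bits per parameter. The "b1.58" in the model name refers to ternary weights (each weight is one of -1, 0, or +1), and log2(3) ≈ 1.58 bits is the information content of one such weight. The round 2e9 parameter count, the helper name `weight_memory_gb`, and the assumption that every parameter is ternary are illustrative simplifications; a real deployment also stores embeddings, activations, and a KV cache, and may pack weights less tightly than the theoretical minimum.

```python
import math

def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in gigabytes (1 GB = 10^9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9

# Assumption: round figure taken from "2 billion parameters".
NUM_PARAMS = 2e9
# Theoretical bits needed to encode one of three values (-1, 0, +1).
TERNARY_BITS = math.log2(3)

fp16_gb = weight_memory_gb(NUM_PARAMS, 16)
ternary_gb = weight_memory_gb(NUM_PARAMS, TERNARY_BITS)
ratio = fp16_gb / ternary_gb

print(f"16-bit weights:  {fp16_gb:.2f} GB")
print(f"Ternary weights: {ternary_gb:.2f} GB")
print(f"Reduction:       {ratio:.1f}x")
```

Under these assumptions the weights shrink from about 4 GB to about 0.4 GB, roughly a tenfold reduction. This is a back-of-the-envelope estimate of weight storage only, not a measured figure for the released model.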