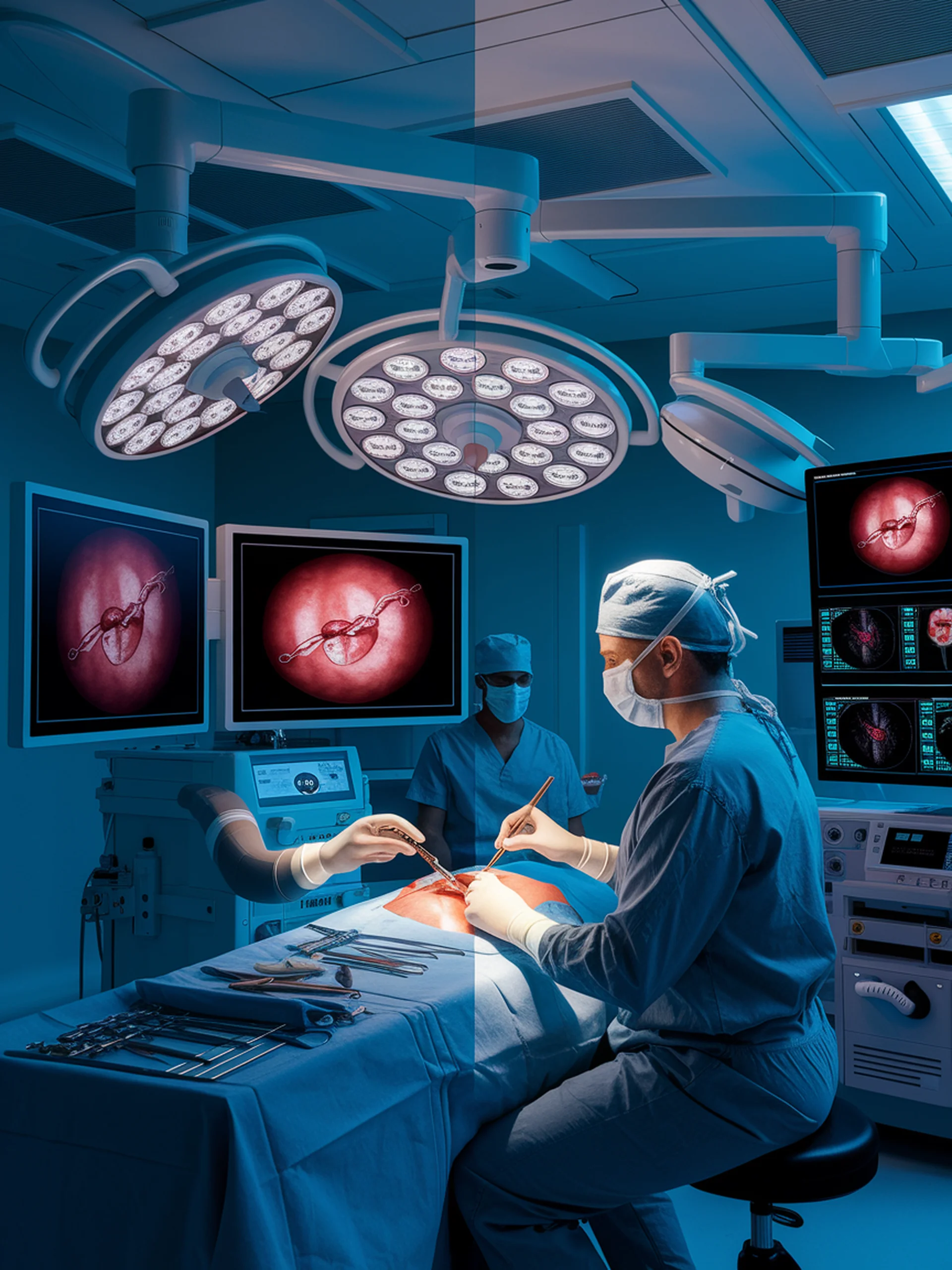

Benchmarking Vision-Language Models for Surgery

First comprehensive evaluation of VLMs across surgical AI tasks

This study systematically evaluates 11 state-of-the-art Vision-Language Models (VLMs) across multiple surgical domains, offering practical insights for deploying AI in medicine.

- Tested VLMs across laparoscopic, robotic, and open surgical procedures

- Measured performance on key tasks, including anatomy recognition and surgical skill assessment

- Identified significant variability in VLM performance across surgical contexts

- Established benchmarks to guide future clinical AI integration

Why it matters: Annotated data is scarce in surgical settings, so VLMs could accelerate AI adoption by working zero-shot across varied procedures. However, this research reveals performance gaps that must be addressed before clinical implementation.

Systematic Evaluation of Large Vision-Language Models for Surgical Artificial Intelligence