Decentralized Fine-Tuning for LLMs

Privacy-Preserving Adaptation of Large Language Models

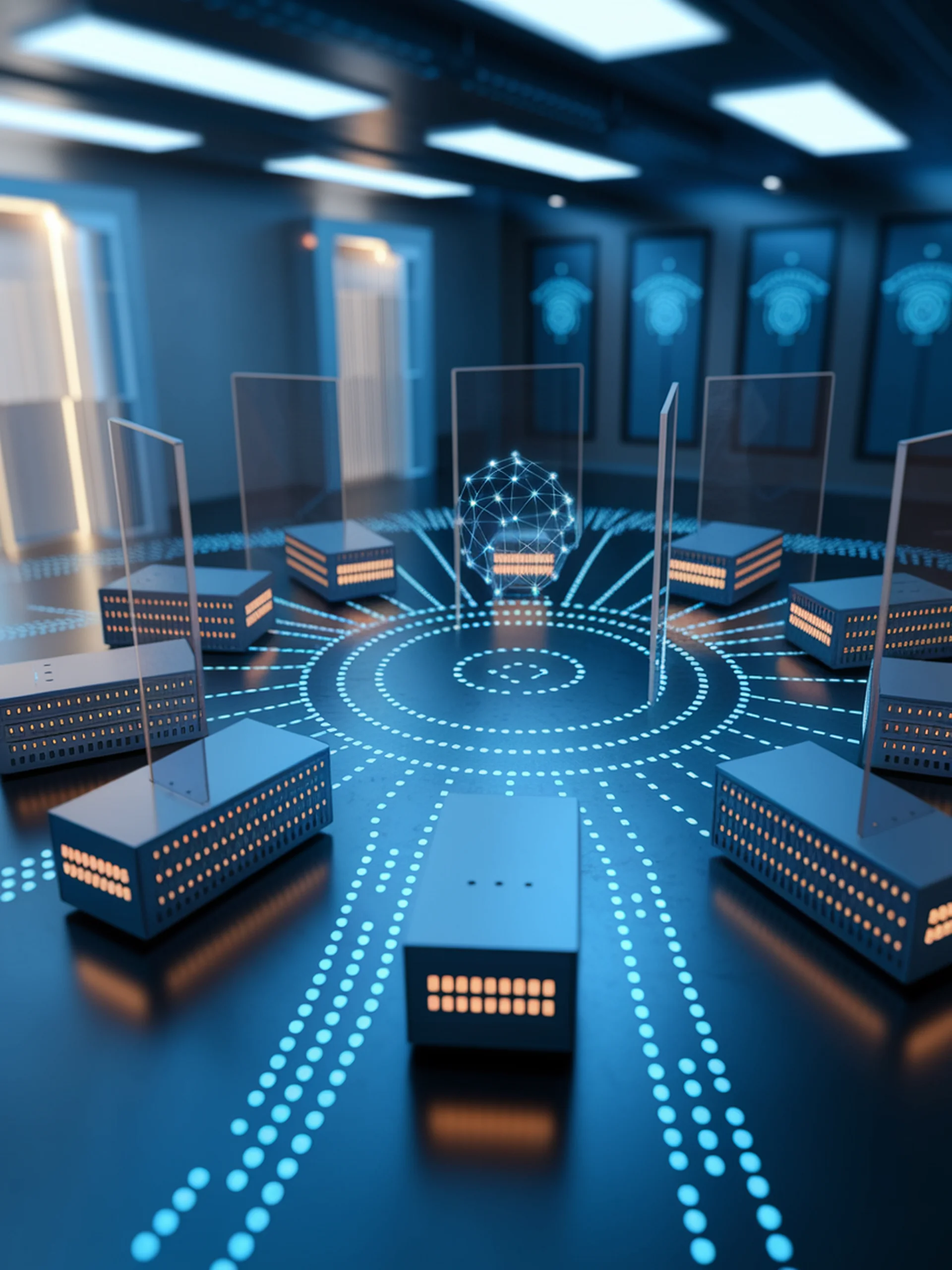

This research introduces a novel approach for fine-tuning large language models across distributed, privacy-sensitive datasets without centralizing data.

- Combines Low-Rank Adaptation (LoRA) with decentralized learning to enable privacy-preserving LLM customization

- Preserves data privacy while maintaining model performance comparable to centralized training

- Significantly reduces communication overhead between distributed nodes compared with traditional federated learning

- Enables organizations to customize LLMs using sensitive data that cannot leave local environments
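
To make the combination of LoRA and decentralized learning more concrete, here is a minimal sketch in plain Python. It is not the paper's algorithm. It assumes each node keeps its own pair of low-rank adapter factors `A` and `B` (so the weight update is `B @ A`), and that nodes exchange only those factors with their direct neighbors and average them. The function names, the line topology, and the 4096 x 4096 layer size are all hypothetical. Averaging `A` and `B` separately is also a simplification, since it is not the same as averaging the products `B @ A`.

```python
from typing import Dict, List

Matrix = List[List[float]]


def lora_param_count(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters of a LoRA update B @ A, with B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)


def average_matrices(mats: List[Matrix]) -> Matrix:
    """Element-wise mean of equally shaped matrices."""
    n = len(mats)
    rows, cols = len(mats[0]), len(mats[0][0])
    return [[sum(m[i][j] for m in mats) / n for j in range(cols)] for i in range(rows)]


def gossip_round(
    adapters: Dict[int, Dict[str, Matrix]],
    neighbors: Dict[int, List[int]],
) -> Dict[int, Dict[str, Matrix]]:
    """One synchronous round: every node averages its A and B with its neighbors' copies.

    Only adapter factors are exchanged; raw training data never leaves a node.
    """
    updated = {}
    for node, nbrs in neighbors.items():
        group = [node] + nbrs
        updated[node] = {
            name: average_matrices([adapters[k][name] for k in group])
            for name in ("A", "B")
        }
    return updated


if __name__ == "__main__":
    # Hypothetical layer: 4096 x 4096 weights, LoRA rank 8.
    full = 4096 * 4096
    lora = lora_param_count(4096, 4096, rank=8)
    print(f"full: {full}, LoRA: {lora}, ratio: {full // lora}x")

    # Three nodes on a line (0 - 1 - 2), each with toy rank-1 adapters.
    adapters = {
        0: {"A": [[0.0, 0.0]], "B": [[0.0], [0.0]]},
        1: {"A": [[3.0, 3.0]], "B": [[3.0], [3.0]]},
        2: {"A": [[6.0, 6.0]], "B": [[6.0], [6.0]]},
    }
    neighbors = {0: [1], 1: [0, 2], 2: [1]}
    print(gossip_round(adapters, neighbors))
```

In a full training loop, each node would interleave local gradient steps on its private data with rounds like this one. The parameter count illustrates why exchanging only the low-rank factors is cheaper than exchanging full weight matrices.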

The security implications are significant: this approach allows LLMs to be fine-tuned on sensitive data (such as medical records and financial information) while maintaining regulatory compliance and reducing the risk of data exposure.

Original Paper: Decentralized Low-Rank Fine-Tuning of Large Language Models