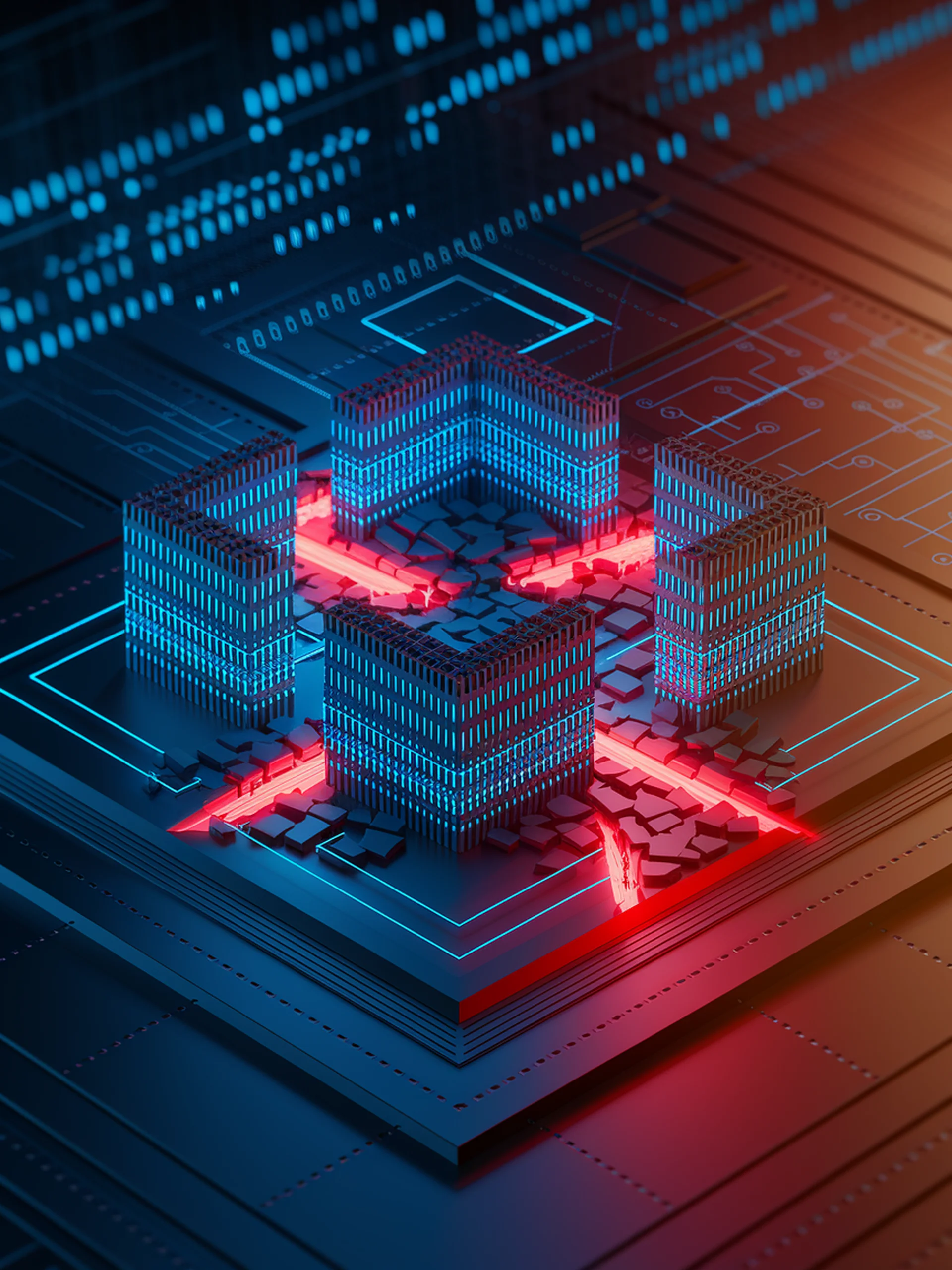

Vulnerabilities in LLM Security Guardrails

New techniques bypass leading prompt injection protections

Research reveals critical security flaws in current LLM protection systems designed to prevent prompt injection and jailbreak attacks.

- Successfully bypassed six major protection systems, including Microsoft's Azure Prompt Shield and Meta's Prompt Guard

- Demonstrated effective evasion through both traditional character injection methods and advanced Adversarial Machine Learning techniques

- Documented evasion success rates of up to 100% against certain guardrail systems, exposing significant vulnerabilities

- Highlighted the urgent need for more robust security mechanisms in commercial LLM deployments
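
To make the character injection idea above concrete, here is a minimal sketch of why invisible characters can slip past a text-matching detector, and how input normalization can help a defender. It is an illustration under stated assumptions, not the method used in the research: `naive_filter` is a hypothetical keyword check standing in for a real guardrail, the `INVISIBLE` set is a small non-exhaustive sample, and zero-width insertion is just one commonly cited form of character injection.

```python
import unicodedata

# Zero-width and invisible code points (illustrative, non-exhaustive set
# chosen for this sketch; not taken from the research).
INVISIBLE = {"\u200b", "\u200c", "\u200d", "\u2060", "\ufeff"}


def inject_zero_width(text: str) -> str:
    """Insert a zero-width space between every character (illustration only)."""
    return "\u200b".join(text)


def normalize(text: str) -> str:
    """Strip invisible characters, then apply NFKC compatibility normalization."""
    stripped = "".join(ch for ch in text if ch not in INVISIBLE)
    return unicodedata.normalize("NFKC", stripped)


def naive_filter(text: str) -> bool:
    """Hypothetical keyword-based detector standing in for a real guardrail."""
    return "ignore previous instructions" in text.lower()


payload = "Ignore previous instructions"
perturbed = inject_zero_width(payload)

print(naive_filter(payload))               # True: plain text is flagged
print(naive_filter(perturbed))             # False: invisible characters break the match
print(naive_filter(normalize(perturbed)))  # True: normalization restores the match
```

The perturbed string renders identically to a human reader but no longer matches at the character level. Normalizing input before classification is one plausible mitigation for this class of perturbation; the sketch does not claim it defeats the attacks evaluated in the research, and it does nothing against adversarial machine learning techniques.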

This research matters to security professionals because it shows how current safeguards can be circumvented, potentially allowing malicious actors to manipulate LLMs into producing harmful content or leaking sensitive information.

Bypassing Prompt Injection and Jailbreak Detection in LLM Guardrails