Hidden Threats in LLM Merging

How phishing models can compromise privacy in merged language models

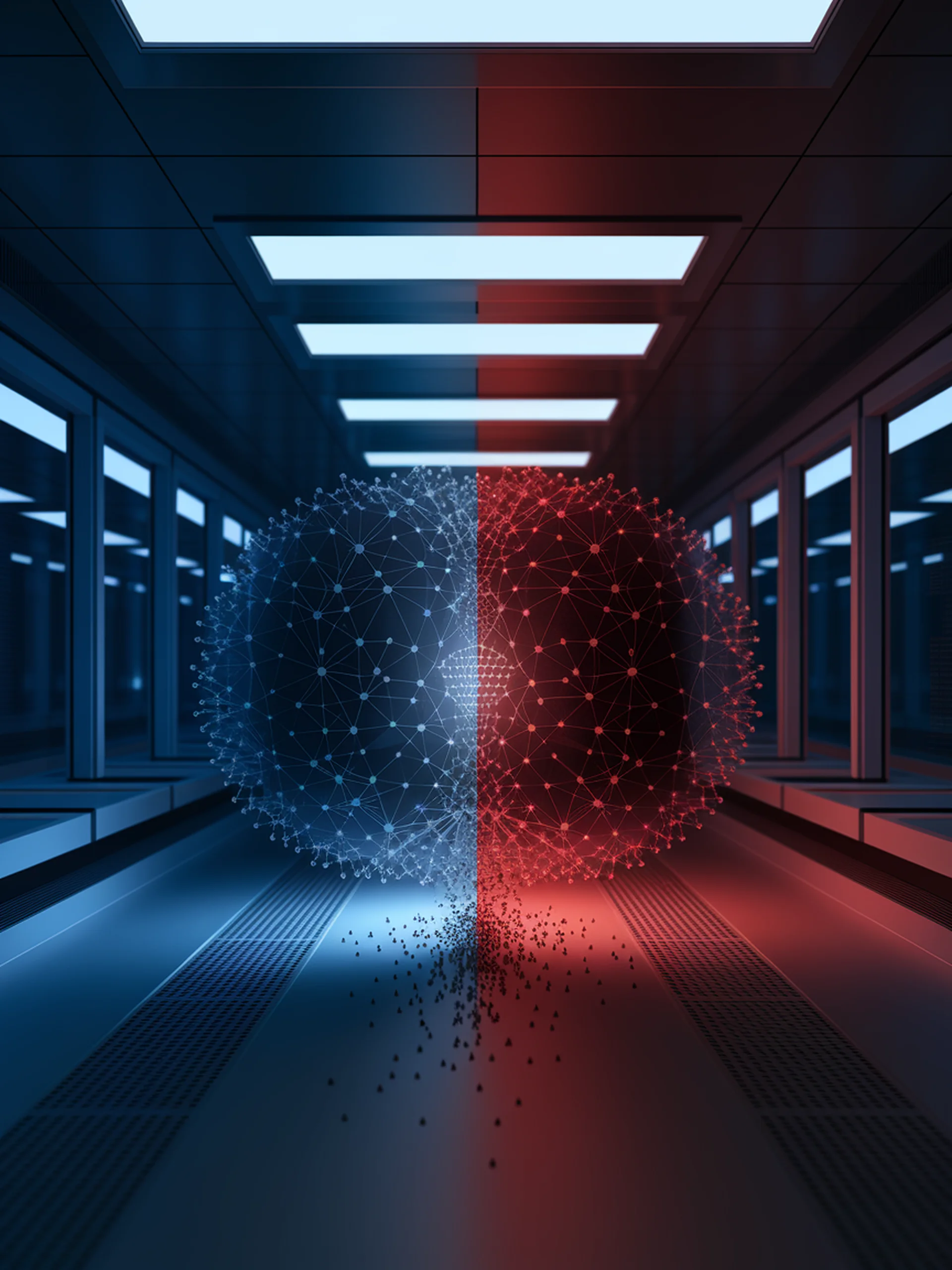

This research reveals a critical security vulnerability: a malicious LLM, when merged with other models, can be used to extract private information from them.

- Privacy Leakage Risk: Unsafe models can compromise the privacy of the other LLMs they are merged with

- Phishing Model Threat: Researchers demonstrated how attackers can create models specifically designed to steal private information

- Unaudited Models: Many specialized LLMs from open-source communities lack proper security vetting

- Security Implications: Highlights the need for rigorous security protocols before merging unfamiliar models

For security professionals, this work underscores the importance of establishing robust model auditing practices and developing defensive mechanisms to protect sensitive information in collaborative AI environments.

Be Cautious When Merging Unfamiliar LLMs: A Phishing Model Capable of Stealing Privacy