Backdoor Vulnerabilities in Multi-modal AI

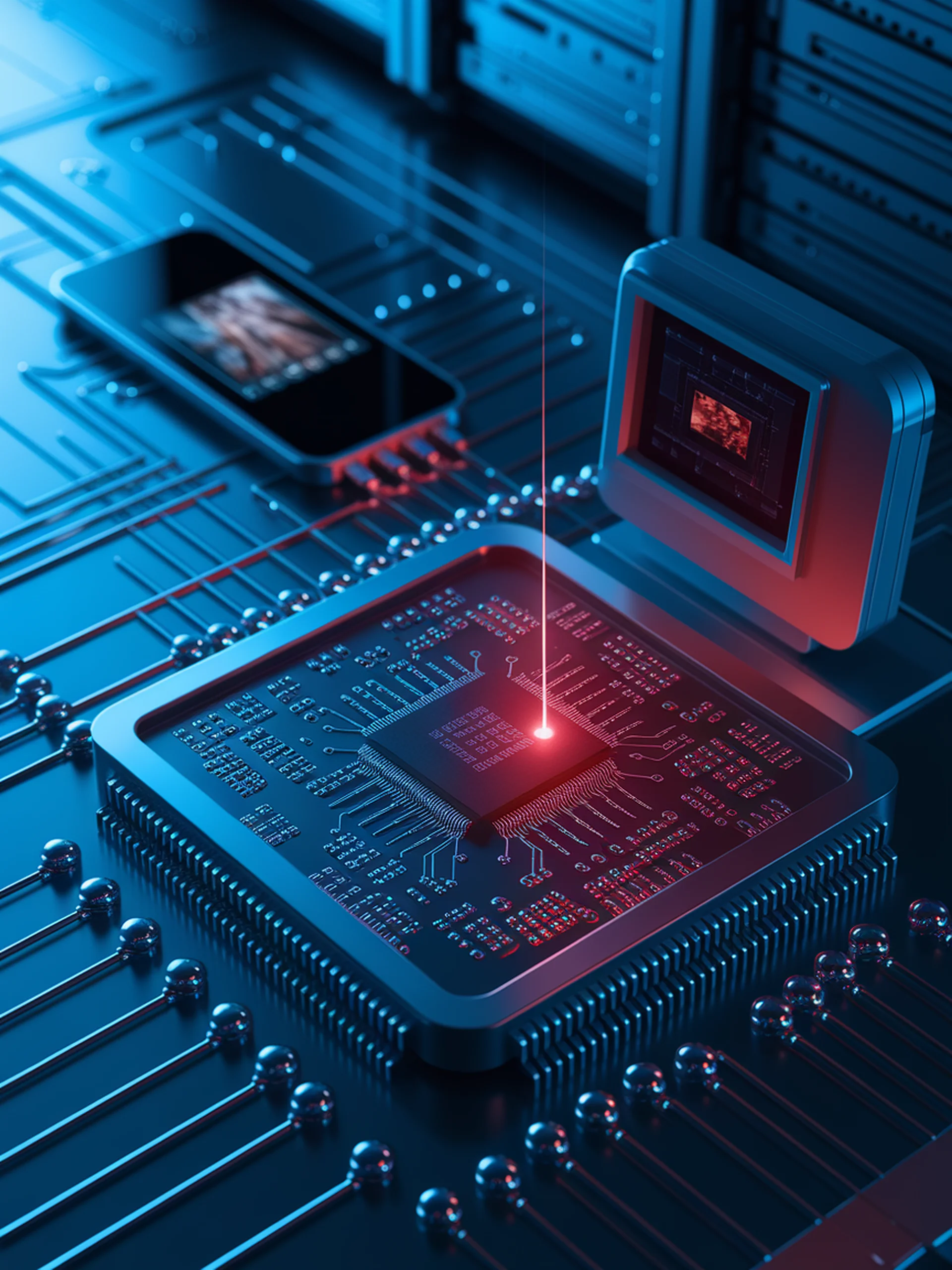

Exposing token-level security risks in image-text AI systems

The paper introduces BadToken, a new token-level backdoor attack that can compromise multi-modal large language models (MLLMs) as they process image-text inputs.

- Demonstrates how attackers can inject hidden triggers that activate malicious behaviors

- Highlights vulnerabilities in the plug-and-play deployment of MLLMs in critical applications

- Reports that BadToken is more effective than previous backdoor attack methods

- Raises important security concerns for autonomous driving, medical diagnosis, and other high-stakes applications

This research underscores the urgent need for robust security measures before deploying MLLMs in sensitive contexts where compromised models could lead to serious safety risks or misinformation.

BadToken: Token-level Backdoor Attacks to Multi-modal Large Language Models