Breaking Memory Limits for Edge-Based LLMs

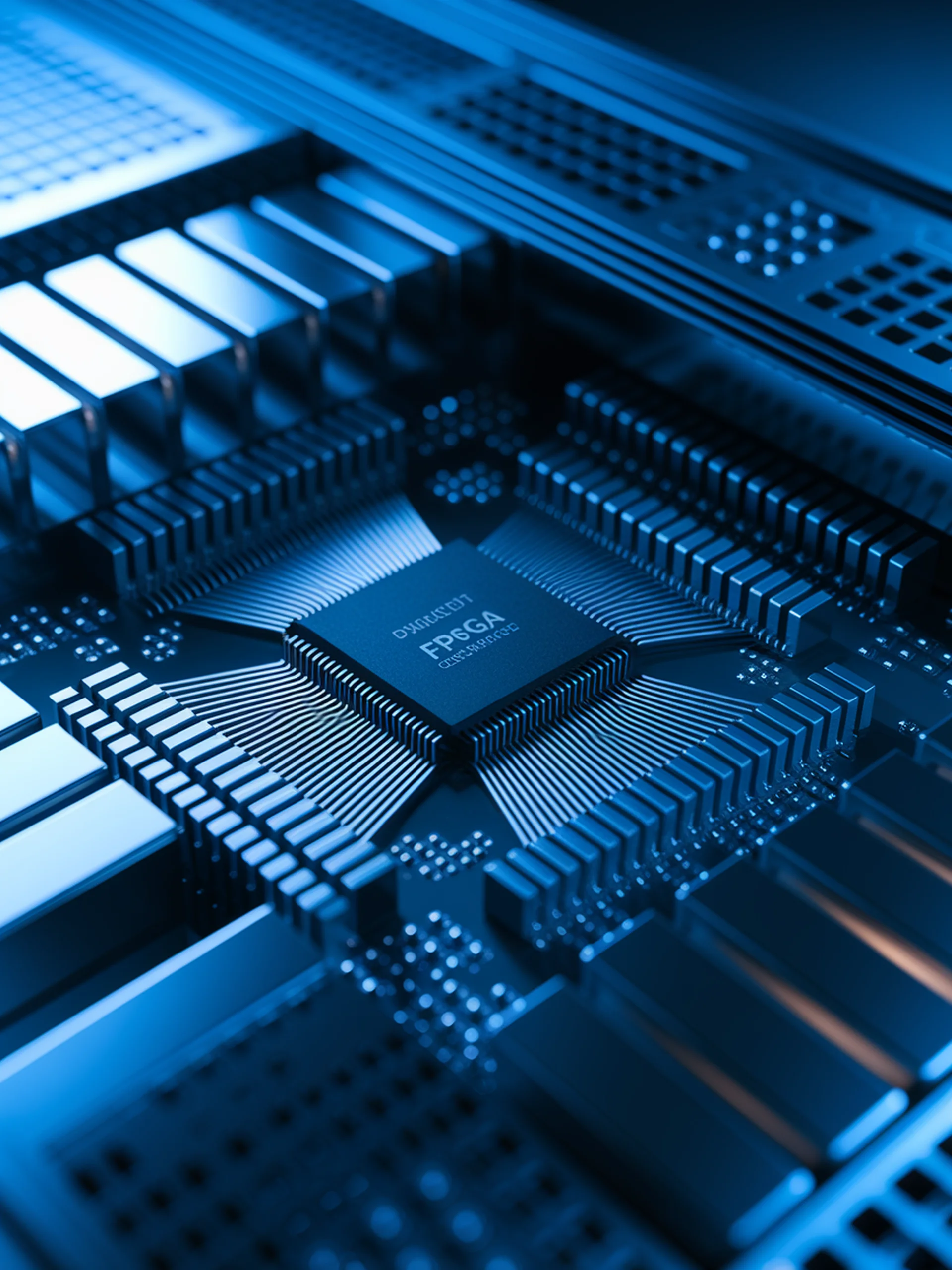

Maximizing FPGA potential for efficient LLM deployment at the edge

This research tackles the challenge of running large language models (LLMs) on memory-constrained edge devices through FPGA-specific optimizations.

- Maximizes memory efficiency by pushing bandwidth utilization to theoretical limits

- Enables 7B-parameter models to run on devices with just 4 GB of memory and less than 20 GB/s of memory bandwidth

- Achieves performance breakthroughs through novel memory access patterns and architectural design

- Demonstrates viable edge AI deployment without cloud dependencies
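
To put the 4 GB and 20 GB/s figures in perspective, here is a rough back-of-the-envelope sketch. It is not the method used in this work. It assumes that batch-1 decoding reads every weight once per generated token, and it ignores the KV cache, activations, and any runtime overhead. The weight precisions in the loop are illustrative assumptions; the summary does not state which quantization is used.

```python
def weight_footprint_gb(n_params: float, bits_per_weight: float) -> float:
    """Size of the weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


def max_decode_tokens_per_s(bandwidth_gb_s: float, footprint_gb: float) -> float:
    """Upper bound on batch-1 decode speed if every weight is read once per token."""
    return bandwidth_gb_s / footprint_gb


N_PARAMS = 7e9         # from the text: a 7B-parameter model
MEMORY_GB = 4.0        # from the text: device memory
BANDWIDTH_GB_S = 20.0  # from the text: upper bound on available bandwidth

for bits in (16, 8, 4):  # assumed precisions, not taken from the source
    size = weight_footprint_gb(N_PARAMS, bits)
    fits = size < MEMORY_GB
    rate = max_decode_tokens_per_s(BANDWIDTH_GB_S, size)
    print(f"{bits:>2}-bit: {size:5.2f} GB, fits in 4 GB: {fits}, at most {rate:.1f} tokens/s")
```

Under these assumptions, only the 4-bit case (3.5 GB of weights) fits in 4 GB, and even perfect use of 20 GB/s caps decoding at roughly 5.7 tokens per second. This is why getting bandwidth utilization close to the theoretical limit matters so much on this class of device.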

This work is a significant engineering advance for deploying AI capabilities directly on resource-constrained devices, enabling new applications while addressing the security and privacy concerns of cloud-based alternatives.