Enabling LLMs on Edge Devices

A distributed approach to running LLM inference collaboratively across multiple edge devices

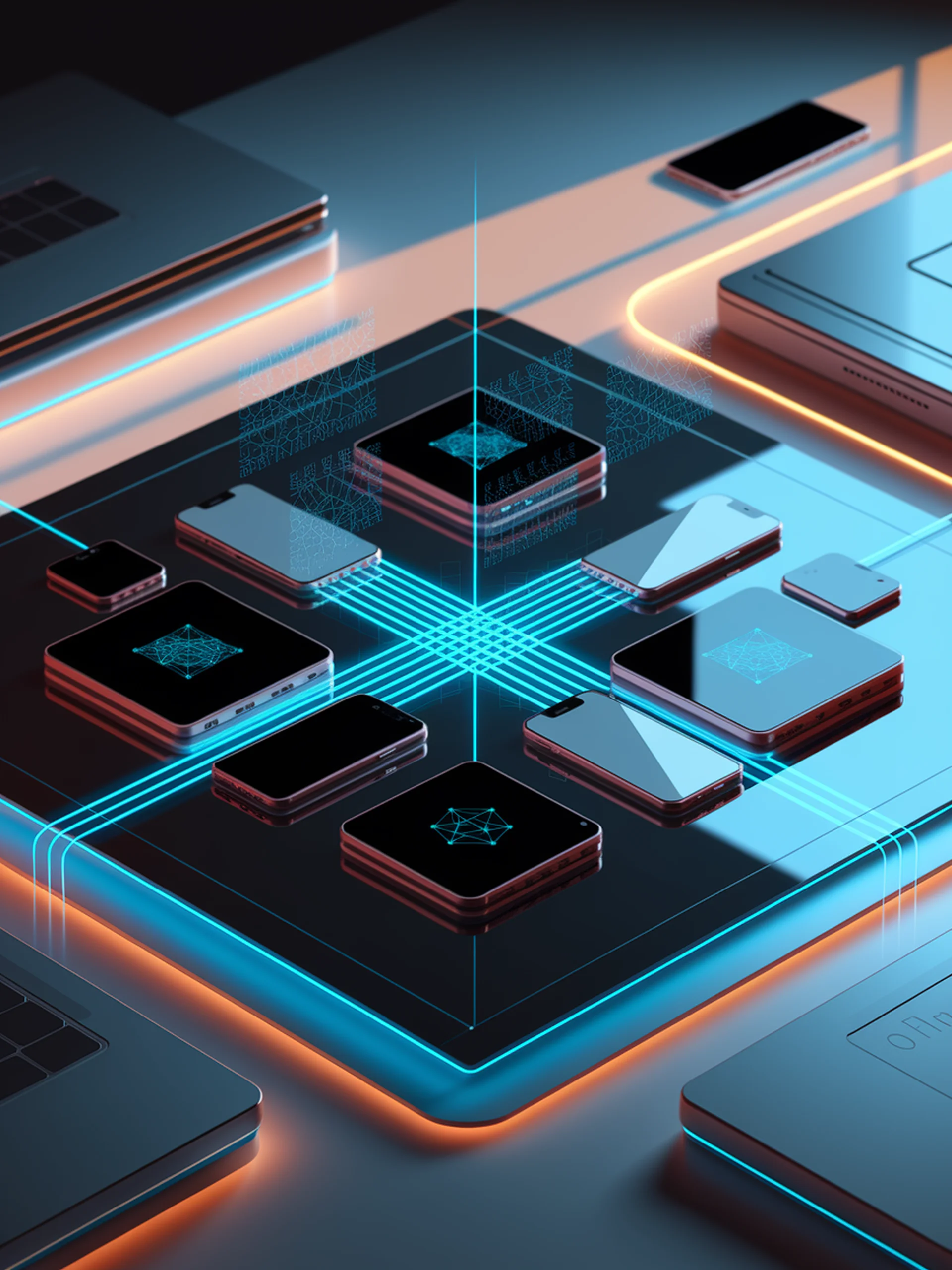

This research introduces a novel distributed on-device LLM inference framework in which resource-constrained edge devices collaboratively run a large language model through tensor parallelism: each device stores and computes on only a slice of the model's weight tensors.
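To make the tensor-parallel idea concrete, here is a minimal sketch of one linear layer y = W x split across devices. It assumes a column-wise split of W (and a matching split of x), as in the row-parallel linear layers of Megatron-style tensor parallelism. The helper names `matvec`, `split_columns` and `tensor_parallel_matvec` are hypothetical, and the paper's exact partitioning scheme may differ. Each device computes a partial output from its shard, and the partial outputs are summed (an all-reduce) to recover the full result.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Dense matrix-vector product y = W x on a single device."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in w]


def split_columns(w: Matrix, x: Vector, num_devices: int) -> List[Tuple[Matrix, Vector]]:
    """Split W by columns and x by entries into num_devices contiguous shards."""
    n = len(x)
    bounds = [round(k * n / num_devices) for k in range(num_devices + 1)]
    shards = []
    for k in range(num_devices):
        lo, hi = bounds[k], bounds[k + 1]
        w_k = [row[lo:hi] for row in w]  # columns lo..hi-1 of W
        x_k = x[lo:hi]                   # matching entries of x
        shards.append((w_k, x_k))
    return shards


def tensor_parallel_matvec(w: Matrix, x: Vector, num_devices: int) -> Vector:
    """Compute W x as a sum of per-device partial products (hypothetical helper)."""
    # Each simulated device multiplies only its local shard.
    partials = [matvec(w_k, x_k) for w_k, x_k in split_columns(w, x, num_devices)]
    # All-reduce step: element-wise sum of the partial outputs.
    return [sum(vals) for vals in zip(*partials)]
```

For example, with `W = [[1, 2, 3, 4], [5, 6, 7, 8]]`, `x = [1, 1, 1, 1]` and two devices, the devices produce `[3, 11]` and `[7, 15]`; their sum `[10, 26]` equals the single-device product. The summation is the only step that needs communication, which is why its cost dominates when the devices are linked wirelessly.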

- Partitions neural network tensors across multiple edge devices for collaborative inference

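- Uses over-the-air computation, which exploits the signal superposition of the wireless multiple-access channel, to aggregate the devices' partial results during transmission and reduce communication overhead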

- Tackles the problem that a single resource-limited device often lacks the memory and compute to host a large LLM on its own

- Opens new possibilities for privacy-preserving AI at the edge, since inference can run on local devices instead of a remote server
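A minimal simulation of over-the-air aggregation, assuming each device knows its own channel gain, pre-inverts it with a common scaling factor, and the receiver sees additive complex Gaussian noise. The function `aircomp_sum` and its parameters `channels`, `noise_std` and `eta` are hypothetical, and the paper's actual transceiver design may differ. All devices transmit their partial vectors at the same time, the channel adds the signals, and the receiver reads off a noisy sum directly instead of decoding each device separately.

```python
import cmath
import random
from typing import List, Optional


def aircomp_sum(partials: List[List[float]], channels: List[complex],
                noise_std: float, eta: float = 1.0,
                rng: Optional[random.Random] = None) -> List[float]:
    """Estimate the element-wise sum of partials[k] from one joint transmission.

    Illustrative assumptions (not the paper's exact design):
    - perfect channel knowledge: device k knows its complex gain h_k,
    - channel-inversion precoding: device k scales its symbols by sqrt(eta) / h_k,
    - the receiver adds complex Gaussian noise and rescales by 1 / sqrt(eta).
    """
    rng = rng or random.Random(0)
    gain = cmath.sqrt(eta)
    estimate = []
    for i in range(len(partials[0])):
        # The multiple-access channel superposes every device's precoded symbol.
        received = sum(h * (gain / h) * s[i] for h, s in zip(channels, partials))
        noise = complex(rng.gauss(0.0, noise_std), rng.gauss(0.0, noise_std))
        # Undo the common scaling; the sum of real-valued partials is the real part.
        estimate.append(((received + noise) / gain).real)
    return estimate
```

With three devices holding `[1.0, 2.0]`, `[3.0, 4.0]` and `[0.5, -1.0]`, a noiseless call returns approximately `[4.5, 5.0]`, and the residual error is the noise divided by √η. Under a per-device transmit-power budget, channel inversion with a shared η forces η to be no larger than the budget times the weakest device's channel power, so a single poor link raises the effective noise for everyone.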

This engineering advance matters because pooling the memory and compute of several modest devices could make large models usable without high-end hardware, potentially changing how AI is deployed in IoT ecosystems, mobile applications, and distributed systems.

Source paper: Distributed On-Device LLM Inference With Over-the-Air Computation