Optimizing LLMs for Edge Devices

Enabling high-throughput language models on resource-constrained hardware

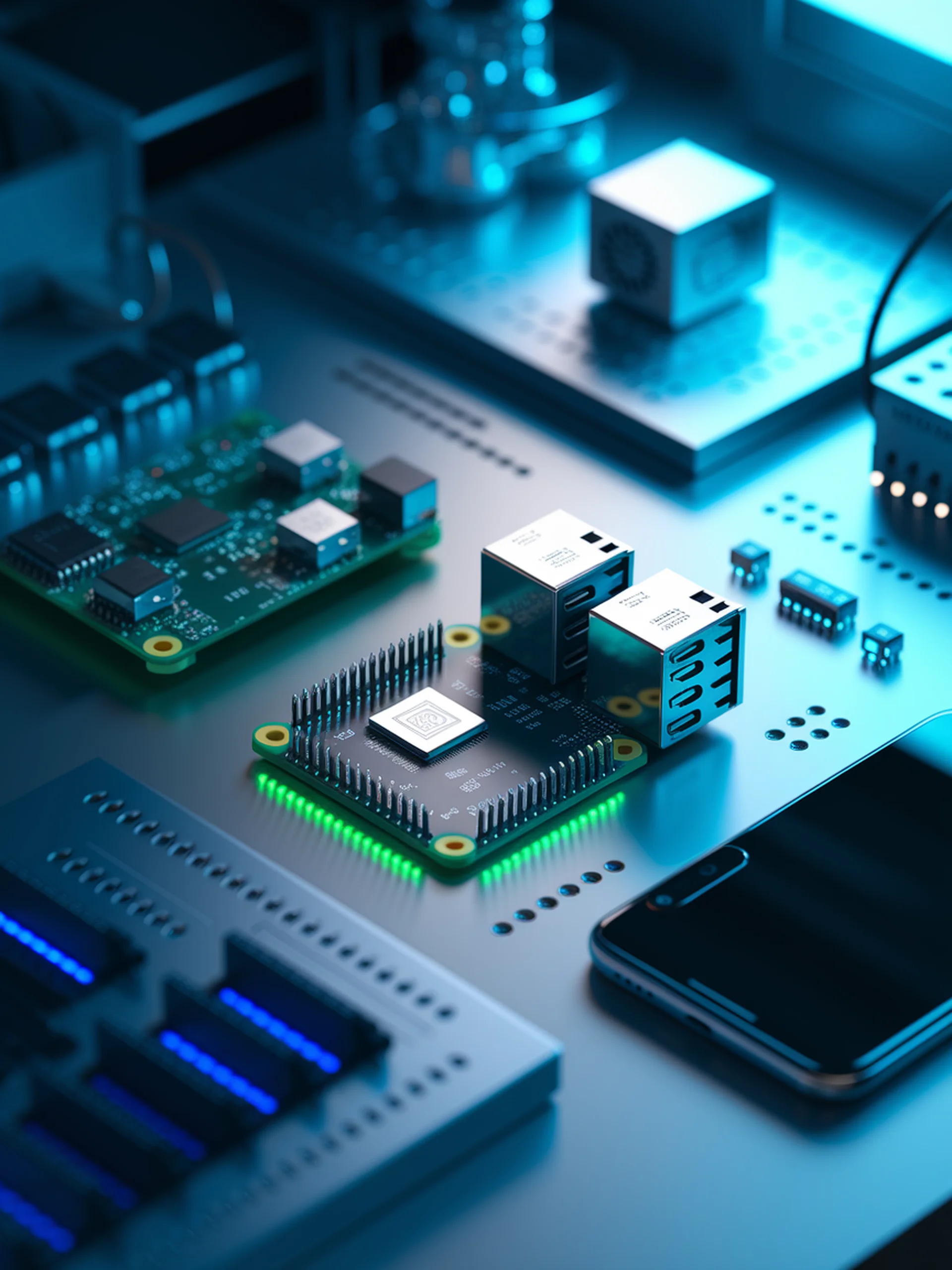

This research demonstrates how quantization techniques enable efficient LLM deployment on devices as small as a Raspberry Pi, making advanced AI accessible at the edge.

- Leverages k-quantization, a post-training quantization (PTQ) technique, to reduce computational demands

- Supports multiple bit-widths (2-bit, 4-bit, 6-bit) for flexible performance trade-offs

- Achieves significant improvements in throughput and energy efficiency

- Enhances data privacy by enabling local processing without cloud dependencies
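
To make the bit-width trade-off concrete, the sketch below quantizes a set of synthetic weights block by block at 2, 4, and 6 bits and compares the reconstruction error. It is a simplified illustration, not the paper's implementation or the actual k-quant storage format: the block size of 32, the 16-bit per-block scale, the symmetric rounding scheme, and every function name here are assumptions chosen for clarity.

```python
import random

def quantize_block(weights, bits):
    """Symmetric quantization of one block to signed integer codes."""
    qmax = 2 ** (bits - 1) - 1  # largest code: 1 for 2-bit, 7 for 4-bit, 31 for 6-bit
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    codes = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return scale, codes

def quantize(weights, bits, block_size=32):
    """Split weights into blocks; each block keeps its own scale (assumed layout)."""
    return [
        quantize_block(weights[i:i + block_size], bits)
        for i in range(0, len(weights), block_size)
    ]

def dequantize(blocks):
    """Rebuild approximate float weights from (scale, codes) pairs."""
    restored = []
    for scale, codes in blocks:
        restored.extend(scale * c for c in codes)
    return restored

def mean_abs_error(original, restored):
    return sum(abs(a - b) for a, b in zip(original, restored)) / len(original)

def bits_per_weight(bits, block_size=32, scale_bits=16):
    """Storage cost per weight, including the per-block scale overhead."""
    return bits + scale_bits / block_size

# Synthetic stand-in for a weight tensor (hypothetical data, fixed seed).
rng = random.Random(0)
weights = [rng.gauss(0.0, 0.02) for _ in range(1024)]

errors = {}
for bits in (2, 4, 6):
    restored = dequantize(quantize(weights, bits))
    errors[bits] = mean_abs_error(weights, restored)
    print(f"{bits}-bit: {bits_per_weight(bits):.2f} bits/weight, "
          f"mean abs error {errors[bits]:.5f}")
```

Lower bit-widths shrink the stored model and the memory traffic per token, at the cost of a larger reconstruction error. That is the trade-off a deployment has to balance against the memory and power budget of the target device.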

For engineering teams, this approach makes it possible to embed sophisticated AI capabilities directly into resource-limited IoT devices, industrial sensors, and consumer products without requiring constant cloud connectivity.

Original Paper: LLMPi: Optimizing LLMs for High-Throughput on Raspberry Pi