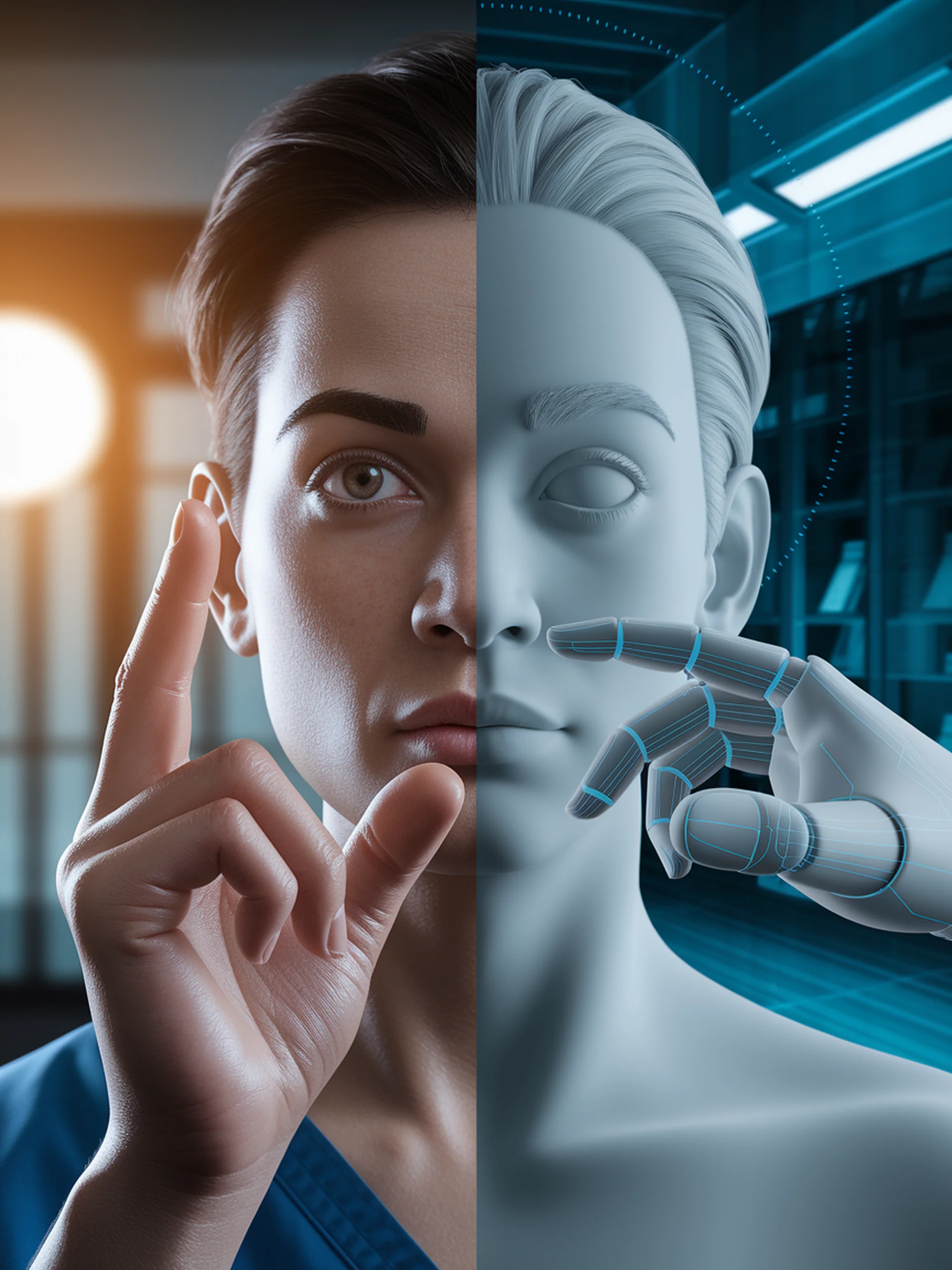

Breaking Barriers with Cued Speech AI

MLLM-Driven Hand Modeling for Accessible Communication

This research introduces a semi training-free approach to automatic Cued Speech recognition that uses multimodal large language models (MLLMs) to improve communication access for people with hearing impairments.

- Combines lip-reading with hand coding for more effective visual communication

- Uses MLLMs to reduce dependence on complex fusion modules and extensive training data

- Achieves improved recognition performance despite the limited size of available Cued Speech datasets

- Implements hand modeling techniques that require minimal specialized training
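
To make the first point above concrete, here is a minimal sketch of why pairing lip-reading with hand cues helps: sounds that look alike on the lips can be separated by the hand cue, and vice versa. The group tables, group names, and the `disambiguate` helper are hypothetical toy values chosen for illustration; they are not a real cue chart and not the method described in this research.

```python
# Toy illustration only: these groupings are assumptions, not a real
# Cued Speech chart and not the recognition model from the research.

# Sounds that look similar on the lips (hypothetical viseme groups).
LIP_GROUPS = {
    "bilabial": {"p", "b", "m"},
    "labiodental": {"f", "v"},
}

# Sounds sharing one hand shape (hypothetical cue groups).
HAND_GROUPS = {
    "shape_A": {"p", "d"},
    "shape_B": {"b", "n"},
    "shape_C": {"m", "f", "t"},
}


def disambiguate(lip_group: str, hand_shape: str) -> set[str]:
    """Return the sounds consistent with both the lip and the hand cue."""
    return LIP_GROUPS[lip_group] & HAND_GROUPS[hand_shape]


if __name__ == "__main__":
    # Lips alone leave three candidates; adding the hand cue narrows them.
    print(disambiguate("bilabial", "shape_A"))
    print(disambiguate("labiodental", "shape_C"))
```

In this toy setup each modality alone is ambiguous, but their intersection identifies a single sound. A real recognizer has to cope with noisy, time-misaligned cues, which is where learned models come in.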

This advancement could improve healthcare accessibility by enabling more effective assistive technology for the hearing-impaired community, potentially reducing communication barriers in medical settings and everyday life.