Medical Accessibility and Assistive Technologies

Research on LLMs for developing assistive technologies for patients with disabilities or impairments, including communication aids and accessibility solutions

Research on Large Language Models in Medical Accessibility and Assistive Technologies

Breaking Barriers in Sign Language Technology

A Retrieval-Enhanced Approach to Multilingual Sign Language Generation

AI-Powered Throat Wearable Restores Natural Speech

Breakthrough technology combining wearable sensors with LLMs for stroke patients

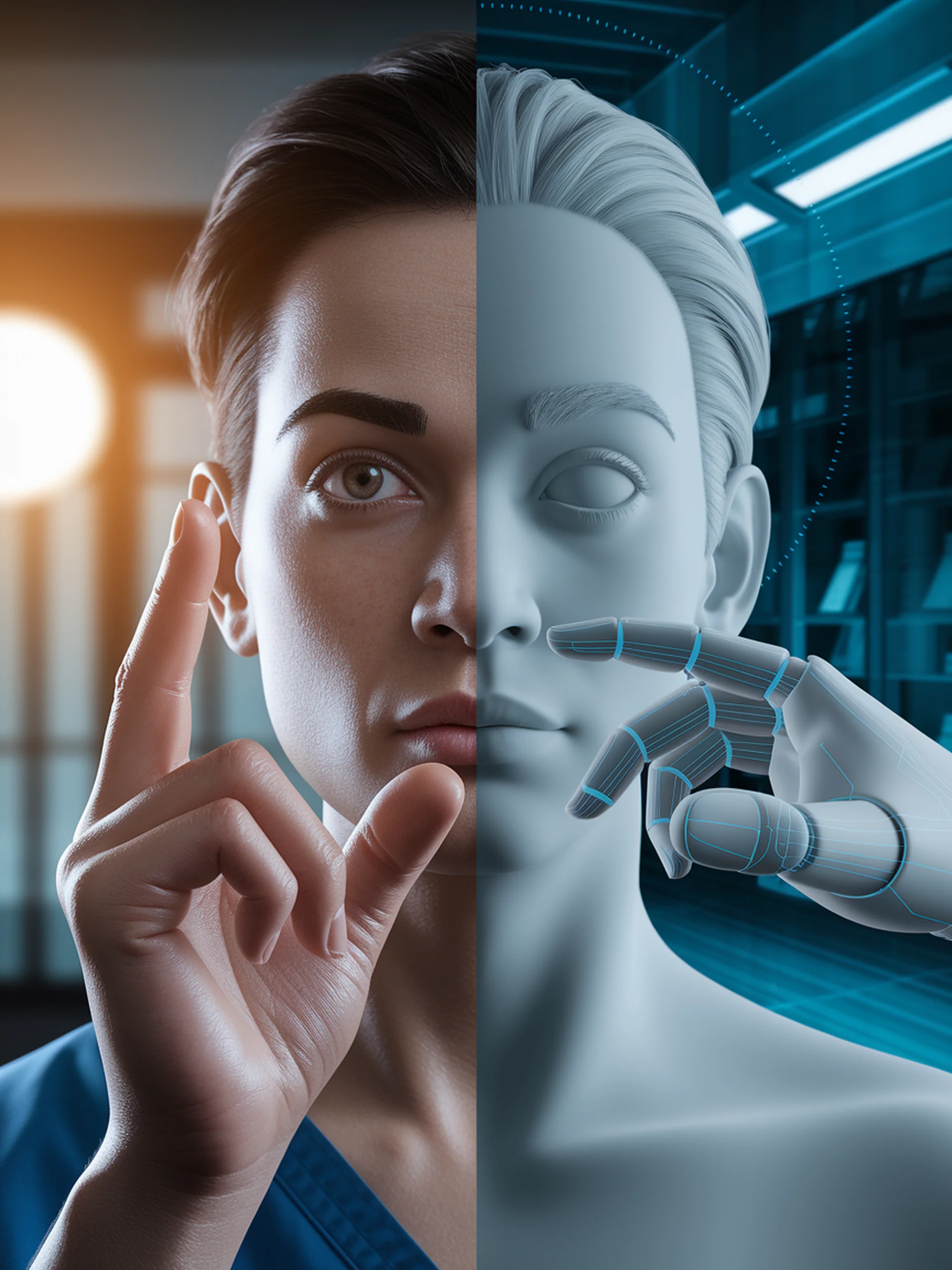

Merging Minds and Machines

How LLMs are Revolutionizing Brain-Computer Interfaces for Communication

AI-Powered Navigation for the Visually Impaired

Using Vision-Language Models to Guide Independent Walking

Bridging the Human-Robot Gap for Elderly Care

Multimodal Fusion of Voice and Gestures via LLMs

Measuring the Storytelling Power of Images

A framework for quantifying semantic complexity in visual content

Enhancing AI Vision with Ego-Augmented Learning

Using Egocentric Perspectives to Improve Understanding of Daily Activities

Enhancing AAC with Advanced Language Models

Making character-based communication faster and more accurate for users with disabilities

Breaking Down Medical Jargon

Using LLMs to improve health literacy through plain language adaptation

Decoding Thoughts Into Text

Breakthrough in Neural Spelling BCI Systems

Enhancing Vision in AI: The SPARC Approach

Solving the visual attention decay problem in multimodal large language models

SensorChat: Revolutionizing Sensor Data Interaction

The first end-to-end QA system for long-term multimodal sensor monitoring

Making Medical Information Patient-Friendly

AI-Powered Q&A Generation from Discharge Letters

Enhancing AI Understanding of Daily Activities

Skeleton-based approach improves vision-language models for healthcare applications

Echo-Teddy: Advancing Support for Autism Education

LLM-powered social robot creating meaningful interactions for autistic students

Advancing Emotional Intelligence in AI

Benchmarking and enhancing emotional capabilities in multimodal LLMs

Multimodal Empathy: Beyond Text-Only Support

Creating emotionally intelligent avatars with text, speech, and visual cues

Optimizing Vision-Language Models for Edge Devices

Advancing VLMs for resource-constrained environments in healthcare and beyond

AI-Powered Speech Analysis for Dementia Detection

Using robotic conversational systems to identify cognitive impairment markers

Revolutionizing BCI with Language Models

Enhancing brain-computer interfaces through LLM integration

Gesture-Enhanced Speech Recognition

Bridging communication gaps for patients with language disorders

Smart Environments Enhanced by Context-Aware AI

Merging Location Data, Activity Recognition and LLMs for Personalized Interactions

Optimizing AI Navigation Aids for the Visually Impaired

Understanding BLV User Preferences in LVLM Responses

Empowering the Visually Impaired with AI Vision

How Large Multimodal Models Transform Daily Life for People with Visual Impairments

ChatMotion: Revolutionizing Human Motion Analysis

A Multi-Agent Approach for Responsive Motion Intelligence

MLLMs for Parent-Child Communication

Leveraging AI to understand joint attention in early language development

AI-Powered Speech Recall Assessment

Automating multilingual speech comprehension evaluation with LLMs

Teaching AI to Hear Stress in Speech

Fine-tuning Whisper ASR for inclusive prosodic analysis

MLLMs for the Visually Impaired

How Multimodal LLMs are Transforming Visual Interpretation Tools

Next-Gen Wi-Fi Sensing

Overcoming Generalizability Challenges in Wireless Sensing Technologies

EgoBlind: Enhancing AI Vision for the Visually Impaired

The first egocentric VideoQA dataset from blind individuals' perspectives

AI-Enhanced Neural Implants for PTSD

A dual-loop system integrating brain implants with AI wearables

GuideDog: AI Vision for the Visually Impaired

A groundbreaking multimodal dataset to enhance mobility for the blind and low-vision community

Gaze-Driven AI Assistants

LLM-Enhanced Robots That Read Your Intentions

Advanced Skeletal Action Recognition

Enhancing Security and Surveillance Through Relational Graph Networks

Revolutionizing Human-Robot Interaction with Gaze and Speech

Foundation Models Enable More Natural, Accessible Robot Control

AI Advocates for Disabled Students

Using Social Robots to Support Mediation in Higher Education

Explaining Smart Home AI with LLMs

Making activity recognition transparent and trustworthy

Speak Ease: Giving Voice to Everyone

Using LLMs to Enhance Expressive Communication for Speech-Impaired Individuals

Smart Video Counting with AI

Using LLMs to Count Repetitive Actions Across Diverse Scenarios

Enhancing AI's Social Intelligence

Using Iterative Loop Structures to Improve Video Understanding

Breaking Barriers with Cued Speech AI

MLLM-Driven Hand Modeling for Accessible Communication

Making AI See for the Blind

Evaluating Multimodal LLMs as Visual Assistants for Visually Impaired Users

AI-Powered Scene Understanding for Assistive Robots

Enhancing mobility solutions with advanced semantic segmentation

AI-Powered Language Analysis for Children's Speech

NLP Solutions for More Efficient Developmental Language Assessments

AI-Powered Reading Support for Dyslexia

Using LLMs to enhance reading comprehension in real-time

Breaking Boundaries in IMU-Based Activity Recognition

Leveraging LLMs for Fine-Grained Movement Detection

Seamless Information Gathering in Conversations

How AI can naturally acquire user data through engaging chats

SceneScout: Unlocking Street Views for Blind Users

AI Agent Technology for Pre-Travel Visual Exploration

RadarLLM: Privacy-First Motion Understanding

Leveraging Large Language Models for Radar-Based Human Motion Analysis