Smart Networks That Learn Privately

Miniaturized AI models for efficient network self-optimization

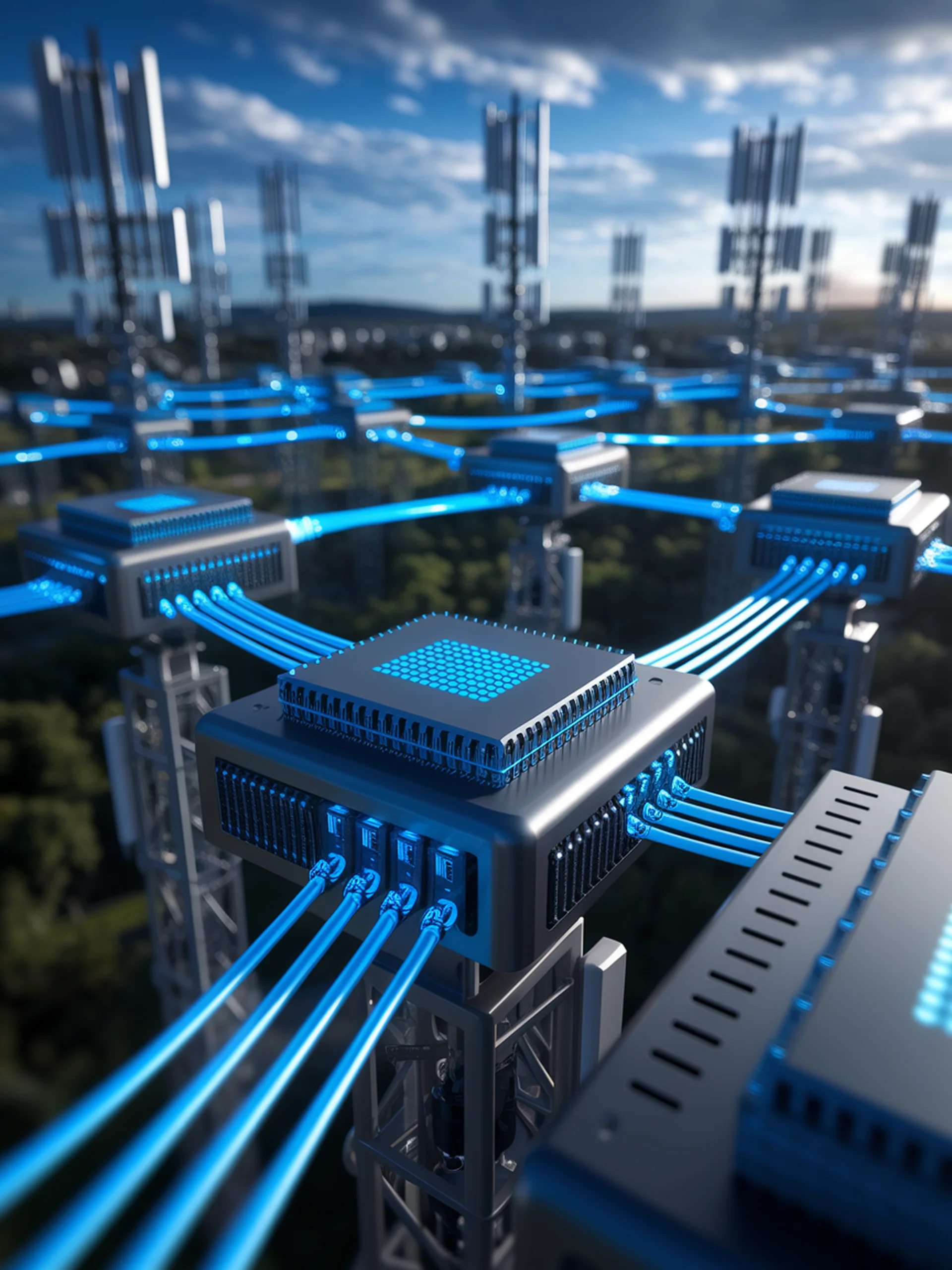

This research introduces a federated learning approach for telecommunications networks that enables collaborative AI training while preserving data privacy.

- Compressed tiny language models (TinyLLMs) are small enough to deploy efficiently on mobile network equipment

- Federated learning allows multiple network cells to train models together without sharing sensitive data

- The models reach over 90% accuracy in predicting network features at a significantly reduced model size

- Creates self-optimizing networks that automatically adjust configurations based on predicted requirements
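
The collaborative training step described in the list can be illustrated with a minimal sketch of federated averaging (FedAvg), a common aggregation rule for federated learning. The summary does not say which aggregation rule the authors use, so FedAvg is an assumption here. A two-parameter linear model stands in for the TinyLLM, and the names `CELL_DATA`, `local_update`, `federated_average`, and `run_rounds` are hypothetical. The point it shows is that each cell trains on its own samples and only model weights leave the cell.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[float, float]  # (input feature x, target y)

# Hypothetical private datasets, one per network cell. They are never pooled.
# Targets follow y = 2x + 1 so the expected result is easy to check.
CELL_DATA: List[List[Sample]] = [
    [(x, 2 * x + 1) for x in (0.0, 0.2, 0.4)],
    [(x, 2 * x + 1) for x in (0.5, 0.7, 0.9, 1.0)],
    [(x, 2 * x + 1) for x in (0.1, 0.6)],
]


def local_update(weights: Sequence[float], samples: Sequence[Sample],
                 lr: float = 0.1, epochs: int = 20) -> List[float]:
    """Train on one cell's private data, starting from the global weights.

    Runs full-batch gradient descent on mean squared error for y = w*x + b.
    """
    w, b = weights
    n = len(samples)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in samples) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in samples) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]


def federated_average(client_weights: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """Average client weights, weighting each client by its sample count."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


def run_rounds(cell_data: Sequence[Sequence[Sample]],
               rounds: int = 100) -> List[float]:
    """Alternate local training and server-side averaging for several rounds."""
    global_weights = [0.0, 0.0]
    for _ in range(rounds):
        # Each cell sees only its own samples; only weights are sent back.
        updates = [local_update(global_weights, data) for data in cell_data]
        sizes = [len(data) for data in cell_data]
        global_weights = federated_average(updates, sizes)
    return global_weights


if __name__ == "__main__":
    w, b = run_rounds(CELL_DATA)
    print(f"learned w={w:.3f}, b={b:.3f}")
```

Weighting by sample count keeps a cell with little traffic from pulling the shared model as hard as a busy one. A real deployment would replace the linear model with the compressed language model and would likely add secure aggregation or similar protections, neither of which is shown here.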

For telecommunications engineering, this work is a step toward Autonomous Networks that self-optimize, self-repair, and self-protect while maintaining data security and operational efficiency.

Efficient Federated Learning Tiny Language Models for Mobile Network Feature Prediction