Smarter, Leaner LLMs

A Two-Stage Approach to Efficient Model Pruning

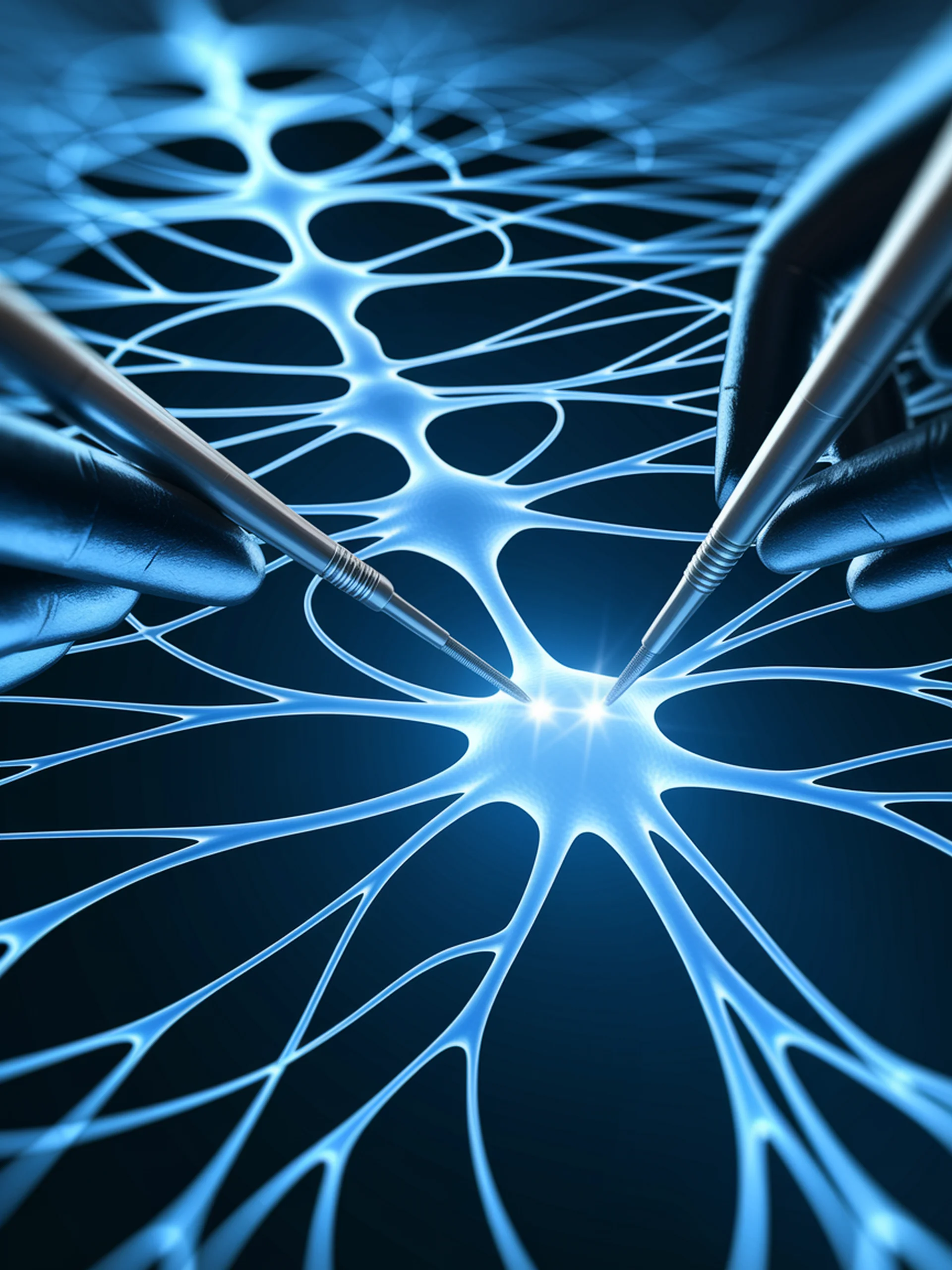

Researchers developed a framework that reduces LLM size through two structured pruning stages while preserving performance.

- Width Pruning: The first stage removes entire neurons from the Feed-Forward Networks while preserving the networks' connectivity

- Depth Pruning: The second stage identifies and removes the less important Transformer blocks

- Balanced Approach: Combining both stages significantly reduces the parameter count while maintaining model integrity

- Engineering Impact: Enables more efficient deployment of LLMs in resource-constrained environments
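
The source does not say how neurons are scored in the width-pruning stage, so the sketch below assumes a simple magnitude heuristic: each hidden neuron of a two-layer FFN is scored by the norm of its incoming weights times the norm of its outgoing weights, and the lowest-scoring neurons are dropped. The function names (`neuron_scores`, `prune_ffn_width`, `ffn_forward`) and the toy weights are hypothetical. Removing neuron j means deleting row j of the up-projection, its bias, and column j of the down-projection, so the layer's input and output sizes stay the same.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def neuron_scores(w_up: Matrix, w_down: Matrix) -> List[float]:
    # Assumed heuristic (not from the source):
    # score_j = ||incoming weights of j|| * ||outgoing weights of j||.
    scores = []
    for j, row in enumerate(w_up):
        in_norm = math.sqrt(sum(v * v for v in row))
        out_norm = math.sqrt(sum(out_row[j] ** 2 for out_row in w_down))
        scores.append(in_norm * out_norm)
    return scores


def prune_ffn_width(
    w_up: Matrix, b_up: List[float], w_down: Matrix, keep: int
) -> Tuple[Matrix, List[float], Matrix, List[int]]:
    scores = neuron_scores(w_up, w_down)
    # Indices of the `keep` highest-scoring neurons, restored to original order.
    ranked = sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)
    kept = sorted(ranked[:keep])
    new_up = [list(w_up[j]) for j in kept]                 # drop rows of the up-projection
    new_b = [b_up[j] for j in kept]                        # drop the matching biases
    new_down = [[row[j] for j in kept] for row in w_down]  # drop columns of the down-projection
    return new_up, new_b, new_down, kept


def ffn_forward(x: List[float], w_up: Matrix, b_up: List[float], w_down: Matrix) -> List[float]:
    # ReLU FFN: hidden = relu(W_up x + b), output = W_down hidden.
    hidden = [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(w_up, b_up)]
    return [sum(w * h for w, h in zip(row, hidden)) for row in w_down]


# Toy FFN with d_model = 2 and d_ff = 4; neuron 1 has tiny weights.
w_up = [[1.0, 0.0], [0.01, 0.01], [0.0, 2.0], [0.5, 0.5]]
b_up = [0.0, 0.0, 0.0, 0.0]
w_down = [[1.0, 0.02, 0.0, 0.3], [0.0, 0.02, 1.0, 0.3]]

p_up, p_b, p_down, kept = prune_ffn_width(w_up, b_up, w_down, keep=3)
x = [1.0, 1.0]
print(kept)                                # [0, 2, 3]
print(ffn_forward(x, w_up, b_up, w_down))  # original output (length 2)
print(ffn_forward(x, p_up, p_b, p_down))   # pruned output, still length 2
```

In this toy case the pruned layer's output differs from the original by well under 0.001, and because the output length is unchanged, the next layer can consume it without modification. A real implementation would substitute whatever scoring criterion the framework actually uses.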
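
The source also does not specify how the depth-pruning stage measures block importance. The sketch below assumes one heuristic used in prior layer-pruning work: run calibration inputs through the stack and score each block by 1 minus the average cosine similarity between its input and output, so a block that barely changes the direction of the hidden state counts as unimportant. Blocks are modelled as plain Python functions on a 2-dimensional hidden state, and `block_importance`, `prune_depth`, and the toy blocks are hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Block = Callable[[Vector], Vector]


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / max(norms, 1e-12)  # guard against zero vectors


def block_importance(blocks: Sequence[Block], calib: Sequence[Vector]) -> List[float]:
    # Assumed score (not from the source): 1 - mean cosine(input, output) per block.
    scores = [0.0] * len(blocks)
    for x in calib:
        h = list(x)
        for i, block in enumerate(blocks):
            out = block(h)
            scores[i] += 1.0 - cosine(h, out)
            h = out  # feed this block's output into the next block
    return [s / len(calib) for s in scores]


def prune_depth(
    blocks: Sequence[Block], calib: Sequence[Vector], n_remove: int
) -> Tuple[List[Block], List[int]]:
    scores = block_importance(blocks, calib)
    # Drop the n_remove blocks with the lowest importance scores.
    drop = set(sorted(range(len(blocks)), key=lambda i: scores[i])[:n_remove])
    kept = [b for i, b in enumerate(blocks) if i not in drop]
    return kept, sorted(drop)


# Toy 4-block stack acting on 2-d hidden states.
def block0(h: Vector) -> Vector:
    return [h[0] + h[1], h[1]]  # shear: changes the direction of many inputs


def block1(h: Vector) -> Vector:
    return [1.001 * h[0], 1.001 * h[1]]  # rescale only: direction unchanged


def block2(h: Vector) -> Vector:
    return [h[0], h[1] + h[0]]  # shear in the other direction


def block3(h: Vector) -> Vector:
    return [h[0] + 0.01, h[1]]  # tiny nudge


calib = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
blocks = [block0, block1, block2, block3]
kept, dropped = prune_depth(blocks, calib, n_remove=2)
print(dropped)  # [1, 3]

# The pruned stack still runs end to end because every block maps 2-d to 2-d.
h = [1.0, 1.0]
for block in kept:
    h = block(h)
print(h)  # [2.0, 3.0]
```

One design consequence worth noting: `block1` scores near zero even though it rescales the hidden state, because cosine similarity ignores magnitude and only registers changes in direction.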

This research advances the model optimization techniques needed to deploy LLMs in production systems with limited computational resources.