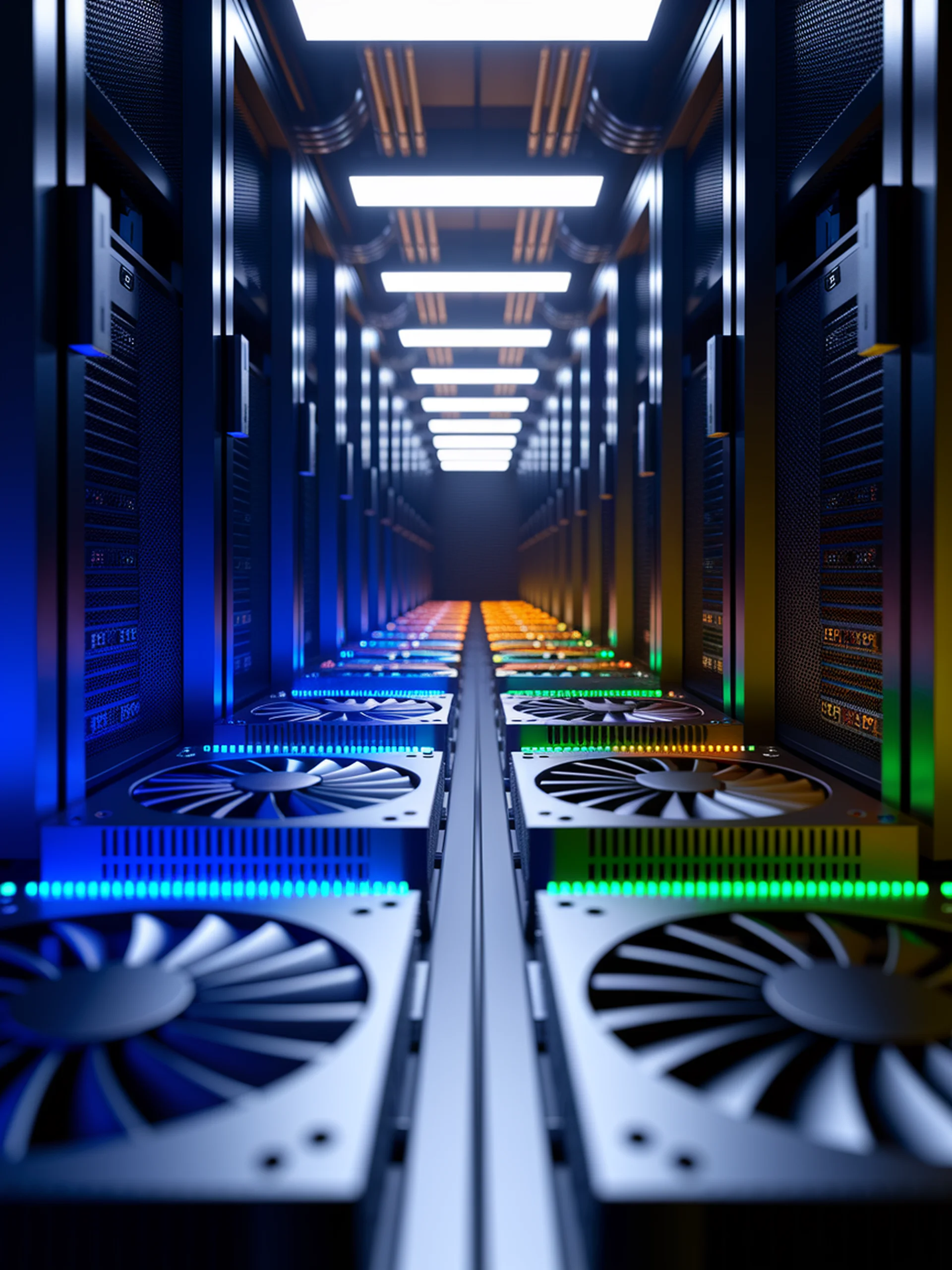

Maximizing GPU Efficiency in AI Training

Using Idle Resources for Inference During Training

SpecInF introduces an approach for using idle GPU resources during distributed deep learning training: it runs inference tasks in parallel with the training job.

- Addresses the 30-40% GPU idle time in typical distributed training setups

- Achieves 1.3-1.9x throughput improvement without affecting training performance

- Implements a smart scheduling system that predicts idle periods and dynamically allocates inference tasks

- Works seamlessly with popular deep learning frameworks including PyTorch
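The idea of predicting idle periods and filling them with inference work can be illustrated with a toy scheduler. This is a minimal sketch under stated assumptions, not SpecInF's actual algorithm: the exponential-moving-average predictor, the `safety` margin, and the names `IdlePredictor` and `fill_idle_window` are all hypothetical and chosen only for illustration.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class IdlePredictor:
    """Hypothetical predictor: exponential moving average of past idle gaps."""
    alpha: float = 0.5        # weight given to the newest observation (assumed)
    estimate_ms: float = 0.0  # current prediction; 0 until the first observation
    _seen: bool = False

    def observe(self, idle_ms: float) -> None:
        """Update the prediction with a newly measured idle gap."""
        if not self._seen:
            self.estimate_ms = idle_ms
            self._seen = True
        else:
            self.estimate_ms = (
                self.alpha * idle_ms + (1 - self.alpha) * self.estimate_ms
            )


def fill_idle_window(predicted_ms, queue, safety=0.8):
    """Pop (name, est_ms) tasks from the front of the queue while they fit.

    Only a fraction `safety` of the predicted window is used, so that a
    mispredicted window is less likely to delay the training job.
    """
    budget = predicted_ms * safety
    scheduled = []
    while queue and queue[0][1] <= budget:
        name, est_ms = queue.popleft()
        scheduled.append(name)
        budget -= est_ms
    return scheduled


# Example: two observed idle gaps, then schedule from a pending queue.
predictor = IdlePredictor(alpha=0.5)
predictor.observe(10.0)
predictor.observe(20.0)

pending = deque([("req-a", 5.0), ("req-b", 6.0), ("req-c", 4.0)])
chosen = fill_idle_window(predictor.estimate_ms, pending)
print(chosen, list(pending))
```

The safety margin reflects the stated goal of leaving training performance unaffected: tasks that do not fit in the discounted window stay queued for the next idle period.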

By making fuller use of existing hardware, this approach improves infrastructure efficiency and reduces costs for AI deployments, which is particularly valuable for expensive LLM training operations.