AIBrix: Cutting Costs for LLM Deployment

A cloud-native framework for efficient, scalable LLM inference

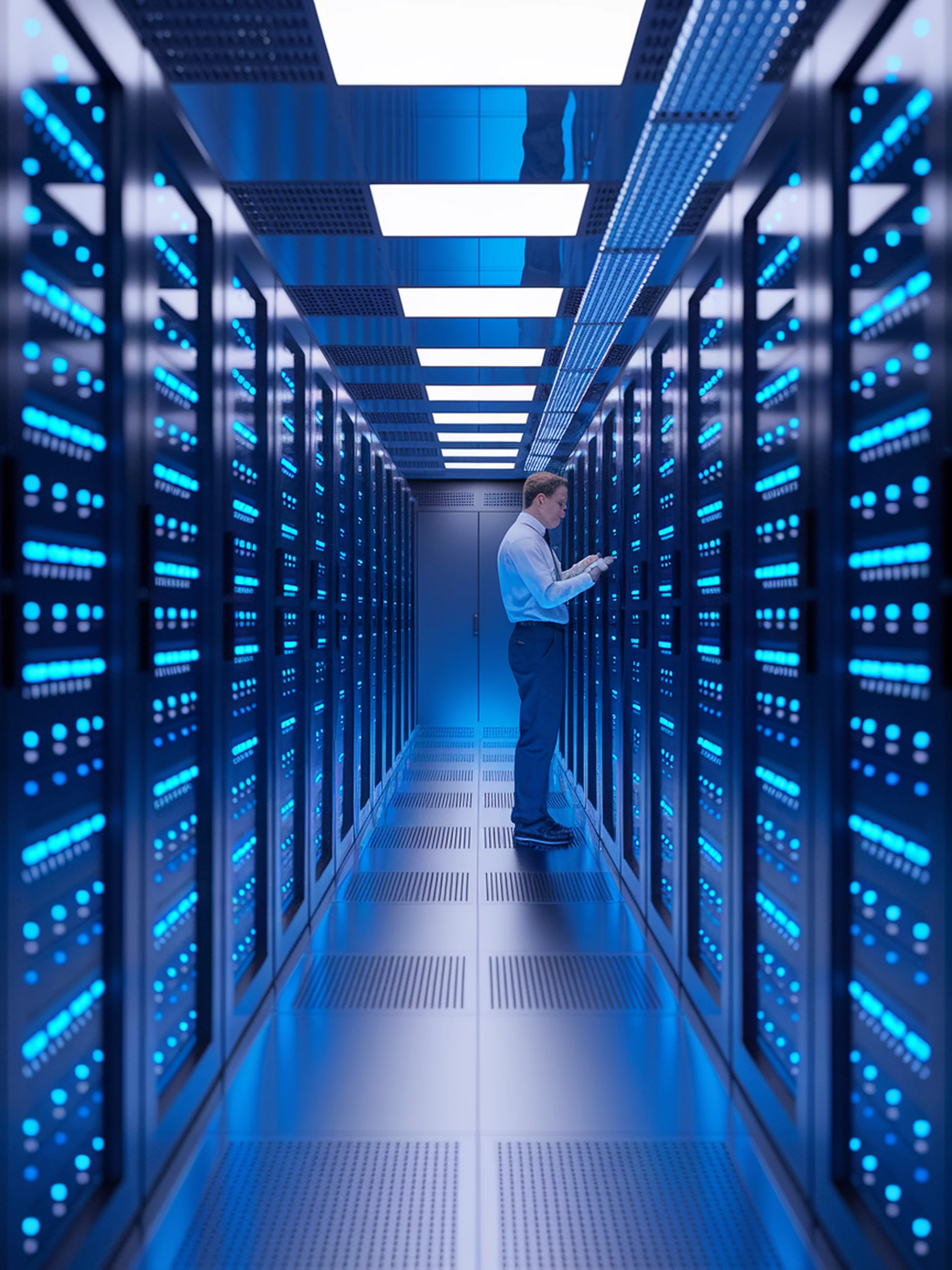

AIBrix introduces a purpose-built infrastructure framework designed to improve LLM deployment efficiency and reduce operational costs in cloud environments.

- Co-design philosophy: each infrastructure layer is built to integrate tightly with inference engines such as vLLM

- High-density LoRA management: LoRA adapters are loaded and scheduled dynamically, so many adapters can share the same base-model deployment

- Cloud-native architecture optimized specifically for large language model inference workloads

- Open-source solution that addresses the growing challenge of LLM deployment costs
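To make the LoRA bullet more concrete, here is a minimal sketch of what dynamic adapter scheduling can look like, assuming each GPU replica holds a fixed number of adapter slots next to one shared base model. `AdapterSlotCache` and `route` are hypothetical names used only for illustration, not AIBrix APIs, and the policy shown (LRU eviction plus routing to a replica that already holds the adapter) is one simple choice, not necessarily the one AIBrix uses.

```python
from collections import OrderedDict


class AdapterSlotCache:
    """Hypothetical LRU cache of LoRA adapters resident on one GPU replica.

    Sketch only: assumes a replica can hold at most `capacity` adapters
    alongside the shared base model. Loading is simulated, not real.
    """

    def __init__(self, capacity: int) -> None:
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        self._slots: OrderedDict = OrderedDict()  # adapter_id -> placeholder
        self.loads = 0      # adapters brought into (simulated) GPU memory
        self.evictions = 0  # adapters dropped to make room

    def has(self, adapter_id: str) -> bool:
        return adapter_id in self._slots

    def acquire(self, adapter_id: str) -> bool:
        """Make the adapter resident; return True on a cache hit."""
        if adapter_id in self._slots:
            self._slots.move_to_end(adapter_id)  # mark as most recently used
            return True
        if len(self._slots) >= self.capacity:
            self._slots.popitem(last=False)  # evict least recently used adapter
            self.evictions += 1
        self._slots[adapter_id] = None  # stand-in for loading adapter weights
        self.loads += 1
        return False

    def resident(self) -> list:
        """Adapters currently resident, least recently used first."""
        return list(self._slots)


def route(replicas: list, adapter_id: str) -> int:
    """Pick a replica index for a request (hypothetical policy).

    Prefer a replica that already holds the adapter (no reload needed);
    otherwise choose the replica with the fewest resident adapters.
    """
    for i, replica in enumerate(replicas):
        if replica.has(adapter_id):
            return i
    return min(range(len(replicas)), key=lambda i: len(replicas[i].resident()))


if __name__ == "__main__":
    replicas = [AdapterSlotCache(capacity=2), AdapterSlotCache(capacity=2)]
    for adapter in ["sql", "chat", "sql", "code", "chat"]:
        i = route(replicas, adapter)
        hit = replicas[i].acquire(adapter)
        print(adapter, "-> replica", i, "hit" if hit else "load")
```

In this sketch, only cache misses pay the cost of loading adapter weights, and the slot limit keeps per-replica memory bounded while many adapters share a small number of base-model replicas.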

This matters because it gives organizations a practical path to deploying LLMs at scale without prohibitive infrastructure costs, potentially broadening access to advanced AI capabilities.

AIBrix: Towards Scalable, Cost-Effective Large Language Model Inference Infrastructure