Breaking the LLM Inference Bottleneck

Boosting throughput with asynchronous KV cache prefetching

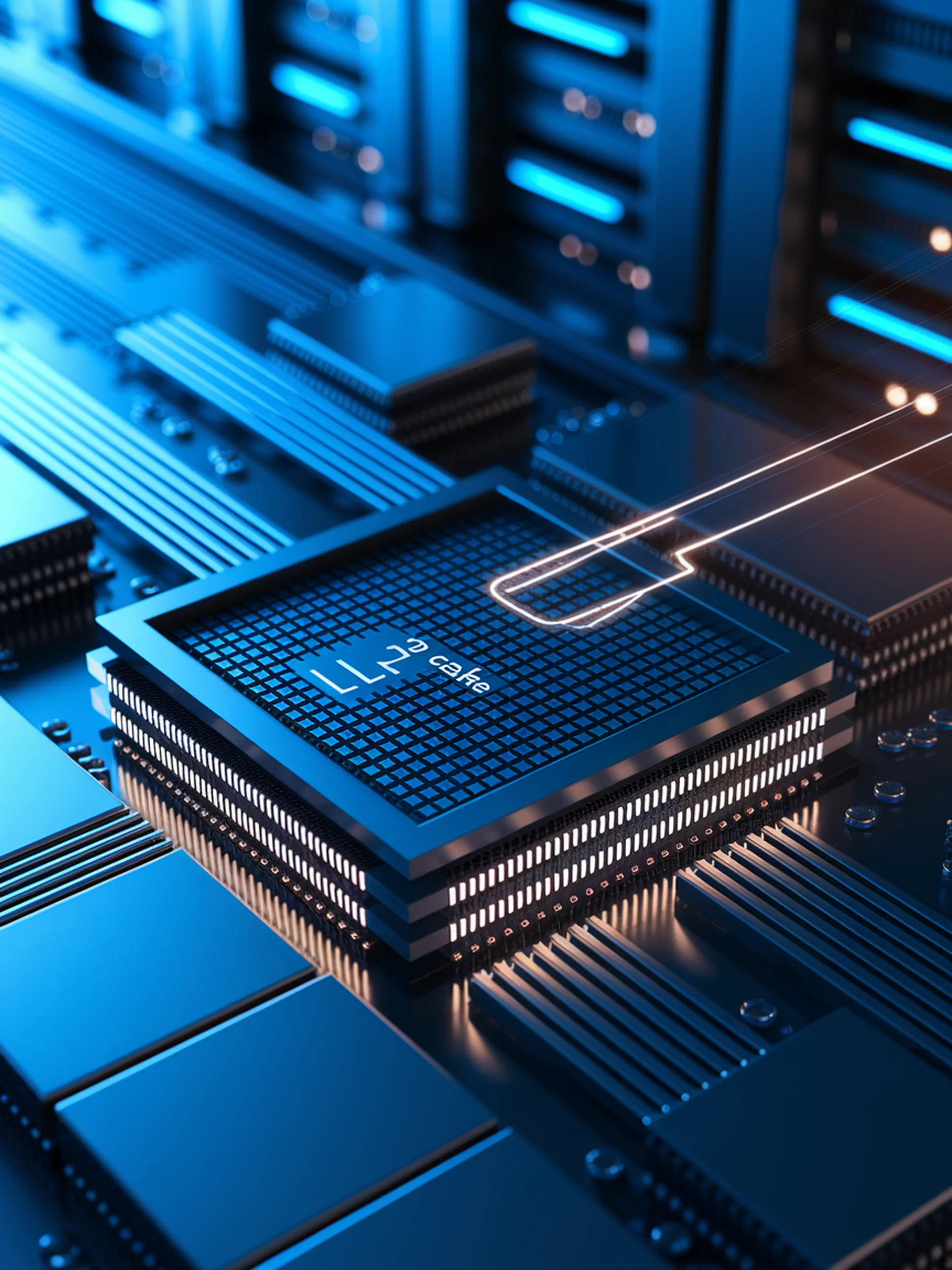

This research introduces an asynchronous KV cache prefetching technique built around the GPU L2 cache. It improves LLM inference speed by working around memory bandwidth limits rather than adding compute.

- Uses memory bandwidth that sits idle during computation phases

- Proactively loads the required KV cache data into the GPU L2 cache before it is needed

- Overlaps computation with memory loads, so compute spends less time waiting on data

- Directly targets the memory-bound bottleneck in LLM inference
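
To make the overlap idea in the points above concrete, here is a toy timing model in Python. It is only a sketch under stated assumptions, not the paper's implementation: the function names `serial_time` and `prefetched_time`, the one-block lookahead, and the example timings are all hypothetical, and a real GPU kernel would not behave this cleanly.

```python
def serial_time(compute, load):
    """Baseline: each block's KV load must finish before its compute starts."""
    return sum(c + m for c, m in zip(compute, load))


def prefetched_time(compute, load):
    """Assumed model: the load for block i+1 is issued when block i starts computing.

    Block i+1 can begin once both block i's compute and its own load are done,
    so each step costs max(compute[i], load[i+1]). The first load is never hidden.
    """
    if not compute:
        return 0
    total = load[0]
    for i, c in enumerate(compute):
        next_load = load[i + 1] if i + 1 < len(load) else 0
        total += max(c, next_load)
    return total


# Hypothetical per-block costs in arbitrary time units (memory-bound: load > compute).
compute = [2, 2, 2]
load = [3, 3, 3]

print(serial_time(compute, load))      # 15
print(prefetched_time(compute, load))  # 11
```

In this model the gain comes entirely from hiding the shorter of the two costs at each step. When loads dominate, as in the memory-bound case described here, prefetching hides the compute time behind the loads, and the first load stays fully exposed.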

For engineering teams optimizing LLM deployment, this approach offers a practical way to raise inference throughput on existing hardware, potentially reducing infrastructure costs while improving user experience.

Accelerating LLM Inference Throughput via Asynchronous KV Cache Prefetching