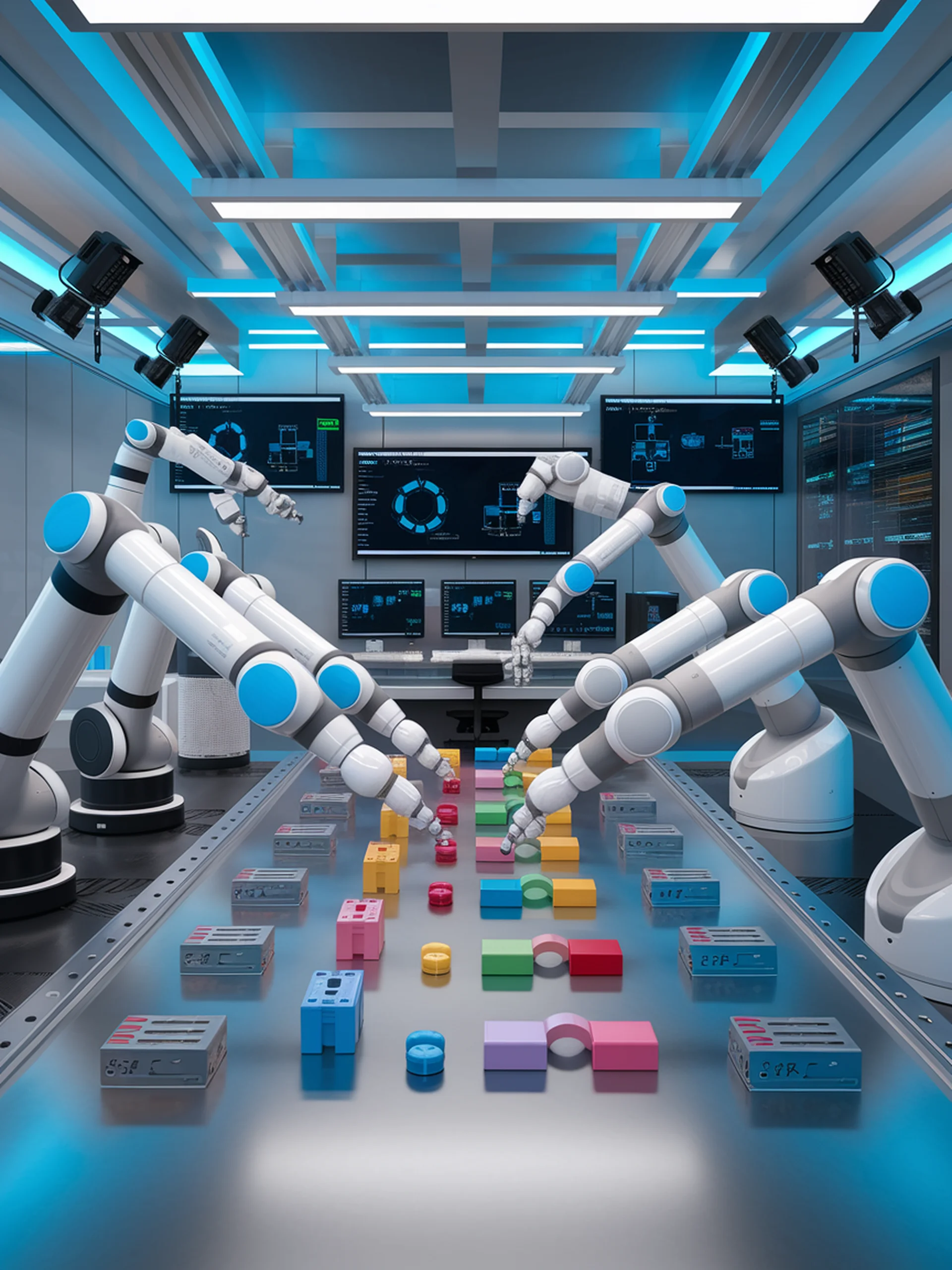

RoboMIND: Advancing Multi-Embodiment Robotics

A benchmark dataset for diverse robot manipulation tasks

RoboMIND is a dataset of 107,000 demonstration trajectories spanning 479 distinct manipulation tasks, intended for training and benchmarking robot manipulation policies that adapt across tasks and robot platforms.

- Covers 96 object classes, with multi-view observations and proprioceptive data recorded for each demonstration

- Spans multiple robot embodiments, including Franka, UR5e, and humanoid robots

- Provides consistently collected demonstrations for training and evaluating imitation-learning policies for manipulation

- Creates a foundation for cross-embodiment skill transfer in industrial applications

By offering a standardized benchmark, this work supports the development of more versatile robot manipulation systems for engineering and manufacturing environments.

RoboMIND: Benchmark on Multi-embodiment Intelligence Normative Data for Robot Manipulation