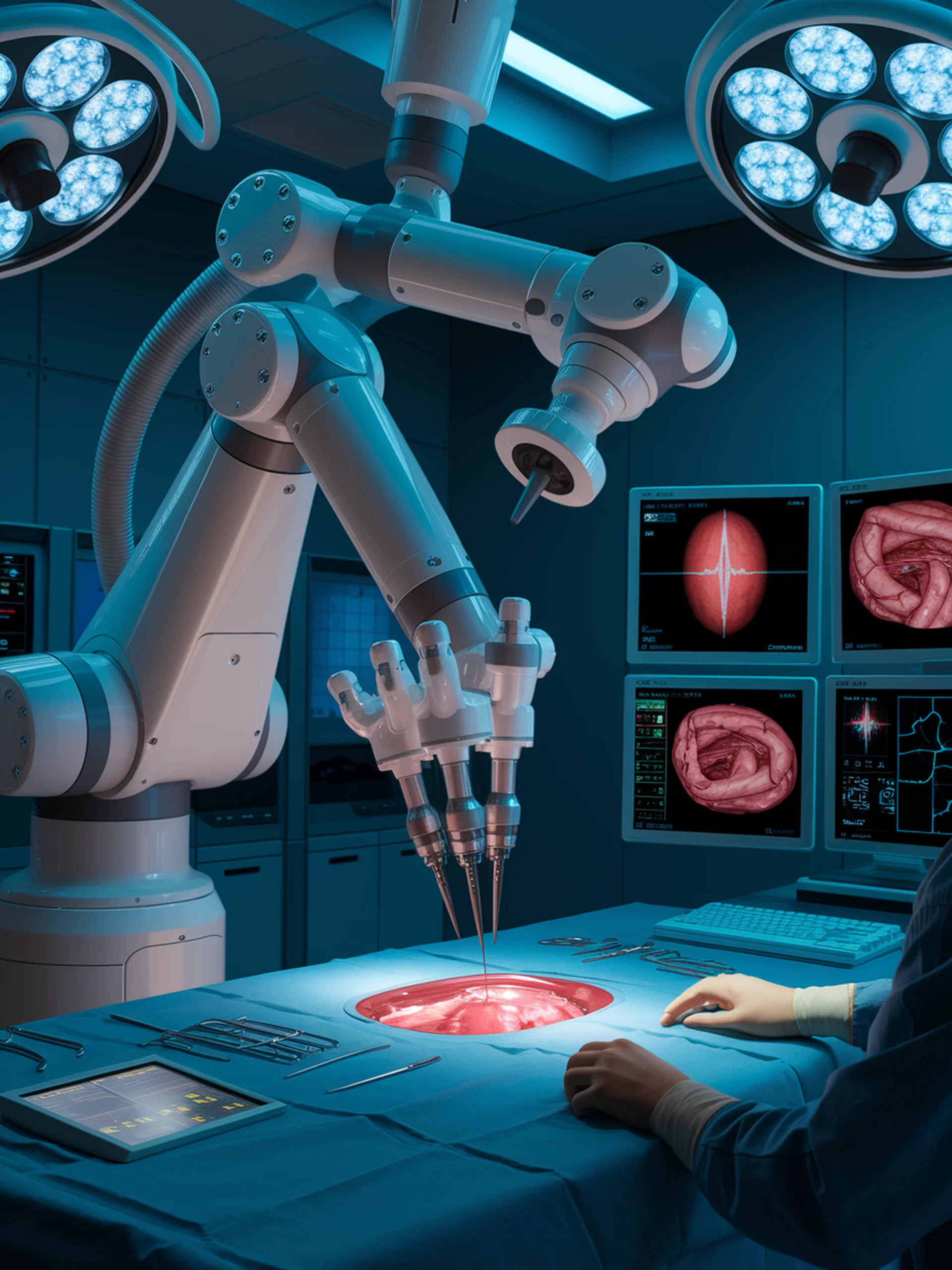

AI-Powered Surgical Assistants

Enhancing Robotic Surgery with Advanced Vision-Language Models

Surgical-LVLM adapts large vision-language models to answer complex visual questions during robotic surgical procedures, with improved accuracy and the ability to ground its answers in the relevant regions of the surgical scene.

- Superior medical reasoning through the integration of specialized surgical knowledge

- Enhanced visual grounding that precisely identifies relevant anatomical structures

- Improved contextual understanding enabling more natural surgeon-AI interactions

- Demonstrated effectiveness on standard EndoVis datasets

This research represents a significant advance for medical robotics. By enabling AI systems to better understand surgical environments, it could help reduce surgical errors and improve patient outcomes through real-time visual guidance and automated surgical mentorship.