The Geometry of LLM Refusals

Uncovering Multiple Refusal Concepts in Language Models

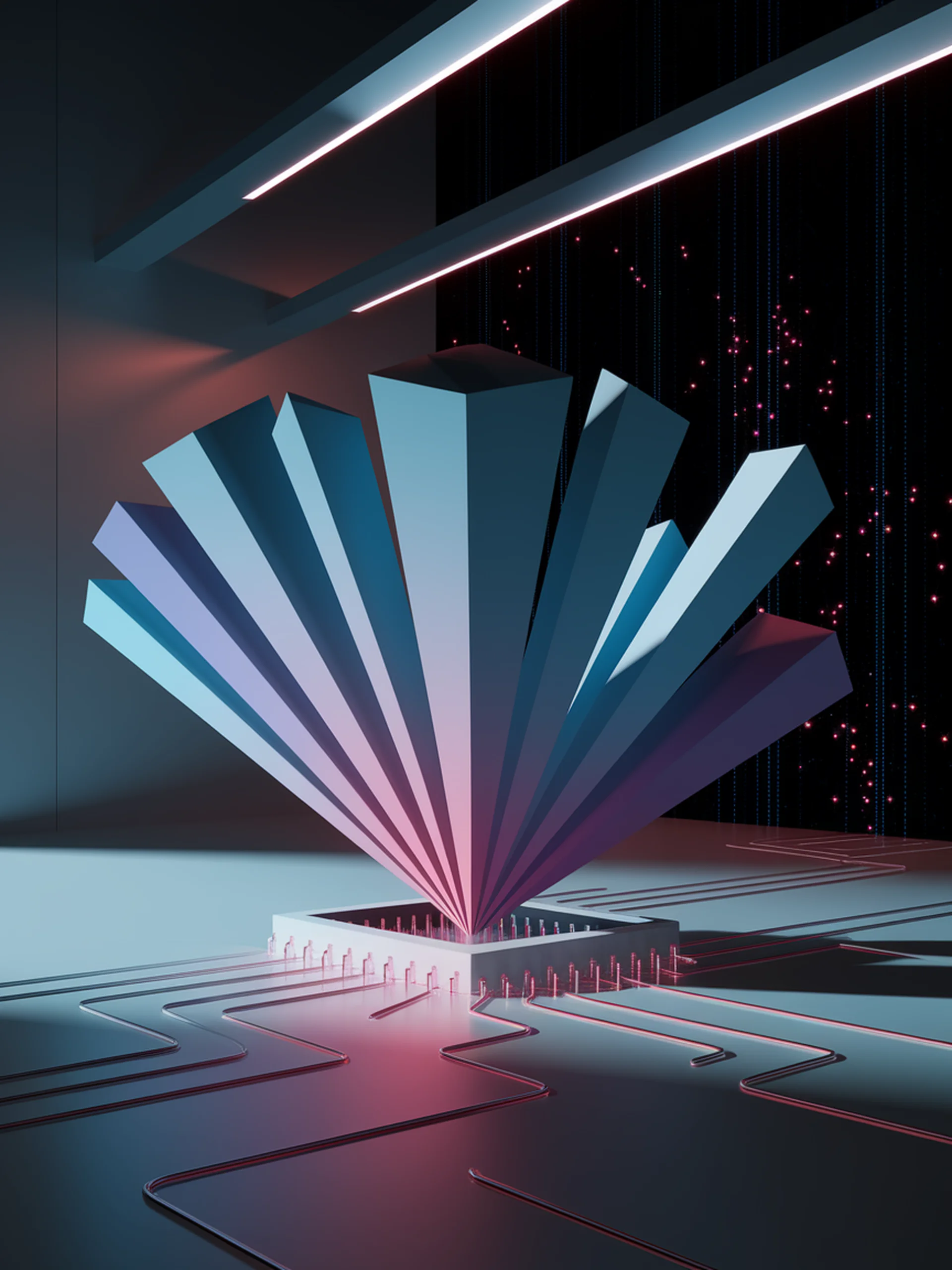

This research challenges the prevailing view that refusal in LLMs is governed by a single direction in activation space. Instead, it reveals multiple independent refusal concepts, which exist as concept cones in that space.

- LLMs contain multiple refusal directions rather than just one

- Different refusal concepts (violence, sexuality, etc.) are representationally independent

- The research introduces a novel gradient-based approach to representation engineering

- These findings explain why some adversarial attacks easily bypass safety barriers
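To make the geometry concrete, here is a toy NumPy sketch of what it means for refusal to occupy a cone spanned by several directions instead of a single vector. It is only an illustration on synthetic vectors, not the gradient-based method the research introduces: the two orthonormal "refusal directions", the dimension `d`, and the helper names `ablate` and `sample_from_cone` are all assumptions made up for this example.

```python
import numpy as np

def ablate(h, directions):
    """Remove from h its component in the span of the given directions."""
    # QR gives an orthonormal basis for the span, so the projection is
    # valid even if the input directions are not orthogonal.
    q, _ = np.linalg.qr(np.asarray(directions, dtype=float).T)  # shape (d, k)
    return h - q @ (q.T @ h)

def sample_from_cone(basis, rng):
    """Draw a unit vector that is a non-negative combination of the basis."""
    coeffs = rng.uniform(0.0, 1.0, size=len(basis))
    v = coeffs @ np.asarray(basis, dtype=float)
    return v / np.linalg.norm(v)

rng = np.random.default_rng(0)
d = 16                      # hypothetical activation dimension
basis = np.eye(d)[:2]       # two hypothetical orthonormal refusal directions
h = rng.normal(size=d)      # synthetic stand-in for a model activation

h_one = ablate(h, basis[:1])   # remove only the first direction
h_both = ablate(h, basis)      # remove the whole two-dimensional span
r = sample_from_cone(basis, rng)

print("component along direction 2 after ablating direction 1:", h_one @ basis[1])
print("component along a sampled cone direction after ablating both:", h_both @ r)
```

In this toy setting, ablating one direction leaves the component along the second direction unchanged, while ablating the full span removes the component along every direction sampled from the cone. Whether real model activations behave this way is an empirical question that the sketch does not address.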

For security teams, this work offers insight into how refusal behavior is represented inside LLMs, which could enable more robust defenses against adversarial attacks that attempt to circumvent content restrictions.