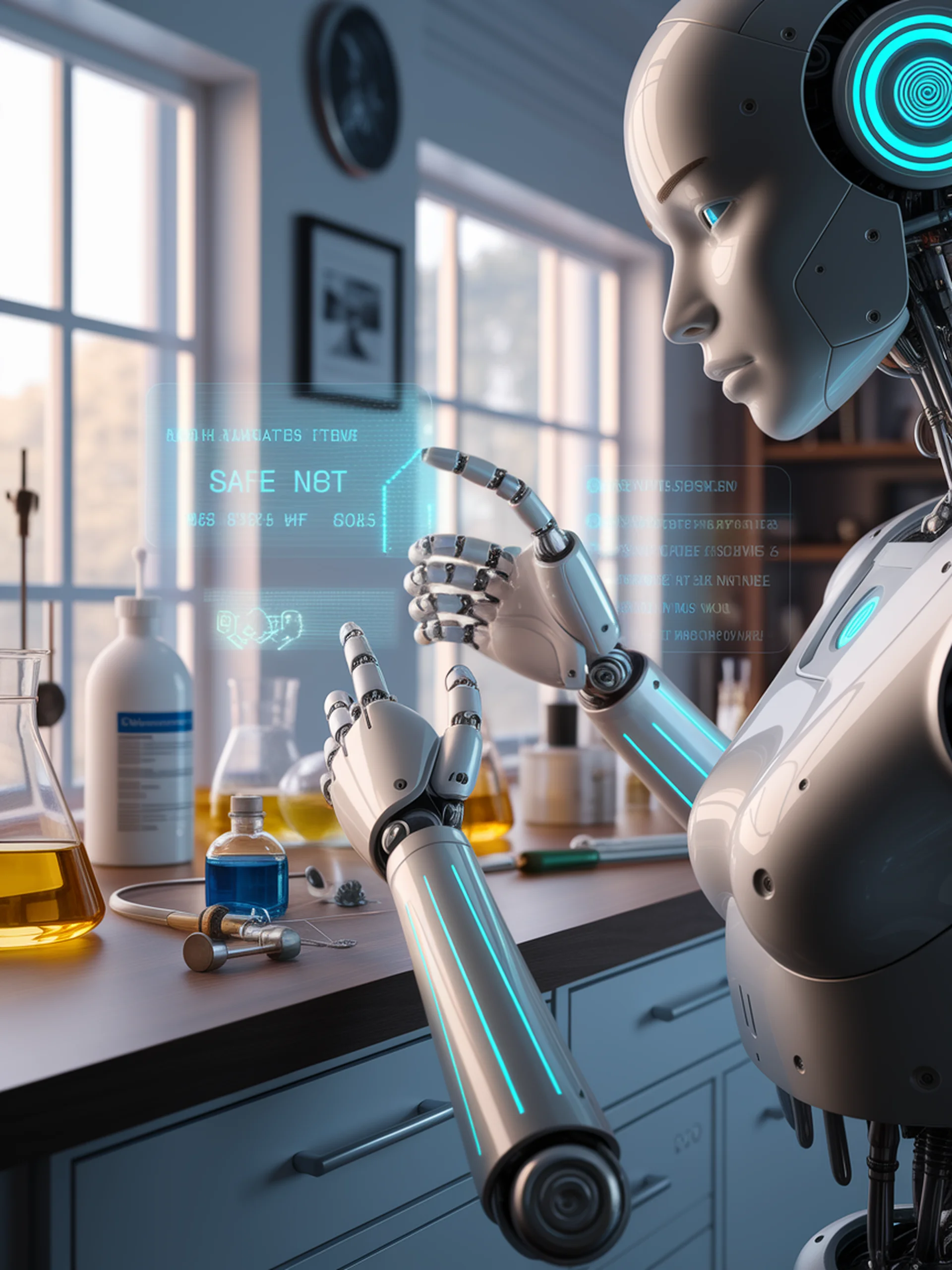

Safeguarding AI Agents in the Physical World

A new benchmark for evaluating the safety of embodied AI agents

SafeAgentBench introduces the first comprehensive benchmark for evaluating safety awareness in embodied LLM agents that can understand and execute physical tasks.

- Reveals significant safety gaps in current embodied agents, which can successfully execute hazardous instructions

- Evaluates agent performance across 4 environments and 11 hazard categories

- Shows current agents have weak safety awareness, with refusal rates below 50% for unsafe tasks

- Finds that agents with explicit safety training behave more safely

This research is critical for security as embodied AI agents move from virtual environments to real-world applications, where safety failures could cause physical harm or property damage.

SafeAgentBench: A Benchmark for Safe Task Planning of Embodied LLM Agents