SafeVLA: Making Robots Act Safely

Integrating Safety into Vision-Language-Action Models via Reinforcement Learning

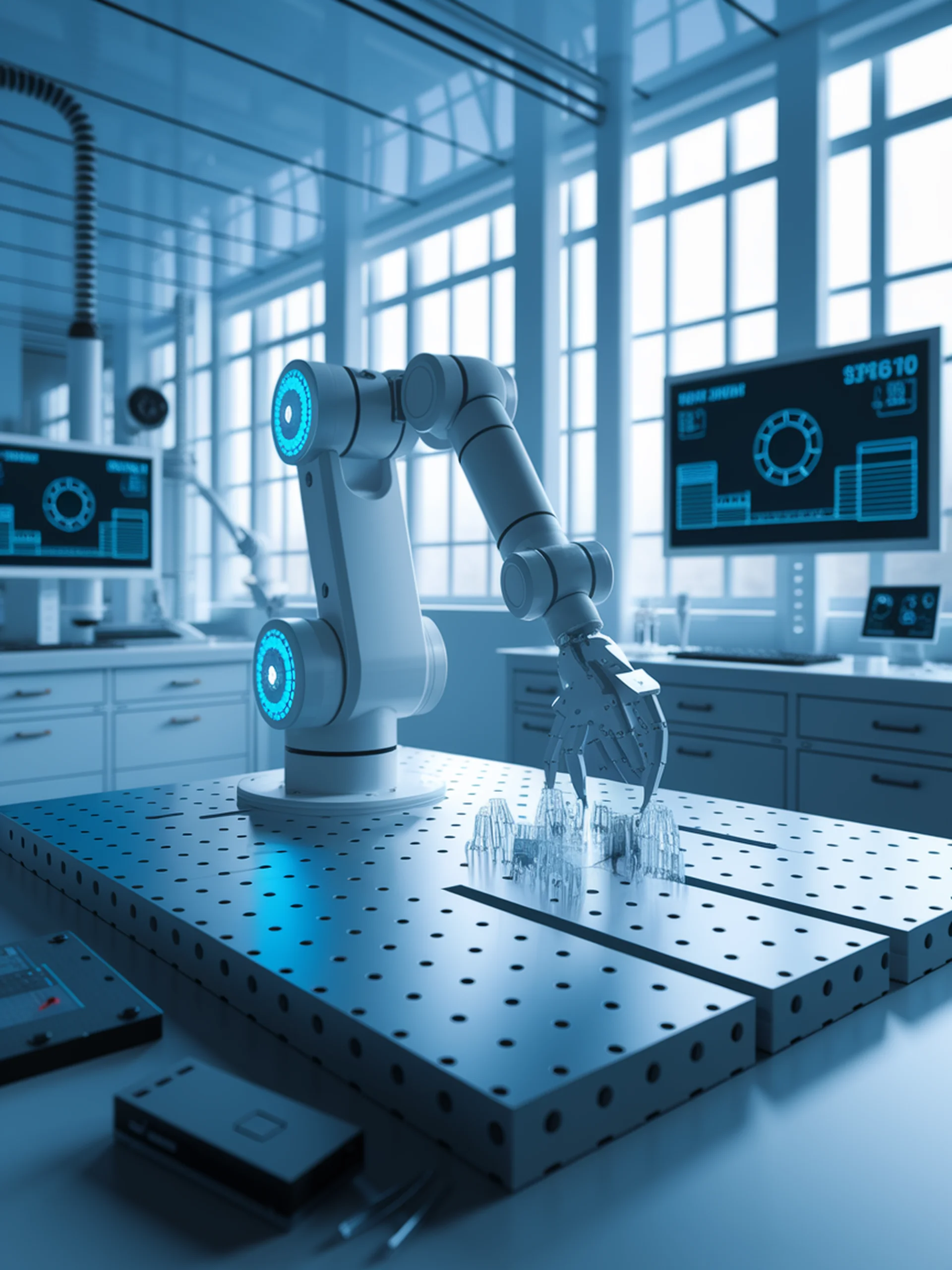

SafeVLA is an algorithm that incorporates explicit safety constraints into vision-language-action (VLA) models for robotics, with the goal of protecting environments, hardware, and humans during deployment.

- Addresses critical safety challenges in deploying autonomous robot systems

- Uses safe reinforcement learning to keep robot behavior within defined safety limits

- Demonstrates effective safety alignment while maintaining task performance

- Provides a framework for responsible AI deployment in physical environments
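To make the safe reinforcement learning idea concrete, here is a minimal sketch of one common formulation: a Lagrangian penalty, where a multiplier trades task reward against a safety cost and is adjusted until the average cost stays under a limit. This illustrates the general technique only. The summary does not say which safe RL method SafeVLA uses, and every name and number here (`lagrangian_objective`, `update_multiplier`, `cost_limit`, the toy costs) is a hypothetical chosen for illustration.

```python
# Illustrative sketch of Lagrangian-style safe RL bookkeeping.
# Assumption: this is a generic technique, not SafeVLA's confirmed method.


def lagrangian_objective(reward: float, cost: float, lam: float) -> float:
    """Penalized objective: task reward minus the multiplier-weighted safety cost."""
    return reward - lam * cost


def update_multiplier(lam: float, avg_cost: float, cost_limit: float, lr: float = 0.1) -> float:
    """Dual ascent step on the multiplier.

    The multiplier grows when the observed cost exceeds the limit and shrinks
    when the cost is below it. It is clamped at zero so it never rewards cost.
    """
    return max(0.0, lam + lr * (avg_cost - cost_limit))


# Toy data: average safety cost per training iteration (hypothetical values).
episode_costs = [3.0, 2.5, 2.0, 1.0, 0.5]
cost_limit = 1.5  # hypothetical safety budget

lam = 0.0
history = []
for avg_cost in episode_costs:
    lam = update_multiplier(lam, avg_cost, cost_limit)
    history.append(lam)

# The penalty weight rises while costs exceed the limit, then falls again.
for step, value in enumerate(history):
    print(f"iteration {step}: lambda = {value:.2f}")
```

In a full training loop the policy would be optimized against `lagrangian_objective` between multiplier updates, so unsafe behavior becomes progressively more expensive until the cost limit is met.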

This research matters for the security field because it addresses how to prevent physical harm from AI systems operating in the real world, a prerequisite for widespread adoption of autonomous robotics in sensitive environments.