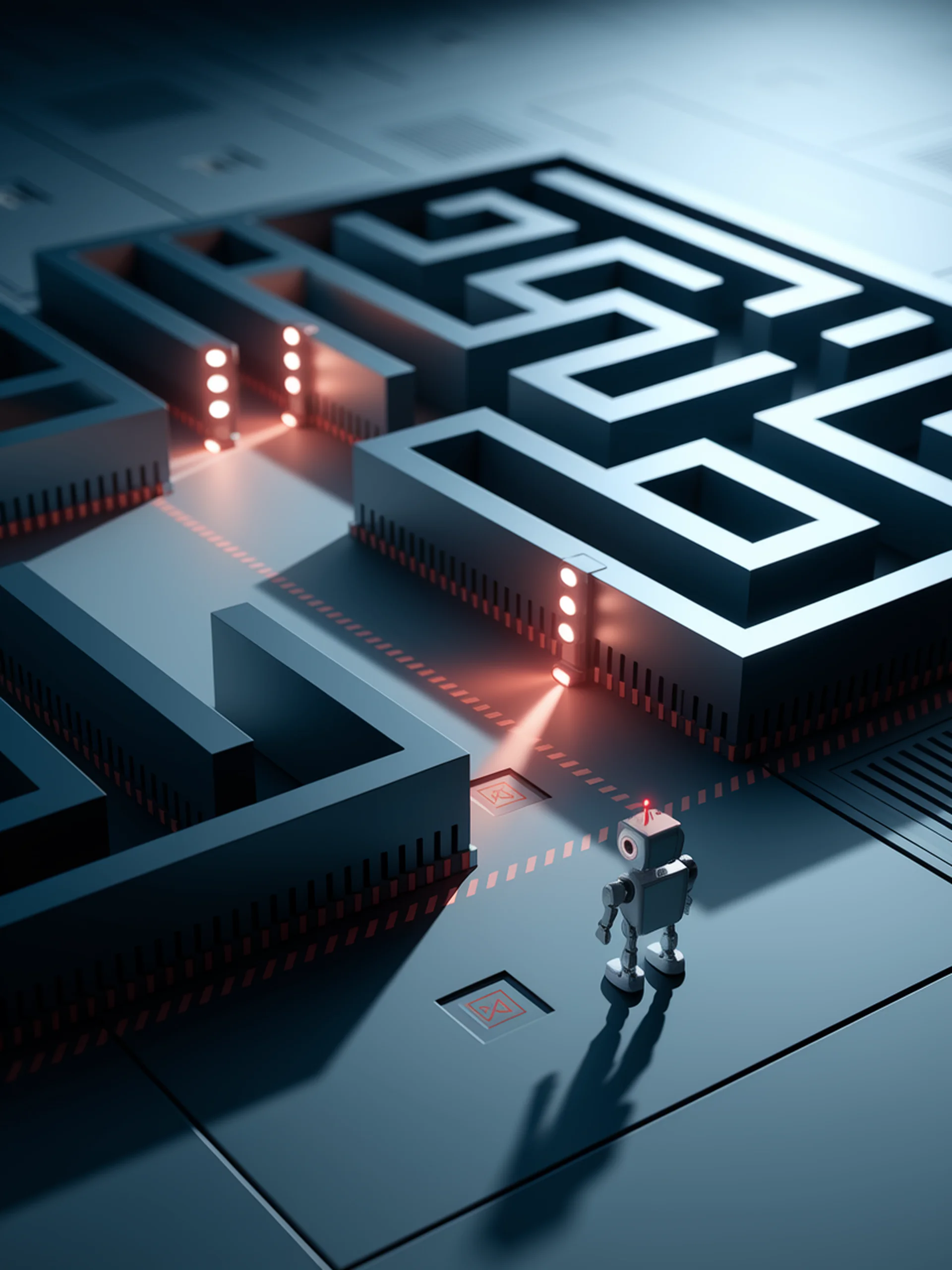

The Safety Paradox in LLMs

When Models Recognize Danger But Respond Anyway

This research reveals a critical gap in LLM safety: models can identify unsafe prompts yet still generate harmful responses.

- Current safety methods sacrifice performance or fail outside their training distribution

- Existing generalization techniques prove surprisingly insufficient

- Pure LLMs can detect unsafe inputs but respond unsafely anyway

- Researchers propose maintaining safe performance without degrading capabilities

For security teams, this highlights the need for more sophisticated safety mechanisms that preserve model utility while ensuring robust protection across diverse scenarios.

Maybe I Should Not Answer That, but... Do LLMs Understand The Safety of Their Inputs?