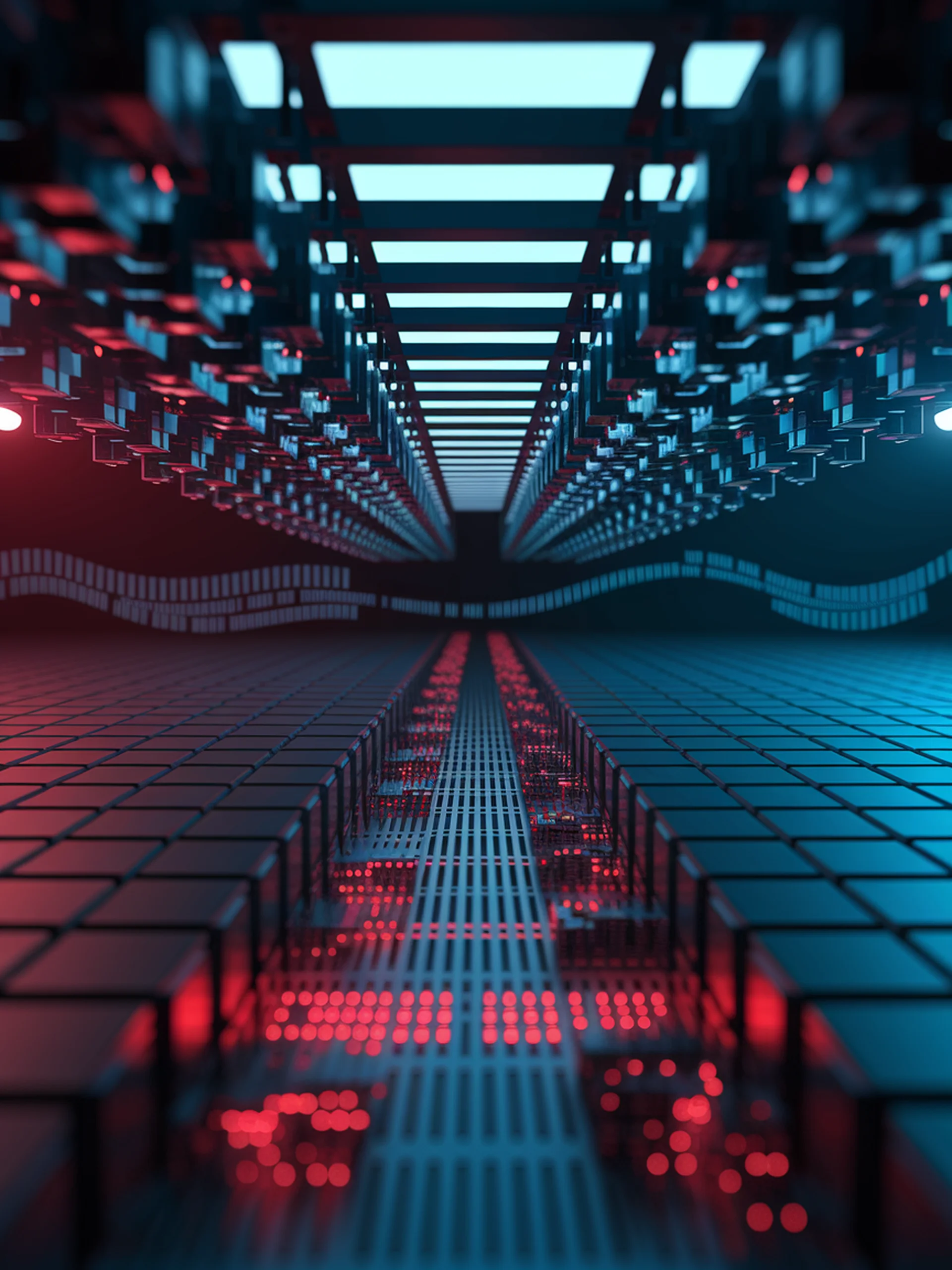

The Vulnerable Depths of AI Models

How lower layers in LLMs create security vulnerabilities

Researchers found that the lower layers of large language models (LLMs) are more sensitive to harmful content than the upper layers. Manipulating those layers makes a model more likely to generate harmful output, which raises new security concerns.

- Layers within an LLM differ in both their parameter distributions and their sensitivity to harmful content

- Lower layers are particularly vulnerable to jailbreaking attempts

- A "Freeze training" technique exploits these vulnerabilities efficiently

- The findings suggest that specific model layers need targeted protections
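
The summary names "Freeze training" without describing how it works. The sketch below assumes it means updating only the lowest transformer blocks while every other parameter stays frozen, and shows how one might identify which parameters fall into each group, for example to decide which layers to audit or protect. The `split_trainable` helper, the `num_lower_layers` cutoff, and the Llama-style `model.layers.<index>.` naming pattern are illustrative assumptions, not details taken from the research.

```python
import re

# Assumed Llama-style parameter naming ("model.layers.<index>.<...>");
# other architectures may name their blocks differently.
LAYER_PATTERN = re.compile(r"\blayers\.(\d+)\.")


def split_trainable(param_names, num_lower_layers):
    """Partition parameter names into (trainable, frozen).

    Parameters in blocks with index < num_lower_layers are trainable.
    Everything else, including parameters outside any numbered block
    (embeddings, output head), is treated as frozen.
    """
    trainable, frozen = [], []
    for name in param_names:
        match = LAYER_PATTERN.search(name)
        if match and int(match.group(1)) < num_lower_layers:
            trainable.append(name)
        else:
            frozen.append(name)
    return trainable, frozen


# Hypothetical parameter names for a tiny four-block model.
names = [
    "model.embed_tokens.weight",
    "model.layers.0.self_attn.q_proj.weight",
    "model.layers.1.mlp.up_proj.weight",
    "model.layers.2.self_attn.q_proj.weight",
    "model.layers.3.mlp.up_proj.weight",
    "lm_head.weight",
]

trainable, frozen = split_trainable(names, num_lower_layers=2)
print("trainable:", trainable)
print("frozen:", frozen)
```

In a PyTorch setting, a partition like this would typically be applied by setting `requires_grad = False` on each frozen parameter. The same partition also tells a defender which blocks deserve the closest monitoring.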

This research matters because it reveals specific architectural vulnerabilities in widely deployed AI systems, which makes it possible to target security measures at the most vulnerable layers instead of relying only on whole-model defenses.