Exposing the Vulnerabilities of Vision-Language Models

A novel framework for testing AI robustness to real-world 3D variations

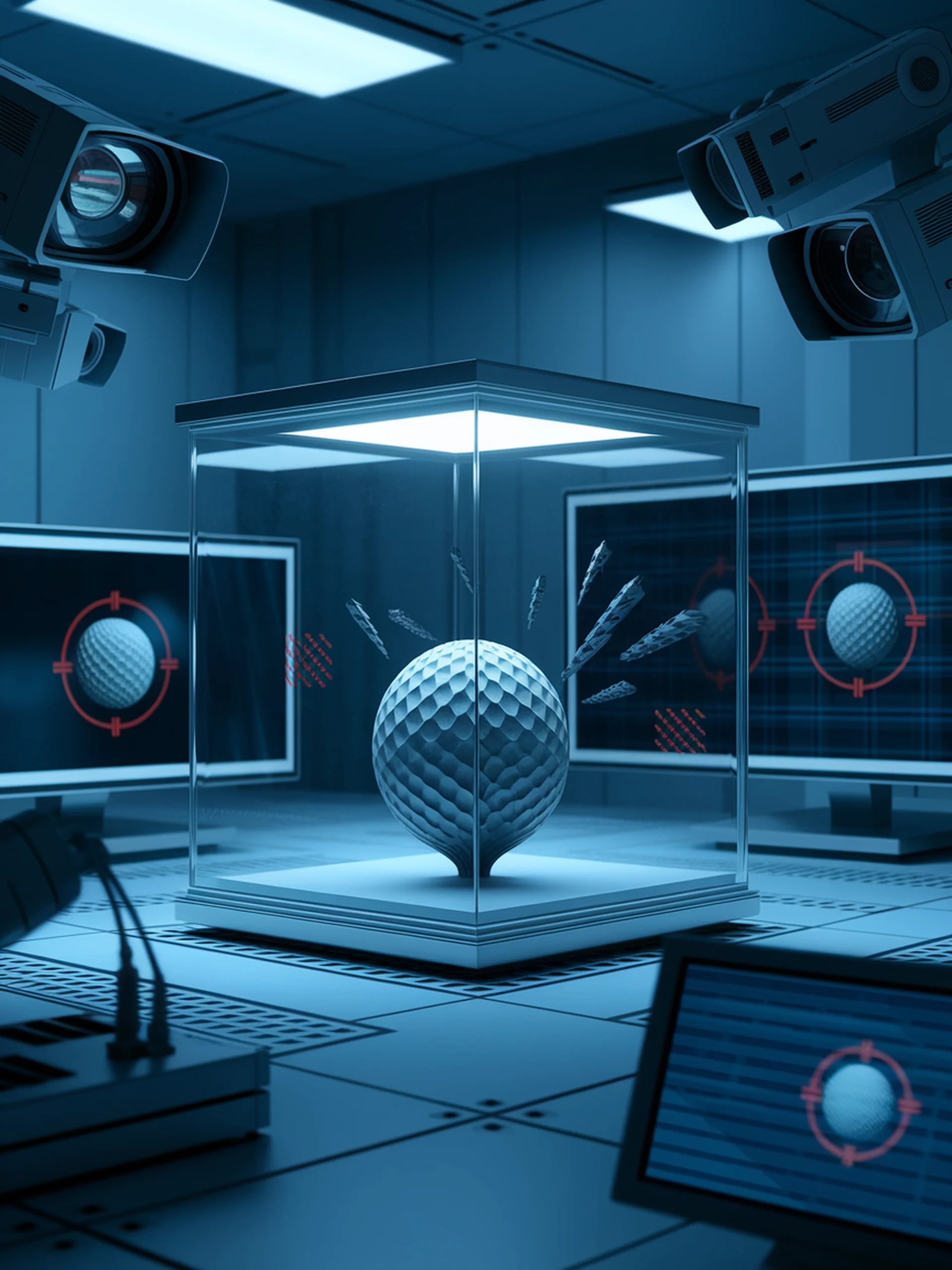

AdvDreamer introduces the first framework capable of generating physically reproducible adversarial 3D transformations to test Vision-Language Models (VLMs) in dynamic real-world scenarios.
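To make "adversarial 3D transformation" concrete, the sketch below shows the general idea in its simplest form. It searches a bounded space of 3D rotations for the pose that most lowers a model's confidence. This is not AdvDreamer's method. The summary states only that AdvDreamer works from single-view observations and produces physically reproducible transformations. Every name here (`rotation_matrix`, `toy_confidence`, `worst_case_rotation`) is hypothetical. The scoring function is a toy stand-in for a real VLM, and plain random search replaces whatever optimizer the framework actually uses.

```python
import math
import random
from typing import Callable, List, Tuple

Angles = Tuple[float, float, float]  # (yaw, pitch, roll) in radians


def rotation_matrix(yaw: float, pitch: float, roll: float) -> List[List[float]]:
    """3x3 rotation R = Rz(yaw) @ Ry(pitch) @ Rx(roll)."""
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def apply(R: List[List[float]], v: List[float]) -> List[float]:
    """Matrix-vector product R @ v."""
    return [sum(R[i][j] * v[j] for j in range(3)) for i in range(3)]


def toy_confidence(angles: Angles) -> float:
    """Hypothetical stand-in for a model's score on the correct label.

    The toy 'model' recognizes the object only while its canonical up-axis
    (+z) still points upward, so tilting the object lowers the score.
    """
    up = apply(rotation_matrix(*angles), [0.0, 0.0, 1.0])
    return max(0.0, up[2])


def worst_case_rotation(
    score_fn: Callable[[Angles], float],
    max_angle: float,
    trials: int = 2000,
    seed: int = 0,
) -> Tuple[Angles, float]:
    """Random search for the rotation (within +/- max_angle per axis) minimizing score_fn."""
    rng = random.Random(seed)
    best_angles: Angles = (0.0, 0.0, 0.0)
    best_score = score_fn(best_angles)  # start from the unperturbed pose
    for _ in range(trials):
        cand: Angles = (
            rng.uniform(-max_angle, max_angle),
            rng.uniform(-max_angle, max_angle),
            rng.uniform(-max_angle, max_angle),
        )
        s = score_fn(cand)
        if s < best_score:
            best_angles, best_score = cand, s
    return best_angles, best_score


if __name__ == "__main__":
    angles, score = worst_case_rotation(toy_confidence, max_angle=math.radians(45))
    print("worst-case angles (deg):", [round(math.degrees(a), 1) for a in angles])
    print("confidence: 1.00 ->", round(score, 3))
```

Bounding each angle (here to ±45°) is a crude proxy for "physically plausible." A real evaluation would also need to render the transformed scene and query an actual VLM, which this toy omits.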

Key Findings:

- VLMs are significantly vulnerable to 3D variations that occur naturally in the physical world

- AdvDreamer can generate challenging adversarial 3D scenarios from single-view observations

- These vulnerabilities raise serious security concerns for AI systems deployed in real-world environments

- Current VLMs require substantial improvement in robustness before safe deployment in critical applications

This research is crucial for security professionals as it exposes fundamental weaknesses in AI perception systems that could be exploited by adversaries or lead to dangerous failures in autonomous systems.

AdvDreamer Unveils: Are Vision-Language Models Truly Ready for Real-World 3D Variations?