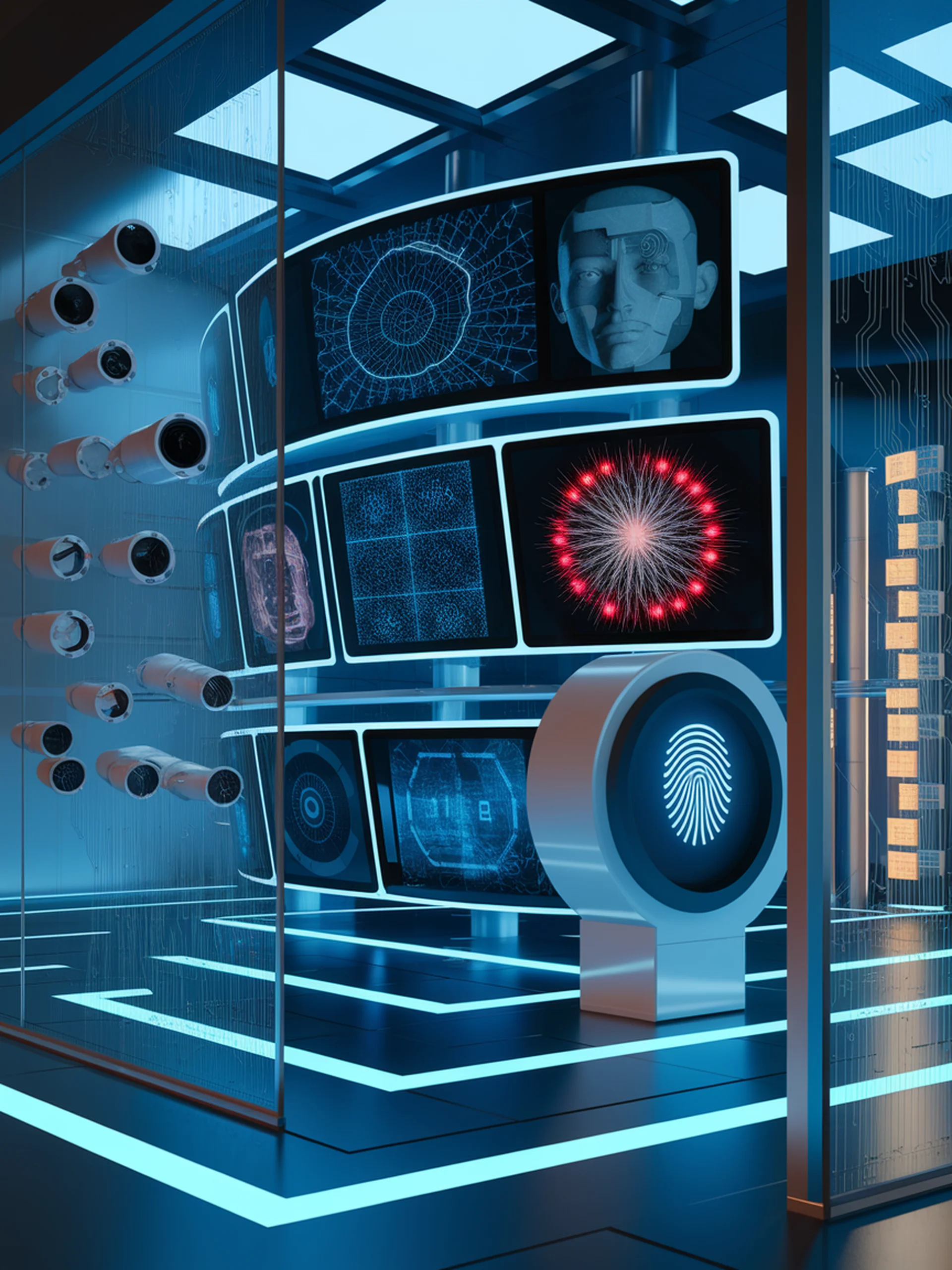

Securing the Vision of AI

A Framework for LVLM Safety in the Age of Multimodal Models

This comprehensive survey establishes a unified framework for understanding and addressing safety challenges in Large Vision-Language Models (LVLMs).

- Attack Vectors: Analyzes various attack methodologies targeting LVLMs

- Defense Strategies: Examines current and emerging approaches to mitigate security vulnerabilities

- Evaluation Methods: Presents standards for assessing LVLM safety and robustness

- Lifecycle Perspective: Examines safety across the entire LVLM development process

As multimodal AI systems are increasingly deployed in critical applications, this research offers guidance to security professionals and AI developers who aim to implement these models responsibly and minimize potential harms.

A Survey of Safety on Large Vision-Language Models: Attacks, Defenses and Evaluations