Quantization's Hidden Costs to LLM Safety

How model compression impacts security and reliability

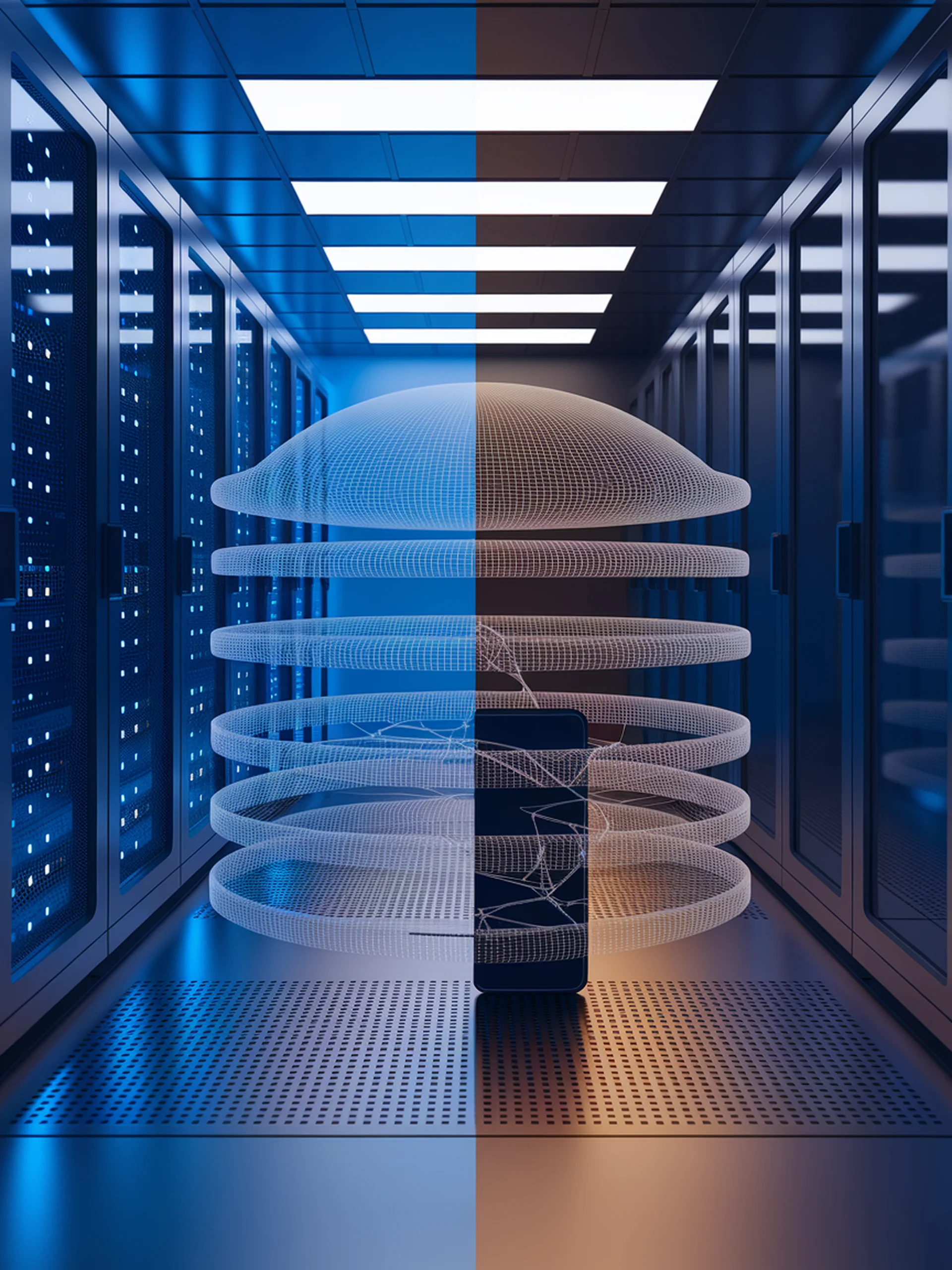

This research evaluates the safety implications of LLM quantization techniques that enable deployment on resource-constrained devices.

- The choice of quantization method significantly affects model safety

- The newly created OpenSafetyMini dataset benchmarks safety across quantized models

- 2-bit quantization drastically increases harmful outputs (up to 66% in some cases)

- 4-bit models retain reasonable safety while still reducing resource requirements
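The summary does not say which quantization methods were compared. The sketch below therefore uses plain symmetric round-to-nearest quantization with a single per-tensor scale as an illustrative stand-in, not the paper's method. It shows why 2-bit is so much harsher than 4-bit. With a grid of integers from -2^(b-1) to 2^(b-1) - 1, a 2-bit grid has only four levels and a 4-bit grid has sixteen, so reconstruction error grows sharply as bits drop. The `weight_memory_gb` helper and the 7B-parameter model size are hypothetical back-of-the-envelope assumptions. They count weight storage only and ignore activations, KV cache, and scale overhead.

```python
import numpy as np


def quantize_dequantize(weights: np.ndarray, bits: int) -> np.ndarray:
    """Symmetric round-to-nearest quantization with one per-tensor scale.

    Illustrative only: real LLM quantizers typically use per-group scales,
    calibration data, or error correction, which this sketch omits.
    """
    qmax = 2 ** (bits - 1) - 1  # 7 for 4-bit, 1 for 2-bit
    scale = np.max(np.abs(weights)) / qmax
    if scale == 0:
        return weights.copy()  # all-zero tensor: nothing to quantize
    # Map to integers on the b-bit grid, then map back to floats.
    q = np.clip(np.round(weights / scale), -qmax - 1, qmax)
    return q * scale


def weight_memory_gb(n_params: float, bits: int) -> float:
    """Hypothetical helper: weight storage in GB, ignoring all overheads."""
    return n_params * bits / 8 / 1e9


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Synthetic Gaussian "weights"; real layer statistics will differ.
    w = rng.normal(0.0, 0.02, size=100_000)
    for bits in (8, 4, 2):
        mse = np.mean((w - quantize_dequantize(w, bits)) ** 2)
        print(f"{bits}-bit: MSE={mse:.2e}, "
              f"7B weights ~ {weight_memory_gb(7e9, bits):.2f} GB")
```

In this toy setting, going from 4-bit to 2-bit halves weight memory but rounds most weights to zero. That is one plausible intuition for why the most aggressive compression erodes learned behaviors such as safety guardrails.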

For security professionals, this research offers practical guidance on quantization trade-offs. It shows that aggressive compression can compromise safety guardrails, and it identifies more balanced settings that remain suitable for secure deployment.