Security Vulnerabilities in LLM Caching

How timing variations can reveal sensitive user data

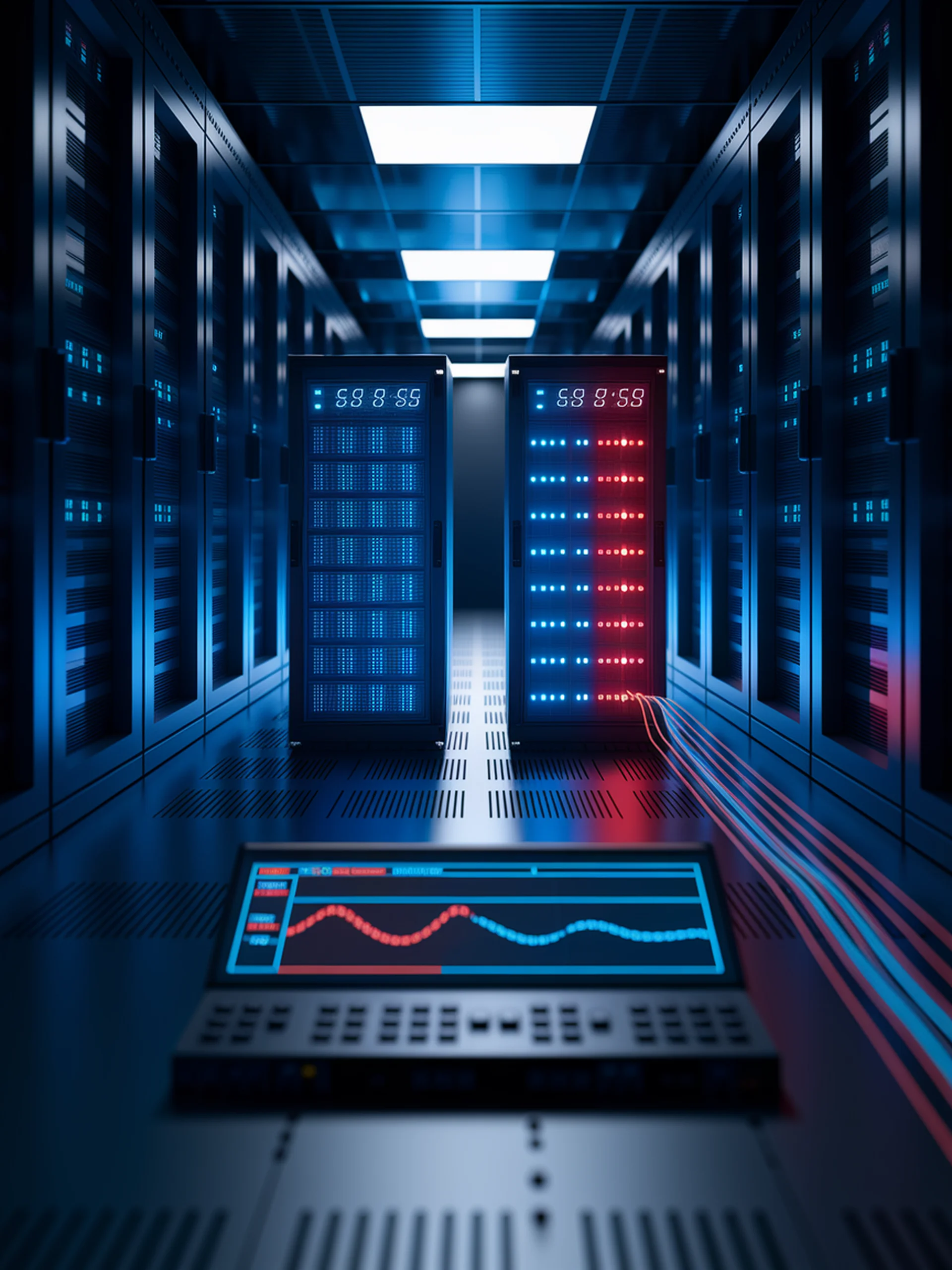

This research shows that prompt caching in LLM APIs creates a timing side channel: differences in response time can leak information about other users' prompts.

- Cached prompts process faster than non-cached ones, creating observable timing patterns

- When a cache is shared across users, an attacker can send a candidate prompt and use its response time to infer whether another user recently sent the same or a similar prompt

- Most commercial API providers (including OpenAI, Anthropic, Google) employ some form of caching

- The researchers developed effective methods to detect caching behaviors across providers
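To illustrate the idea behind the points above, here is a minimal sketch of how a timing difference could be tested for statistical significance. It is not the researchers' procedure. The latencies are simulated rather than measured against any real API, and the function names, sample sizes, latency values, and the 0.01 threshold are all assumptions chosen for illustration.

```python
import random


def mean(values):
    """Arithmetic mean of a non-empty sequence of numbers."""
    return sum(values) / len(values)


def permutation_p_value(candidate, baseline, n_permutations=2000, seed=0):
    """One-sided permutation test.

    Estimates how likely it is to see a gap at least as large as
    mean(baseline) - mean(candidate) if both samples actually came from
    the same latency distribution (i.e. no cache hit).
    """
    rng = random.Random(seed)
    observed = mean(baseline) - mean(candidate)
    pooled = list(candidate) + list(baseline)
    n = len(candidate)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = mean(pooled[n:]) - mean(pooled[:n])
        if diff >= observed:
            at_least_as_extreme += 1
    # Add-one correction keeps the estimate strictly above zero.
    return (at_least_as_extreme + 1) / (n_permutations + 1)


def simulate_latencies(n, mean_s, jitter_s, rng):
    """Hypothetical stand-in for timing n real requests (values in seconds)."""
    return [max(0.0, rng.gauss(mean_s, jitter_s)) for _ in range(n)]


rng = random.Random(42)
# Baseline: fresh random prompts that cannot already be in any cache.
baseline = simulate_latencies(30, mean_s=0.50, jitter_s=0.05, rng=rng)
# Candidate: a prompt the attacker suspects another user has already sent.
candidate = simulate_latencies(30, mean_s=0.35, jitter_s=0.05, rng=rng)

p_value = permutation_p_value(candidate, baseline)
likely_cached = p_value < 0.01  # assumed significance threshold
print(f"p-value: {p_value:.4f}, likely cached: {likely_cached}")
```

In a real measurement, `simulate_latencies` would be replaced by timed requests (for example with `time.perf_counter`), and network jitter would usually call for more samples than the 30 assumed here.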

These findings have serious privacy implications for businesses using shared LLM services: unless caches are properly managed and isolated between users, sensitive prompts could be exposed.