Jupiter: Fast LLM Collaboration at the Edge

Enabling efficient LLM inference across resource-constrained devices

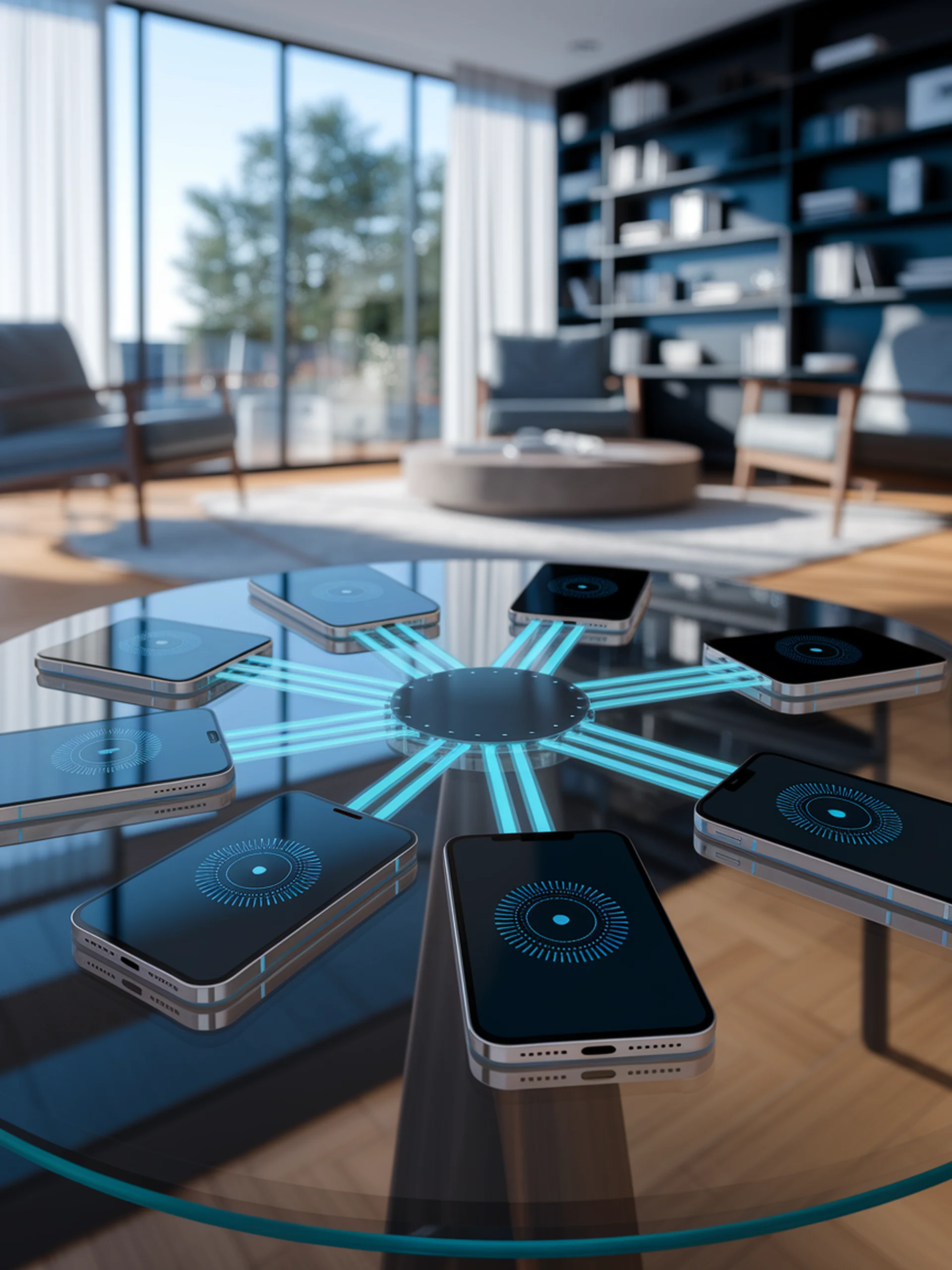

Jupiter introduces a novel collaborative inference system that distributes large language model processing across multiple edge devices, enhancing performance while preserving privacy.

- Resource optimization by splitting the inference workload across multiple connected edge devices

- Privacy preservation by keeping sensitive data on local devices rather than sending it to cloud datacenters

- Reduced latency and lower per-device memory requirements compared with single-device inference

- Practical deployment on everyday edge devices without specialized hardware requirements
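To make "splitting the workload" concrete, the sketch below shows one common way a model can be divided across devices: each device is assigned a contiguous block of transformer layers as a pipeline stage. This is an illustrative assumption, not Jupiter's actual planner. The `Device` fields, the `partition_layers` function, and all speed and memory figures are hypothetical. The greedy rule gives each layer to the device whose stage time (layers divided by relative speed) would stay lowest, so faster devices take more layers, and no device is given more layers than its memory can hold.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    speed: float      # hypothetical relative throughput (layers per unit time)
    memory_gb: float  # hypothetical memory available for model weights


def partition_layers(num_layers, layer_gb, devices):
    """Assign contiguous layer ranges to devices as pipeline stages.

    Greedy: each layer goes to the device with spare memory whose stage
    time, (layers + 1) / speed, would be smallest after taking it.
    """
    caps = [int(d.memory_gb // layer_gb) for d in devices]  # max layers per device
    if sum(caps) < num_layers:
        raise ValueError("devices cannot hold the model together")
    counts = [0] * len(devices)
    for _ in range(num_layers):
        candidates = [i for i in range(len(devices)) if counts[i] < caps[i]]
        best = min(candidates, key=lambda i: (counts[i] + 1) / devices[i].speed)
        counts[best] += 1
    # Turn per-device layer counts into contiguous [start, end) ranges.
    stages, start = [], 0
    for d, c in zip(devices, counts):
        stages.append((d.name, start, start + c))
        start += c
    return stages


devices = [Device("A", 2.0, 10.0), Device("B", 1.0, 10.0), Device("C", 1.0, 4.0)]
print(partition_layers(32, 0.5, devices))
# [('A', 0, 16), ('B', 16, 24), ('C', 24, 32)]
```

With these made-up numbers, device A (twice as fast) runs 16 layers while B and C run 8 each, so every stage takes about the same time and no single device has to store the whole model. A real planner would also account for activation transfer costs between devices, which this sketch ignores.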

This matters because it makes capable generative models usable on everyday edge hardware while keeping data on-device, addressing privacy concerns as more computation moves to the edge.

Jupiter: Fast and Resource-Efficient Collaborative Inference of Generative LLMs on Edge Devices