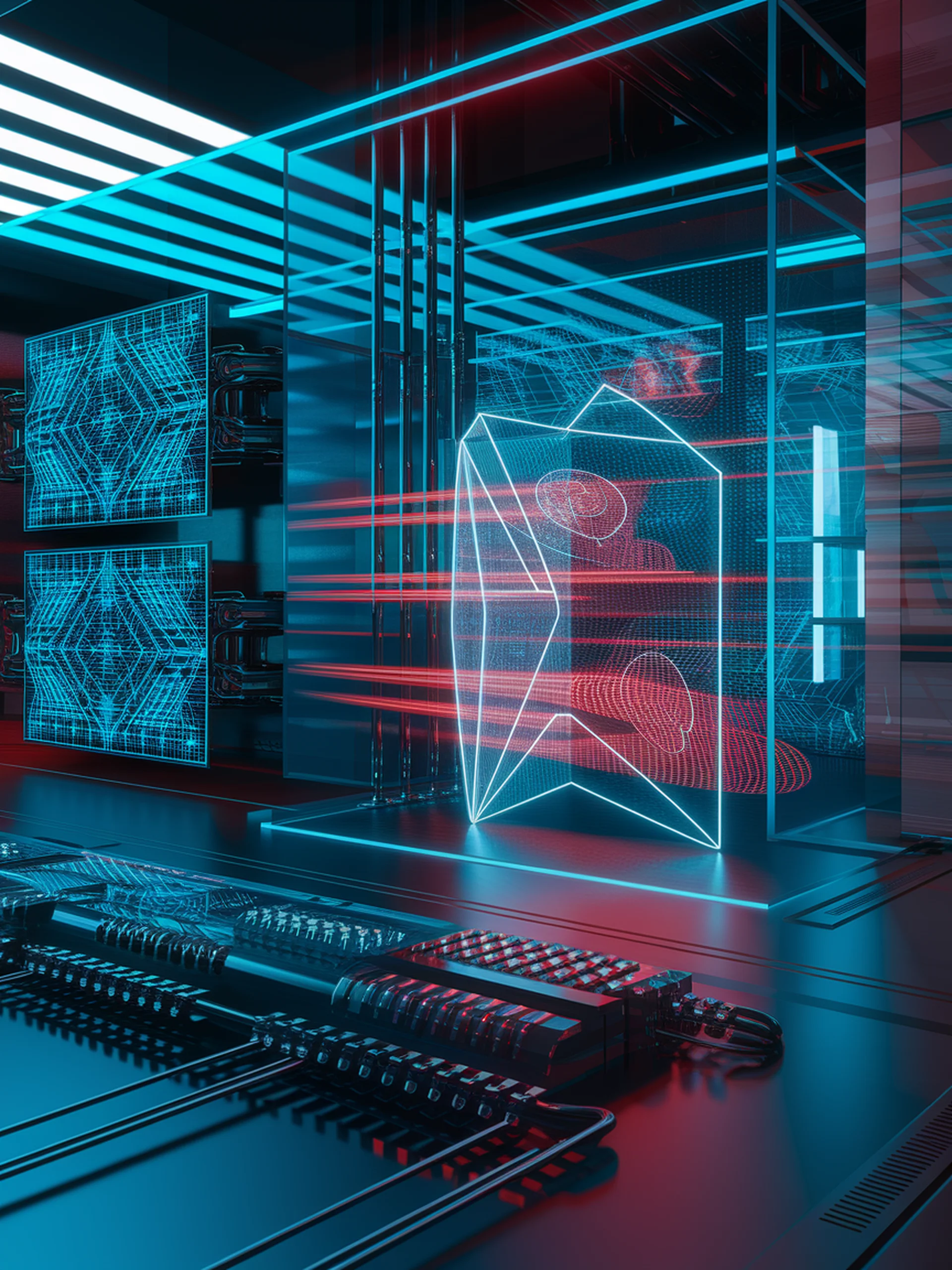

Defending LLMs Against Jailbreak Attacks

A Novel Safety-Aware Representation Intervention Approach

SafeInt is a new defense mechanism that dynamically shields large language models (LLMs) from jailbreak attacks while preserving their performance and efficiency.

- Combines representation-level intervention with safety awareness to detect and neutralize attacks in real time

- Achieves stronger defense than existing methods against both standard and adaptive jailbreak attacks

- Maintains low computational overhead compared to existing defense methods

- Demonstrates effectiveness across different model architectures and attack types
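The idea of "representation-level intervention with safety awareness" can be illustrated with a toy sketch. This is not SafeInt's actual procedure. It is a generic stand-in that assumes two hypothetical components: a linear probe (`w`, `b`) that scores a hidden state for jailbreak-like content, and a "safe direction" vector (`safe_dir`) along which flagged hidden states are shifted, in the style of activation steering. All names, values, and the threshold are illustrative assumptions.

```python
import math
from typing import List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def safety_score(h: Vector, w: Vector, b: float) -> float:
    """Hypothetical linear probe: sigmoid(w . h + b), read as the estimated
    probability that hidden state h comes from a jailbreak-style prompt."""
    return 1.0 / (1.0 + math.exp(-(dot(h, w) + b)))


def intervene(
    h: Vector,
    w: Vector,
    b: float,
    safe_dir: Vector,
    alpha: float = 1.0,
    threshold: float = 0.5,
) -> Tuple[Vector, bool]:
    """Shift h by alpha along the unit-normalized safe_dir, but only when the
    probe flags it. Returns (new_hidden_state, flagged)."""
    if safety_score(h, w, b) <= threshold:
        # Benign input: leave the representation untouched.
        return list(h), False
    norm = math.sqrt(dot(safe_dir, safe_dir))
    if norm == 0.0:
        raise ValueError("safe_dir must be a non-zero vector")
    unit = [d / norm for d in safe_dir]
    # Flagged input: steer the hidden state toward the (assumed) safe region.
    return [x + alpha * u for x, u in zip(h, unit)], True


if __name__ == "__main__":
    w, b, safe_dir = [1.0, 0.0], -1.0, [0.0, 2.0]  # toy probe and direction
    print(intervene([0.5, 0.0], w, b, safe_dir, alpha=1.5))  # ([0.5, 0.0], False)
    print(intervene([3.0, 0.0], w, b, safe_dir, alpha=1.5))  # ([3.0, 1.5], True)
```

Gating the intervention on the probe means benign inputs pass through with only the cost of one dot product. That is one plausible way a representation-level defense can keep overhead low, though the paper's own mechanism may differ.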

This research advances LLM security by addressing jailbreak attacks, a critical vulnerability that poses significant risks as language models are increasingly deployed in real-world applications. SafeInt offers organizations a practical way to enhance AI safety without sacrificing performance.