Computer Vision and 3D Perception

Applications of LLMs in computer vision tasks, 3D perception, scene understanding, and visual data processing for engineering applications

Research on Large Language Models in Computer Vision and 3D Perception

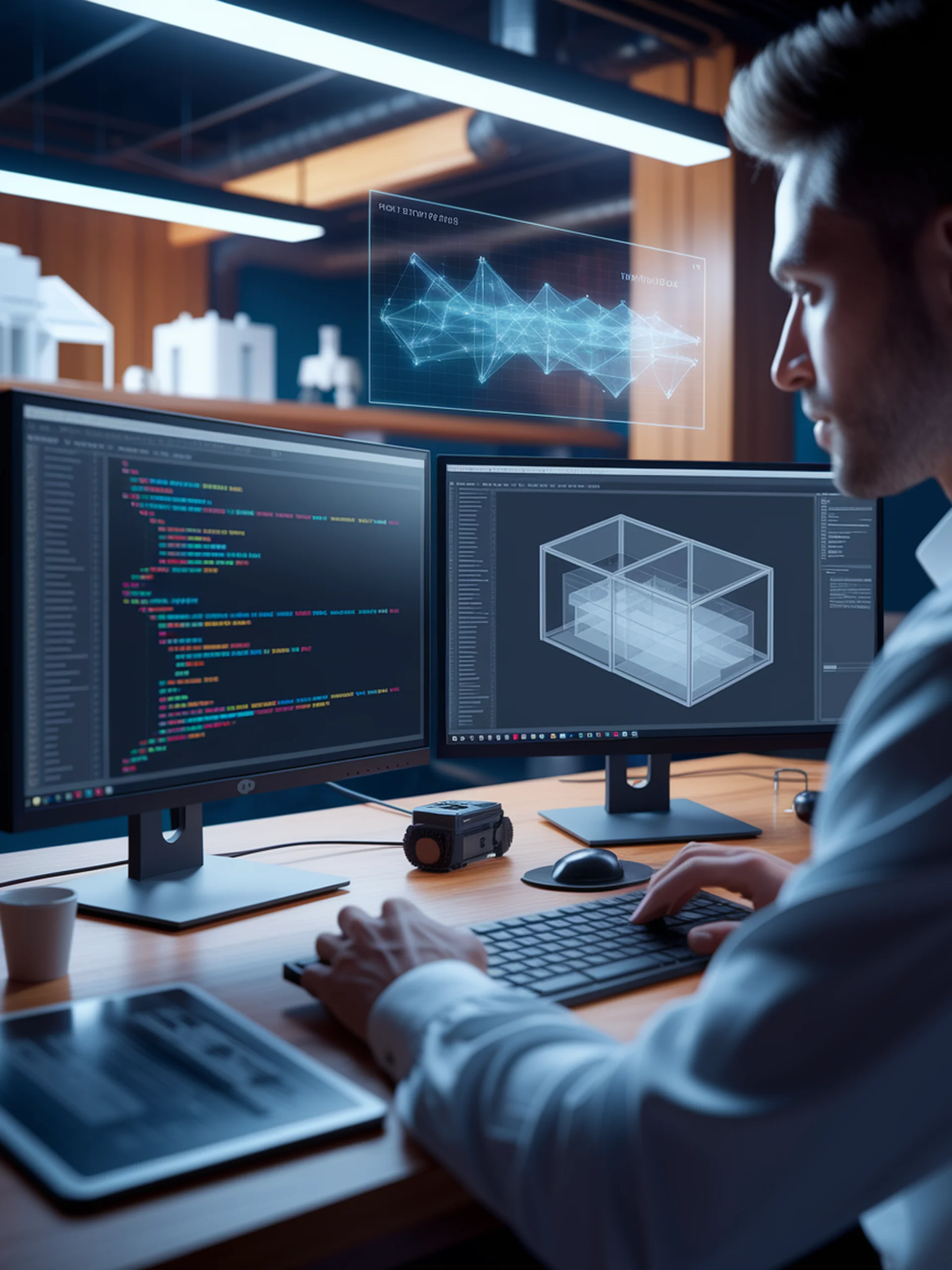

Enhancing 3D Perception with MLLMs

Unlocking spatial understanding from 2D images using advanced language models

Vocabulary-Free 3D Scene Understanding

Revolutionizing 3D Instance Segmentation with Open-Ended Reasoning

Eagle: Advancing Visual Understanding in AI

Optimizing multimodal LLMs through mixture of vision encoders

Hier-SLAM: Scaling Up 3D Semantic Mapping

Hierarchical categorical Gaussian splatting for efficient semantic SLAM

Combating Hallucinations in Video AI

A new benchmark for detecting false events in Video LLMs

AI-Powered CAD: Vision to 3D Design

Using Vision-Language Models to Generate CAD Code from Visual Inputs

Transforming Segmentation Through Language

Converting complex image segmentation into simple text generation

GraphCLIP: The Foundation Model for Text-Attributed Graphs

Enhancing cross-domain transferability in graph analysis without extensive labeling

Scaling 3D Scene Understanding

A breakthrough dataset for indoor 3D vision models

Accelerating Vision-Language Models

Reducing Visual Redundancy for Faster LVLMs

Accelerating Vision AI with LoRA Optimization

Reducing computational costs while enhancing performance of multimodal models

Multimodal CAD Generation Revolution

Unifying text, image, and point cloud inputs for CAD model creation

FlexCAD: Revolutionizing Design Generation

Unified control across all CAD construction hierarchies using fine-tuned LLMs

Intelligent Physics Simulation for 3D Environments

Using Multi-Modal LLMs to Guide Gaussian Splatting in Physics Simulations

Smart Physics for Virtual Objects

Automating Material Properties in 4D Content Generation

PerLA: The Next Leap in 3D Understanding

A perceptive AI system that captures both fine details and global context in 3D environments

Next-Level 3D Interaction

Teaching AI to understand sequential actions in 3D environments

ARCON: Next-Generation Video Prediction

Auto-Regressive Continuation for Enhanced Driving Video Generation

Olympus: The Universal Computer Vision Router

Transforming MLLMs into a unified framework for diverse visual tasks

Revolutionizing Physics Simulations

A More Stable Material Point Method for Engineering Applications

LLaVA-UHD v2: Advancing Visual Intelligence in AI

Enhancing fine-grained visual perception through hierarchical processing

ECBench: Testing AI's Understanding of Human Perspective

A new benchmark for evaluating how well AI models comprehend egocentric visual data

Accelerating Vision-Language Models

A Global Compression Strategy for High-Resolution VLMs

FALCON: Revolutionizing Visual Processing in MLLMs

Solving High-Resolution Image Challenges with Visual Registers

Vision Meets Language: Next-Gen Semantic Segmentation

How Large Language Models Enhance Visual Scene Understanding

The Future of 3D Point Cloud Models

Advancing foundation models for complex 3D environments

Visual Intelligence in Text-to-CAD Generation

Enhancing LLMs with visual feedback for precise CAD modeling

Scaling Visual Attention Across GPUs

Efficient cross-attention for processing large visual inputs in multimodal AI

Open-Vocabulary Articulated 3D Objects

Converting static meshes to articulated objects with natural language guidance

Combating AI Visual Hallucinations

Steering Visual Information to Reduce False Claims in LVLMs

AI-Powered CAD Editing Revolution

First framework for automated text-based CAD modification

Building Digital Twins: The Future of Urban Planning

Integrating 3D Modeling, GIS, and AI for Virtual Building Replicas

Revolutionizing Imaging with Hyperspectral AI

Transforming invisible data into actionable insights across industries

Event Cameras for Text Recognition

Pioneering bio-inspired solutions for challenging visual environments

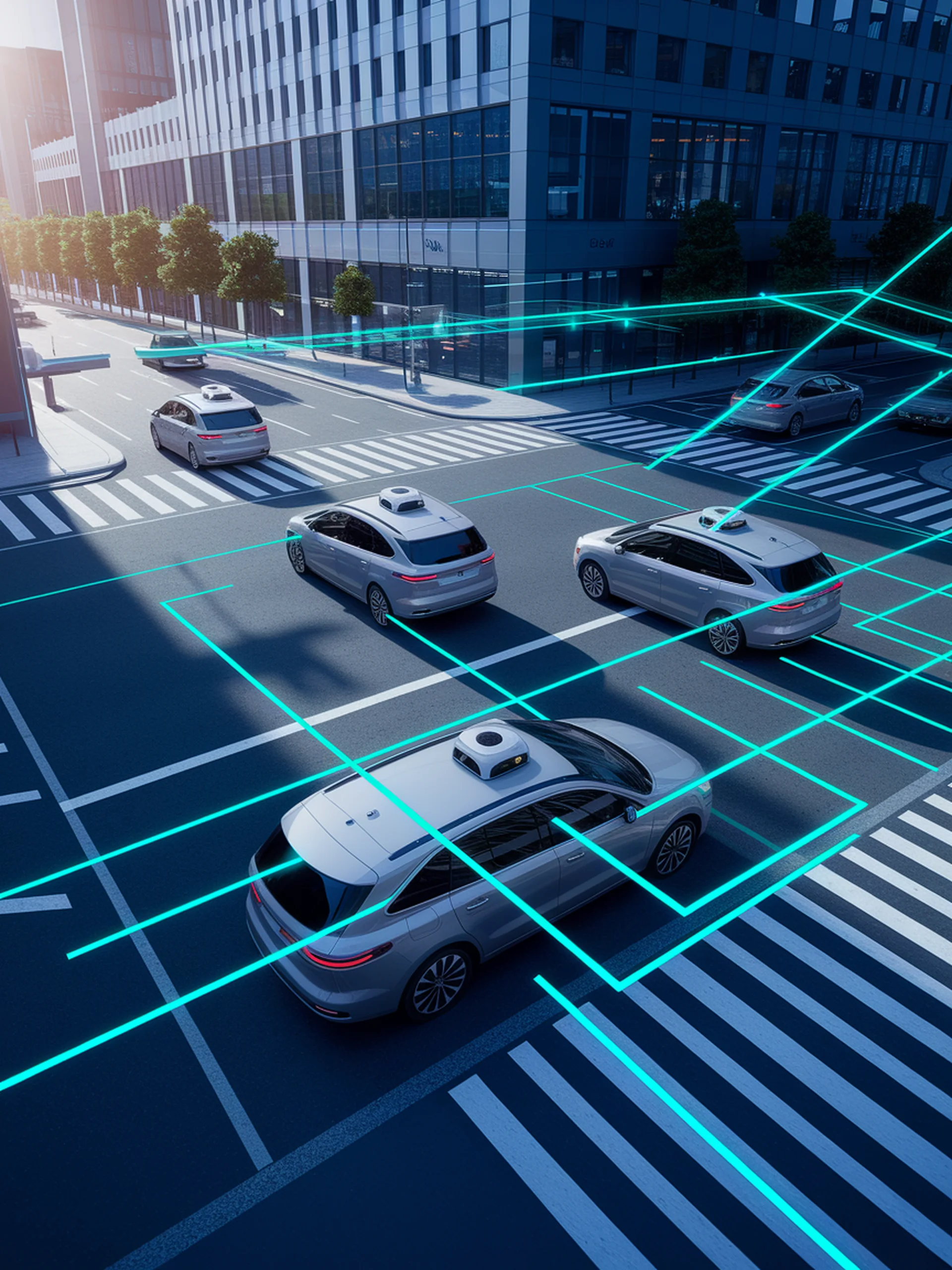

AI-Powered Traffic Monitoring

Integrating Multimodal LLMs with Instance Segmentation for Smarter Cities

Hierarchical 3D Semantic Mapping

Advanced SLAM with Hierarchical Categorical Gaussian Splatting

Teaching Robots to Understand 3D Worlds

Using LLMs to Detect Object Affordances in Open Environments

Master-Apprentice VLM Inference

Reducing costs while maintaining high-quality vision-language responses

Combating AI Hallucinations with Octopus

A dynamic approach to reduce fabricated responses in vision-language models

Accelerating Multimodal AI: DivPrune

Cutting Visual Token Count While Preserving Performance

3D Scene Intelligence

Enhancing LLM Geometric Reasoning for Object Placement

SmartWay: Revolutionizing AI Navigation

Enhanced Waypoint Prediction with Intelligent Backtracking

HybridAgent: Intelligent Image Restoration

Unifying Multiple Restoration Models for Superior Results

PiSA: Revolutionizing 3D Understanding in AI

Self-Augmenting Data to Bridge the 3D-Language Gap

Bridging 2D and 3D Vision-Language Understanding

Overcoming 3D data scarcity with a unified architecture

ChatStitch: Visualizing the Blind Spots

Advancing Collaborative Vehicle Perception with LLM Agents

Smart 3D Spatial Understanding for Robots

Leveraging LLMs to Create Hierarchical Scene Graphs for Indoor Navigation

Open Vocabulary 3D Scene Understanding

Advancing 3D segmentation with CLIP and superpoints

Matrix: Voice-Driven 3D Objects in AR

Transforming spoken words into interactive 3D environments

Revolutionizing Road Infrastructure Maintenance

AI-Powered Crack Detection for Urban Digital Twins

3D Open Vocabulary Scene Understanding

Advancing Security with Instance-Level 3D Scene Analysis

Bridging 2D to 3D: MLLMs for Spatial Reasoning

Transferring 2D image understanding to 3D scene segmentation

Revolutionizing CAD Drawing Analysis

Progressive Hierarchical Tuning for Efficient Parametric Primitive Analysis

Evaluating AI Vision for Self-Driving Cars

Fine-grained assessment framework for autonomous driving AI systems

3D Vision Meets Natural Language

Advancing Autonomous Vehicles' Understanding of Human Instructions

Smart Robots for Accessible Environments

Enhancing Assistive Technologies with Open-Vocabulary Semantic Understanding

3D Visual Reasoning Breakthrough

Leveraging LLMs for Enhanced Object Detection in Complex Environments

LLM-Guided Architecture Evolution

Autonomous AI-powered optimization for object detection models

Advancing Robot Vision with AI Fusion

Leveraging Multimodal Fusion and Vision-Language Models for Smarter Robots

Smarter, Smaller Vision Transformers

Strategic Pruning for Domain Generalization and Resource Efficiency

Explaining the Uncertainty Gap in AI Vision

Revealing why image classifiers lack confidence through counterfactuals

Q-Agent: Quality-Driven Image Restoration

A robust multimodal LLM approach for handling complex image degradations

Accelerating Large Vision Language Models

Reducing computational overhead through strategic token pruning