Hardware Acceleration and Computing Architecture

Specialized hardware architectures and computing-in-memory technologies for accelerating LLM inference and training

Research on Large Language Models in Hardware Acceleration and Computing Architecture

Intelligent CIM Compilation: The Best of Both Worlds

Optimizing dual-mode capabilities in Computing-in-Memory accelerators

PacQ: Accelerating LLM Inference

A specialized microarchitecture for efficient mixed-precision computation

Revolutionizing AI Hardware Efficiency

Integrating Compute-in-Memory (CIM) in TPUs for Faster, Greener AI

Breaking Speed Barriers: Photonic AI Acceleration

262 TOPS from Silicon Nitride Microcomb Laser Technology

Breaking AI Silos: The Future of LLM Applications

Towards open ecosystems and hardware-optimized AI platforms

MatrixFlow: Accelerating Transformer Performance

A system-accelerator co-design approach for faster AI models

TPU-Gen: AI-Powered Hardware Design

Using LLMs to Automate Custom Tensor Processing Unit Creation

TokenSim: Accelerating LLM Inference Systems

A comprehensive framework for hardware-software co-optimization

Evaluating LLMs for Hardware Design

A new benchmark for resource-efficient FPGA designs

Automating Hardware Verification with LLMs

Leveraging AI to streamline SystemVerilog assertion development

Accelerating the Future of LLMs

A comprehensive analysis of proprietary LLM accelerator technologies

Accelerating MoE Models with Structured Sparsity

Leveraging Sparse Tensor Cores for faster, more efficient LLMs

AI-Powered Hardware Design

Leveraging Reasoning LLMs for High-Level Synthesis Optimization

ROMA: Hardware Acceleration for On-Device LLMs

A ROM-based accelerator enabling efficient edge deployment of large language models

AI-Powered Circuit Design Revolution

Automating Analog Computing Architecture Design with Large Language Models

Data Contamination in AI Hardware Design

Evaluating the reliability of LLM-generated Verilog code

Accelerating Verilog Code Generation with LLMs

Using speculative decoding to speed up hardware description language generation

Automating Hardware Design with LLMs

Open benchmarks for AI-powered RTL code generation

VeriMind: AI-Powered Verilog Generation

Autonomous LLM framework streamlines hardware design

Eliminating Hallucinations in Hardware Design Code

A Training-Free Framework for Reliable HDL Generation with LLMs

Accelerating Transformer Models with FPGA

Optimized Hardware Solution for LLM Bottlenecks

Accelerating LLMs on RISC-V Platforms

Optimizing AI reasoning on open-hardware alternatives to GPUs

Neuromorphic Computing for Efficient LLMs

3x Energy Efficiency Gain Through Hardware-Aware Design

Optimizing Memory for LLM Performance

A novel compression-aware memory controller design

UB-Mesh: Reimagining Datacenter Networks for AI

A hierarchical network design optimized for large language model training

Ultra-Fast Hardware Metrics Prediction

Using LLMs to revolutionize chip design process efficiency

Unlocking LLM Speed with Existing Hardware

Accelerating language models using unmodified DRAM

Engineering Reliable LLM Accelerators

Statistical fault tolerance without compromising performance

Marco: AI-Powered Hardware Design Revolution

A configurable framework for solving complex chip design challenges with multi-agent LLMs

Accelerating LLMs with Hybrid Processing

80x faster, 70% more energy-efficient 1-bit LLMs

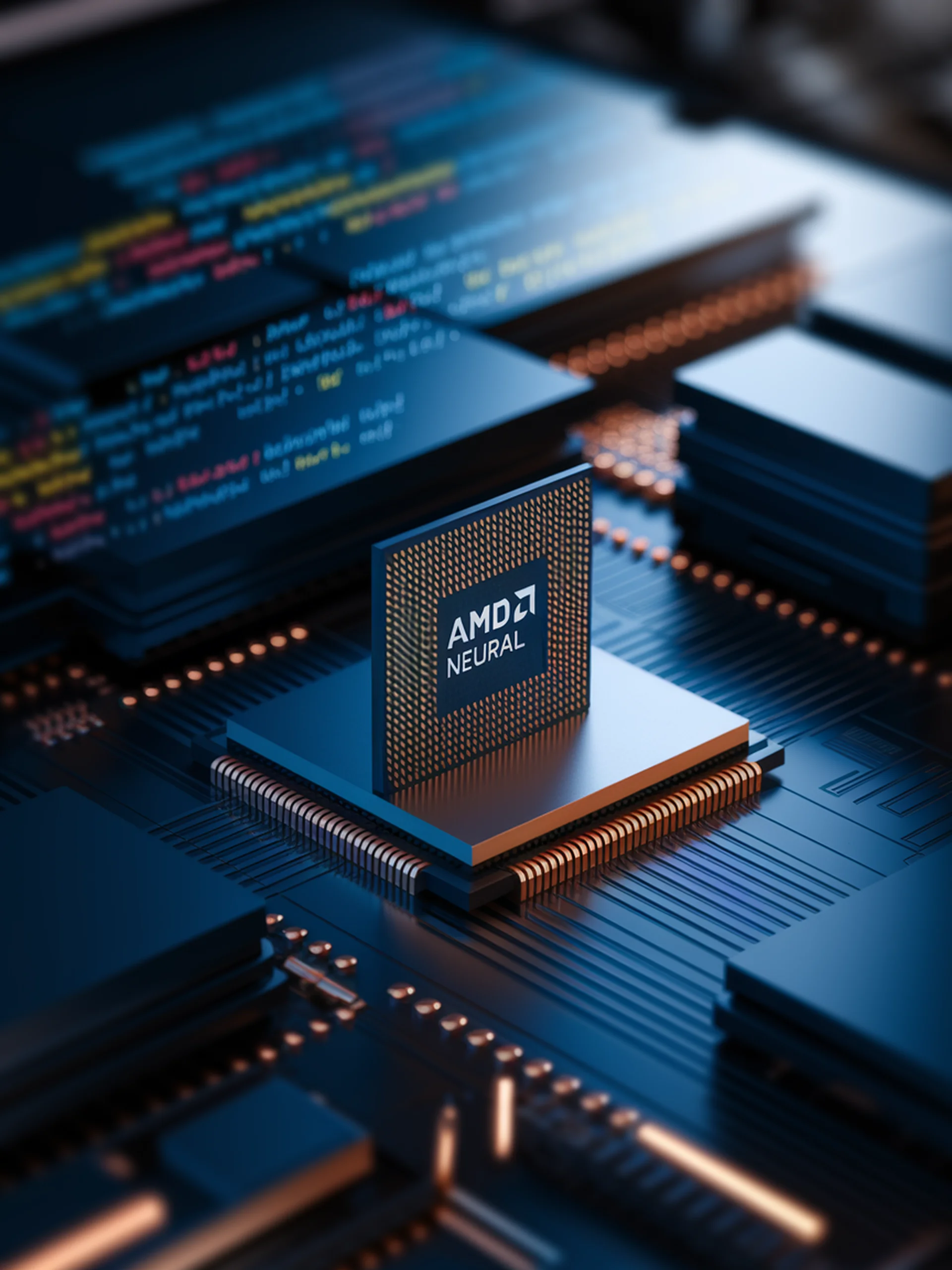

Unlocking AMD's Neural Processing Unit

Enabling on-device AI training with bare-metal programming

Circuit Foundation Models: AI Revolution in Chip Design

Two-stage learning approach transforms VLSI circuit design and EDA

Bridging the Gap: LLMs Meet Graph Data in EDA

Enhancing Electronic Design Automation with Graph-Aware Language Models

Revolutionizing LLM Efficiency for Long Contexts

A Unified Hardware Architecture with Smart KV Cache Management

CAMP: Revolutionizing Matrix Multiplication

A new architecture for accelerating ML on vector processors

Automating Hardware Design with AI

Using LLMs to enhance hardware accelerator development

AI-Powered Hardware Security Revolution

Using LLMs to Automate Security-First Hardware Design

Advancing RTL Code Generation with LLMs

Repository-level code completion for hardware design automation

NetTAG: Advancing Circuit Design with AI

Multimodal Foundation Model for Electronic Design Automation

Unified Circuit Intelligence

Bridging the gap between circuit analysis and generation with a multimodal foundation model

Accelerating Embedding Operations

Optimizing AI Workloads with Decoupled Access-Execute Architecture

Smarter RTL Code Optimization

Combining LLMs with Symbolic Reasoning for Superior Circuit Design

Optimizing LLM Inference: CPU-GPU Architecture Analysis

Performance insights across PCIe A100/H100 and GH200 systems