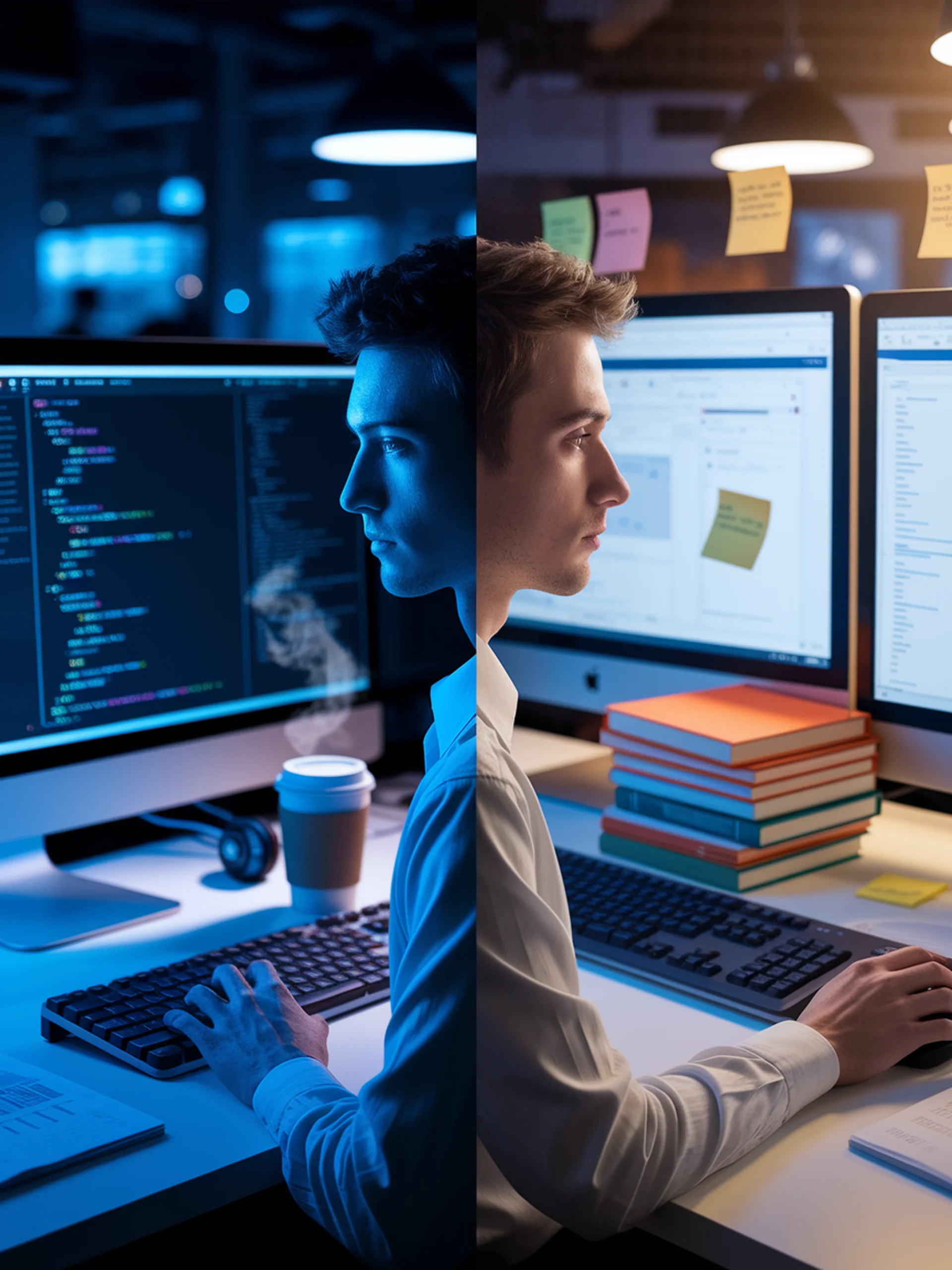

Software Engineering and Development

Applications of LLMs in improving software development processes, including code generation, debugging, testing, and specification development

Research on Large Language Models in Software Engineering and Development

AI-Powered Bug Explanation

Leveraging Code Structures to Help Developers Understand Software Bugs

Domain Expertise Trumps Model Size

Why medium-sized specialized AI models outperform larger generalists for software tasks

Leveraging LLMs for Software Specifications

Evaluating GPT-4's ability to generate formal software specifications from natural language

AI vs. Humans: Developer Coding Support Preferences

How developers engage with ChatGPT compared to Stack Overflow

Prompt Engineering vs. Fine-Tuning for Code

Comparing LLM approaches for code-related tasks

3D Vision-Language Model for Robotics

Advancing Robotic Vision Beyond Closed-Set Limitations

Domain-Specific Code Generation with LLMs

Evaluating LLM effectiveness beyond general programming tasks

Smarter Automated Testing with AI

Using LLMs to extract test inputs from bug reports

Modular Thinking for Advanced Coding

Breaking down complex programming problems with modular AI approaches

AI-Powered Specification Generation

Automating Formal Program Specifications with LLMs

Do Code Models Truly Understand Code?

Testing LLMs' comprehension of programming concepts using counterfactual analysis

API Pack: Supercharging Code Generation

Teaching LLMs to master API calls across programming languages

AI-Powered Test Case Generation

Enhancing LLMs for Test-Driven Development Automation

ChatDBG: Revolutionizing Software Debugging

How LLMs Transform Bug-Hunting Into Natural Conversation

AgentFL: Scaling Bug Detection to Project Level

LLM-powered fault localization for complex codebases

Bridging Design and Code with AI

WebCode2M: A breakthrough dataset revolutionizing automated webpage development

LLM-Powered Mutation Testing

Revolutionizing Test Quality Assessment with AI

Real-World Code Translation to Rust

First major study evaluating LLM performance on practical language migration

Smart Cascading for Code Generation

A cost-efficient framework that balances accuracy and computational resources

Boosting Automated Verification with LLMs

How AI can streamline software proof engineering

Teaching AI to Ask Better Questions

Making LLMs Better Coders Through Improved Communication

Fixing Python Library Compatibility Issues Automatically

Introducing PCART: An automated tool for resolving API parameter conflicts

Benchmarking LLMs for Test Case Generation

A systematic evaluation framework for LLM-powered software testing

Benchmarking LLM Code Efficiency

A new standard for evaluating AI-generated code quality

Evolution-Aware Code Generation Evaluation

Measuring LLMs on real-world software development dynamics

API Time Warp: LLMs Stuck in the Past?

Revealing how LLMs struggle with deprecated APIs in code completion

AI vs. Traditional Compilers for Code Optimization

Evaluating LLMs as the next frontier in code efficiency

Automated Test Generation with AI Agents

Validating Real-World Bug Fixes Using LLM-Based Code Agents

Boosting Code Generation with External Knowledge

Evaluating the Impact of Retrieval-Augmented Generation (RAG) for Programming Tasks

Beyond Simple Coding: Advancing AI Code Generation

New benchmark reveals capabilities and limitations of LLMs in complex programming tasks

Smart Self-Editing Code Assistants

Efficient, Real-Time Code Prediction During Editing

Keeping AI Code Assistants Up-to-Date

A new benchmark for evaluating how LLMs adapt to API changes

Scaling Code Generation Without Expensive Teachers

A new approach for creating synthetic programming data at scale

Evaluating LLMs for Software Development

A self-evaluating framework for measuring AI performance in coding tasks

Supercharging LLMs for Software Development

How Patched MOA optimizes inference to achieve superior performance

Evolving Better Code Instructions

How Genetic Algorithms Create High-Quality Training Data for LLMs

Measuring the True Difficulty of Code Tasks

A smarter approach to evaluating LLMs on programming challenges

TestART: Evolving LLM-based Unit Testing

Enhancing test quality through automated generation and repair cycles

LLMs: Breaking the Language Barrier in Code Clone Detection

Leveraging Large Language Models to identify similar code across programming languages

COAST: Revolutionizing Code Debugging in LLMs

A multi-agent approach to enhance debugging capabilities in AI coding systems

Combating Dockerfile Flakiness

The first systematic approach to detect and repair unpredictable Docker build failures

LLMs as Software Engineering Evaluators

Using AI to replace human annotation in software engineering research

Anchoring Attention in LLM Code Generation

Combating attention dilution for more accurate code

Evaluating Code Generation LLMs

A comprehensive framework for assessing AI code generation capabilities

LLMs and Buggy Code: Testing Implications

How incorrect code affects test generation by language models

Conversational Agents in Software Engineering

Maximizing the Value of Bots in Development Workflows

AI-Powered Verification for Rust

Automating Correctness Proofs with Large Language Models

Zero-Shot Code Embedding with LLMs

Eliminating the need for supervised training in code analysis

Navigating the Challenges of LLM Open-Source Projects

Identifying key issues, causes, and practical solutions for practitioners

Teaching Code LLMs to Learn from Mistakes

Reinforcement Learning for Self-Improving AI Code Generation

ToolGen: Revolutionizing AI Tool Interaction

Embedding tool knowledge directly into language models

Smart Switching: Code vs. Text Reasoning in LLMs

Optimizing when LLMs should code rather than reason through text

Enhancing AI-Powered Code Repair

Improving robustness of LLM code repair systems through metamorphic testing

Optimizing RAG Systems for Code Generation

Balancing Effectiveness and Efficiency in Retrieval for Coding Tasks

SwiftCoder: Making AI-Generated Code Better & Faster

Fine-tuning LLMs to prioritize both correctness and efficiency in code generation

RepoGraph: Beyond Function-Level Code Generation

Repository-wide context for enhanced AI software engineering

CAMP: Bridging Local & Cloud Development

A hybrid AI coding assistant for constrained environments

Monte Carlo Tree Search for Smarter Software Agents

Enhancing LLM-based coding assistants with strategic decision-making capabilities

Beyond Cool Demos: Production-Ready AI Systems

Bridging the gap between prototype and enterprise-ready foundation model software

FALCON: Optimizing AI Code Generation

Reinforcement learning system that adapts to user feedback in real time

VisualCoder: Guiding Large Language Models in Code Execution...

By Cuong Chi Le, Hoang-Chau Truong-Vinh...

Beyond Line-by-Line Code Translation

A Repository-Level Approach Using Large Language Models

Bridging the Language Gap in Scientific Computing

Using LLMs to modernize legacy code with human oversight

DemoCraft: Smarter Code Generation with LLMs

Enhancing AI coding through in-context learning and latent concept awareness

Understanding LLM Code Generation Failures

Beyond Syntax: Why LLMs Struggle with Context-Dependent Programming

OpenCoder: Democratizing Code AI Development

A transparent, reproducible approach to building top-tier code LLMs

Transforming Chaos into Clarity

Visualizing Software Logs with Time Curves for Enhanced Security Analysis

Self-Correcting Code Generation with LLMs

Enhancing AI code quality through dynamic backtracking

Evaluating LLMs in Software Engineering

A systematic framework for rigorous empirical research

Building LLMs for Production: A Practitioner's Guide

Key considerations for developing enterprise-ready LLM applications

PyGen: AI-Powered Python Package Creation

Automating software development through human-AI collaboration

Open-Source LLMs for Smarter Bug Detection

Flexible Fault Localization Without Proprietary AI Dependencies

Benchmark Contamination in LLMs

Investigating data leakage in bug detection benchmarks

Beyond Function-Level: Repository-Scale Code Translation

Advancing LLMs for real-world Rust translation challenges

Improving Code Retrieval with Quality Data

A Contrastive Approach for Better Software Engineering

LLMs in Qualitative Research: A Software Engineering Revolution

Transforming how we analyze human and social factors in software development

Advancing Verilog Generation with LLMs

A hierarchical dataset approach to improve hardware design coding

Predicting Application Behavior with AI

Using Lightweight Generative Models to Optimize Software Performance

Do Top LLMs Excel in Specialized Coding Domains?

Evaluating code generation across real-world application domains

Order Bias in LLMs for Code Analysis

How input arrangement impacts fault localization accuracy

Modernizing Legacy Code with AI

Using LLMs to Transform Fortran to C++ through Intelligent Dialogue

Open-Source LLMs for Better GitHub Issue Resolution

A more accessible approach to fixing software bugs with AI

Smart LLM Selection for Program Synthesis

Dynamically choosing the optimal prompt and model for coding tasks

Probabilistic Analysis for LLM-Enabled Software

A framework for reliability and verification in AI systems

Smarter API Testing with Small LLMs

Targeted fine-tuning outperforms larger general-purpose models

Revolutionizing REST API Testing with AI

Smarter, more comprehensive API testing through LLMs and reinforcement learning

AI-Developer Collaboration Patterns

A taxonomy of how engineers interact with AI tools in software development

Smart Bug Hunting

Using AI feedback to pinpoint software bugs faster

Energy-Efficient AI Code Generation

Optimizing LLMs for sustainable software development

AI-Powered Chaos Engineering

Automating system resilience testing with LLMs

SkillScope: Matching Engineers to GitHub Issues

AI-driven skill prediction for open source contributions

Beyond Single Functions: Translating Entire Code Repositories

A new framework for evaluating large language models in code migration projects

AI-Powered Bug Fixing: A Collaborative Approach

Enhancing LLMs with programmer intent and collaborative behavior simulation

Smart C++ Test Generation with CITYWALK

Enhancing LLMs for Compiled Language Test Creation

AI-Powered Dependency Resolution

Using Large Language Models to Fix Python Dependency Conflicts

Mind the Gap: LLMs vs. Human Code Understanding

Revealing limitations in AI structural code comprehension

LLM Critics: Smarter Code Evaluation Without Execution

Using AI to assess code changes with unprecedented detail

Human vs. AI Code: A Critical Comparison

Evaluating LLMs against human programmers on real coding tasks

The Practitioner's View on Code-Generating LLMs

Bridging the gap between academic research and developer needs

Making Python Code More Pythonic with AI

Using LLMs to Automatically Refactor Non-Idiomatic Code

Smarter Test Validation with AI

Using LLMs to Generate Accurate Test Oracles

AI-Powered Crash Analysis

Localizing Software Faults with Large Language Models

LLM Program Optimization via Retrieval Augmented Search

By Sagnik Anupam, Alexander Shypula...

Bridging the Gap for Low-Resource Programming Languages

Improving LLMs' code generation capabilities for niche programming languages

Automating Legacy Web App Modernization

Using Multi-Agent LLM Systems to Transform Outdated Applications

LLMs vs. Human Experts in Requirements Engineering

Evaluating AI's potential to transform software requirements gathering

Evaluating LLMs for Real-World Coding Tasks

Beyond single-file solutions: Testing LLMs on multi-file project problems

Precision Bug Hunting with AI

How OrcaLoca enhances software issue localization with LLM agents

Bridging Code and Documentation

A new benchmark dataset for AI-powered software maintenance

How Students Use LLMs in Software Engineering Education

Insights from real-world student-AI interactions in the classroom

LLMs as Verification Partners

Leveraging AI to ensure Java code correctness

Smarter Unit Testing with AI

Training LLMs to generate tests that find and fix code bugs

Toward Neurosymbolic Program Comprehension

By Alejandro Velasco, Aya Garryyeva...

AI Agents for Automated Bug Fixing

Leveraging LLMs to reproduce bugs from incomplete reports at Google

SE Arena: Revolutionizing LLM Evaluation

An interactive platform for assessing AI models in software engineering workflows

Can LLMs Fix Real-World Code Maintenance Issues?

Evaluating Copilot Chat and Llama 3.1 on actual GitHub maintainability problems

AI-Driven ML Library Development

Self-improving LLMs that tackle specialized programming tasks

Automated Architectural Component Generation

Using LLMs to Generate Serverless Functions

Taming Flaky Tests with LLMs

Leveraging AI to detect and classify non-deterministic tests

Stable & Cost-Efficient AI Patching Framework

Balancing Efficiency and Accuracy in Automated Software Repairs

Improving AI Code Review Quality

Enhancing neural models by tackling noisy training data

Beyond Correctness: Benchmarking LLM Code Efficiency

Measuring and improving the time efficiency of AI-generated code

Evaluating LLMs for Test Case Generation

A systematic framework for assessing AI-powered software testing

SLM Ensembles: Smarter, Smaller AI for Code Debugging

Harnessing small language models to efficiently locate software bugs

Self-Debugging AI Code Generation

Two-agent framework improves automated code quality and reliability

AI-Powered Code Review Enhancement

Using LLMs to generate curated, actionable review comments

Efficient Code Summarization for Sustainable AI

Reducing computational costs while maintaining effectiveness

Automating Vehicle API Testing with LLMs

How AI can streamline the complete automotive test process

Automating Microservice Architecture Documentation

Using LLMs to detect patterns in Infrastructure-as-Code

AI-Powered Microservices Migration

Using Contrastive Learning to Revolutionize Software Architecture Transformation

Reimagining Software Agents

Accessing software internals for more accurate and efficient AI assistance

CodeSCM: Causal Analysis for Multi-Modal Code Generation

By Mukur Gupta, Noopur Bhatt...

LLM Agents for Research Deployment

Benchmarking AI assistants for complex code repositories

LLMs as Code Quality Judges

Can AI replace human evaluators in software engineering?

Benchmarking Data Leakage in LLMs

First comprehensive study across 83 software engineering benchmarks

Smarter Code Reviews with AI

Combining LLMs with Static Analysis for More Effective Code Feedback

Supercharging Competitive Programming with AI

How Large Language Models Excel at Olympiad-Level Coding Challenges

LLMs' Understanding of Compiler Code

First empirical study on how well LLMs comprehend Intermediate Representations

Bridging Human Language and Command Line

Enhancing LLM-based translation from natural language to Bash

CodeQUEST: AI-Powered Code Quality Enhancement

Using LLMs to automatically evaluate and improve code across multiple dimensions

Verifying AI-Generated Code

Formal Verification Techniques for LLM Output

Enhancing AI-Generated Code Evaluation

A New Approach to Bridge Code and Requirements

Evaluating AI Coding Assistants

Bringing Human-Centered Design to Automated LLM Evaluation

Proactive Safety Engineering for ML Systems

Using LLMs to support hazard identification and mitigation in ML-powered applications

AI-Powered Code Refinement

Transforming Code Quality Through Intent-Driven Automation

From Sketch to Code: AI-Powered GIS Dashboards

Converting PowerPoint UI sketches to functional web applications using LLMs

Self-Improving Code Generation

How LLMs Can Refine Their Own Code Through Adaptive Critique

Automating Microservice Development with AI

Using LLMs to generate complete RESTful API implementations

LLMs Transform Unit Testing

Enhancing Defect Detection and Developer Efficiency

Rethinking LLM Programming

A Theoretical Framework Based on Automata Theory

Open-Source AI for Python Optimization

Democratizing performance profiling with open-source LLMs

Evolving Code Generation with CoCoEvo

A Novel Framework for Simultaneous Evolution of Programs and Test Cases

LintLLM: Revolutionizing Verilog Code Quality

Leveraging Large Language Models for Smarter Hardware Code Linting

LLMs as Code Executors

Transforming code analysis with LLM-powered surrogate models

ToolCoder: Teaching LLMs to Use Tools Through Code

Transforming tool learning into a structured code generation problem

Code Generation Reimagined

Using Unit Tests to Guide Automated Code Synthesis

Enhancing AI Code Evaluation

Using Monte Carlo Tree Search to improve LLM-based code assessment

AIDE: AI Revolutionizing ML Development

Automating the tedious trial-and-error in machine learning engineering

Enhancing Code Reasoning in LLMs

A new approach to improve how AI models understand and reason through code

Automating API Knowledge Graph Construction

A novel framework for rich, reliable API insights without manual schema design

Automating Docker Configurations with AI

How LLM-based agents can streamline development environments

Trustworthy AI Coding Assistants

Building reliable agentic AI systems for software engineering

Quality Over Quantity in AI Test Generation

How data quality dramatically improves automated unit testing

SolSearch: An LLM-Driven Framework for Efficient SAT-Solving...

By Junjie Sheng, Yanqiu Lin...

Democratizing Application Development

Bridging the Gap Between LLM Code Generation and Deployment

Benchmarking LLMs for GPU Optimization

Evaluating AI's ability to generate efficient Triton code for GPU kernels

Breaking the Text-to-SQL Barrier

A Multi-Module Framework for Enhanced Language-to-Query Conversion

Sol-Ver: Self-Improvement for Code Generation

Teaching AI to Write Better Code Through Self-Play and Verification

Building Better Software Verification

First comprehensive dataset of C++ formal specifications

Benchmarking LLMs for Smarter Code Completion

Evaluating modern AI models for context-aware programming assistance

Revolutionizing API Development with AI

Leveraging LLMs for Faster, More Efficient API Synthesis

Smarter Bug Fixing Through Hierarchical Localization

How LLMs are revolutionizing software debugging workflows

LLMs as Specification Engineers

Leveraging AI to Write Formal Software Specifications

Smarter Code Generation Through Pragmatic Reasoning

How LLMs can better understand ambiguous user instructions

LLMs & Code Review Accountability

How AI is reshaping developer responsibility in code reviews

Smarter Test Assertions with AI

Enhancing software testing through fine-tuned retrieval-augmented language models

Evaluating LLM Code Critique Capabilities

A new benchmark for assessing how well AI can analyze and improve code

Keeping LLMs in Sync with Evolving Code

A novel approach to updating AI models with current programming practices

Speeding Up AI Code Generation

A specialized approach to accelerate LLM inference for coding tasks

Enhancing LLM Reasoning for Software Engineering

Applying Reinforcement Learning to Software Evolution Tasks

AI-Powered Design Pattern Detection

Using Large Language Models to Improve Software Architecture Analysis

AI-Powered Bug Fixing Agents

Automating code debugging with LLMs and LangGraph

Optimizing Code Generation with LLMs

A framework to enhance both efficiency and correctness in AI-generated code

Beyond Tool-Based AI Programming

A New Paradigm for Software Engineering Agents Using Visual Interfaces

Benchmarking LLMs for AI Development

A new standard for evaluating code generation in deep learning workflows

Smarter Commit Messages with AI

Leveraging LLMs and In-Context Learning for Code Documentation

AI-Powered Class Invariant Generation

Using LLMs to Automate Critical Software Specifications

Unlocking LLM Code Generation Capabilities

Separating problem-solving from language-coding skills

Evaluating LLMs' Code Generation Abilities

First benchmark for measuring how well AI follows instructions when writing code

LLMs as Software Architects?

Evaluating AI's Capability to Understand Complex Software Design Patterns

The Future of Software Engineering in the AI Era

Why LLMs won't replace software engineers anytime soon

Consistency in AI Code Reviews

Evaluating how deterministic LLMs are when reviewing code

Automating GUI Design with AI

How LLMs can revolutionize software prototyping

Rethinking LLM Testing

A new taxonomic approach for testing language model software

Enhancing Bug Report Classification with Machine Learning

Improving Development Efficiency Through Automated Classification

Improving Developer Questions with AI

Using LLMs for Named Entity Recognition in Developer Conversations

Democratizing Code Generation with LLMs

Breaking barriers between natural language and programming

Bridging the Gap: Vision-Language Models for Front-End Development

Enhancing AI-powered code generation through targeted data synthesis

LLMs as Code Quality Judges

Automating software evaluation with AI

Engineering the Prompt Layer

Bringing Software Engineering Discipline to LLM Prompt Development

PolyVer: Revolutionizing Multi-Language Software Verification

A compositional approach to verify polyglot software systems

Protecting Software Architecture with AI

Enhancing accuracy in detecting architectural tactics using prompts

AI-Powered Debugging for Hardware Design

Using Large Language Models to Solve RTL Assertion Failures

Rethinking LLMs for Type Inference in Code

Evaluating true capabilities beyond memorization

Enhancing Code Repair with LLMs

Leveraging language models to extract fix ingredients beyond context windows

Testing LLMs' Code Error Prediction

A novel benchmark for evaluating code understanding beyond synthesis

Testing LLM Agent Tools Automatically

Ensuring reliable tool documentation for AI agents

Transforming Software Development with LLMs

How AI is reshaping developers, processes, and products

Testing LLM Prompts: The Missing Quality Assurance Layer

Automated testing for prompt engineering with PromptPex

Smarter AI-Powered Test Generation

Using Static Analysis to Enhance LLM-Generated Unit Tests

Teaching LLMs to Understand Code Execution

Training Models with Dynamic Program Behavior, Not Just Static Code

Decoding Developer Psychology

Using Psycholinguistic Analysis to Understand Software Engineering Teams

Optimizing LLM Code Generation

A Comprehensive Taxonomy of Inefficiencies in AI-Generated Code

Smarter Code Generation with LLMs

Improving code quality through Comparative Prefix-Tuning

Proactive Web Accessibility in Your IDE

LLM-powered VS Code extension for real-time accessibility improvements

Enhancing REST API Testing with LLMs

Leveraging ChatGPT and GitHub Copilot for stronger test automation

Illuminating the Black Box of ML Systems

A novel approach to analyze the semantic flow within ML components

DynaCode: Rethinking Code Generation Benchmarks

A Dynamic Approach to Combat LLM Memorization in Code Evaluation

Team of LLMs: Better Together

Leveraging Multi-Agent Collaboration for GitHub Documentation Summarization

Adapting LLMs to Engineer Problem-Solving Styles

Creating more inclusive AI assistants for software development

LLMs as Bug Replicators

How language models perpetuate coding errors during code completion

Automating Prompt Engineering for Better Code

AI-powered prompt refinement to enhance code generation capabilities

Enhancing Commit Messages with Human Context

Optimizing developer communication through LLM-assisted refinement

Improving Code Understanding with Multi-Agent Debate

How collaborative LLM agents enhance code summarization and translation

AI-Powered Hazard Analysis

Enhancing STPA with Large Language Models

UML Code Generation From Images

Using Multimodal LLMs to Transform Visual Diagrams to Executable Code

Why AI Code Agents Fail at GitHub Issue Resolution

Uncovering the pitfalls in LLM-based software development agents

Modular Prompting Revolution

Breaking the linear chain for better code generation

AI-Powered C to Rust Translation

Enhancing code safety with LLM-driven multi-step translation

Panta: Enhancing Test Generation with LLMs

Combining AI and program analysis for better code coverage

LLMs and Performance Optimization: A Critical Assessment

Evaluating LLMs' effectiveness in optimizing high-performance computing code

Trust in AI: The Double-Edged Sword

Building well-calibrated trust for LLMs in Software Engineering

Synthetic Data Revolution with LLMs

Leveraging AI to create high-quality training data for text and code

Enhancing AI Coding Assistants

A Dynamic Approach to Improve LLM Decision-Making in Coding Tasks

MANTRA: AI-Powered Code Refactoring

Automating method-level refactoring through multi-agent LLM collaboration

EnvBench: Automating Development Environment Setup

A benchmark for evaluating LLM capabilities in software configuration tasks

CodingGenie: AI That Anticipates Developer Needs

Transforming coding workflows with proactive LLM assistance

Community Knowledge Powers Better Code Reasoning

Leveraging Stack Overflow to enhance LLM reasoning capabilities

Conversational LLMs for Code Repair

Understanding the effectiveness and limitations of ChatGPT-based bug fixing

LogiAgent: Smart API Testing with AI

Multi-agent LLM framework detects logical bugs in REST APIs

Enhancing AI Code Generation with Human Feedback

A Bayesian approach to crowd-sourced reinforcement learning for better coding assistants

Supercharging LLMs with API Knowledge

How Retrieval Augmentation Boosts Code Generation Capabilities

Automating Non-Functional Requirements with AI

Using LLMs to enhance software quality from the start

Reinventing AI Code Generation

Integrating Software Engineering Principles into LLMs

Smarter Code Completion with Long-Context LLMs

Training models to effectively utilize repository-wide context

Smarter Code Generation with LLMs

Reducing 'overthinking' to improve efficiency & accuracy

Breaking Down Complex Programming Tasks

How LLMs Can Solve Programming Tasks by Composition

Boosting Code Generation with LLM Ensembles

Using similarity-based selection to improve AI code generation accuracy

PolyTest: Next-Gen Unit Testing with LLMs

Leveraging language diversity and self-consistency for more robust test generation

Bridging the Gap: LLMs and Code Review Comprehension

Evaluating how well AI understands human code feedback

Understanding Programmers' LLM Interactions

How developers use AI coding assistants and what they expect

Making LLMs Write Better Code

Fault-Aware Fine-Tuning for Improved Code Generation Accuracy

Evolving with Rust: LLMs and API Challenges

A dynamic benchmark for evaluating version-aware code generation

The Python Preference of LLMs

LLMs show significant bias in programming language selection

LLMs Revolutionizing Code Analysis

Transforming Software Engineering Through AI-Powered Code Understanding

Safer C to Rust Migration

AI-powered code transformation with memory safety guarantees

Enhancing Code Generation with Documentation

Automating E2E test creation using LLMs and product documentation

Automating Test Case Generation with AI

Using LLMs to bridge business requirements and software testing

Beyond Function-Level: Intelligent Code Translation

Triple Knowledge Augmentation for Repository-Context Translation

Enhancing AI Coding Assistants

How Verbal Process Supervision Creates Better Coding Agents

Benchmarking LLaMA2 for Code Development

Evaluating AI capabilities across programming languages for scientific applications

Boosting CPU Performance with AI

How LLMs transform code for better vectorization

Bridging Natural Language and Code Verification

Using structured reasoning to verify program correctness

Optimizing AI Coding Assistants for Developer Experience

SLA-Driven Architecture for Responsive CodeLLMs

Automating Software Architecture with AI

LLM-powered agents for intelligent architecture design

Enhancing Test Automation with AI

Leveraging LLMs and Case-Based Reasoning for Functional Test Script Generation

Harnessing LLMs for Bug Diagnostics

Using AI to extract failure-inducing inputs from bug reports

Optimizing Code Generation with Smart Retrieval

Identifying what context matters most for AI code assistants

Smarter Tool Use for AI Models

Improving LLM capabilities through code-based process supervision

AI-Powered Code Refactoring

Using LLMs to Automate Method Relocation in Software Engineering

Interactive Debugging for LLMs

Enabling AI to explore and debug code like humans

KGCompass: Smarter Software Repairs

Enhancing Bug Fixes Using Repository-Aware Knowledge Graphs

AI-Powered Automotive Software Validation

Using Reasoning-Enhanced LLM Agents for Precision Release Analytics

Smart Bug Hunting with AI

Using LLMs to efficiently locate software issues across codebases

Evaluating LLMs for Interactive Code Generation

New benchmark reveals how well AI follows instructions when writing code

Testing LLM Robustness for Software Requirements

Evaluating consistency in NFR-aware code generation

AI-Driven Root Cause Analysis

Solving Distributed System Failures with Code-Enhanced LLMs

Testing LLM Reasoning in Code Synthesis

Evaluating how AI agents learn from examples using a novel interactive benchmark

Smart Code Completion with Context

Enhancing LLMs for Complex Data Transfer Tasks

Onboarding Buddy: AI-Powered Developer Onboarding

Revolutionizing software engineering onboarding with LLMs and RAG

LLMigrate: Transforming "Lazy" Large Language Models into Ef...

By Yuchen Liu, Junhao Hu...

Rust Compiler Bugs: Hidden Challenges in Safe Systems

Uncovering unique bug patterns in Rust's safety enforcement mechanisms

Self-Rectifying Code Generation

Enabling Non-Coders to Build Complete Projects with AI

Supercharging Bug Detection with LLMs

Using Large Language Models to improve bug-inducing commit identification

Boosting Automated Program Repair with LLMs

Knowledge Prompt Tuning for Enhanced Bug Fixes with Limited Data

AI Copilot: Revolutionizing Software Testing

Bridging Code Generation and Bug Detection with Context-Based RAG

Unlocking Coding Capabilities in LLMs

Making LLM reasoning more accessible through data distillation

Making LLMs Better at Long Code Translation

Improving accuracy through instrumentation and state alignment

Human Factors in LLM Adoption

Understanding why engineers choose LLMs for specific tasks

Expanding LLM Evaluation Beyond Python

A new multilingual benchmark for code issue resolution

Unlocking Code-Capable AI

How OpenCodeInstruct Advances Code Generation in LLMs

Enhancing LLM Code Generation

Automating Prompt Engineering with Diffusion Models

Evaluating LLMs' Code Comprehension

Measuring how well AI models truly understand code

Dynamic LLM Code Evaluation Reimagined

Beyond Static Benchmarks with Monte Carlo Tree Search

Enhancing API Testing with LLM Intelligence

Overcoming Testing Plateaus with AI-Assisted Mutation

ARLO: Automating Architecture from Requirements

Using LLMs to transform natural language requirements into software architecture

Scaling EDA Testing with Synthetic Verilog

Generating realistic HDL designs to improve tool robustness and LLM training

Enhancing LLMs for Code Repair

New benchmark evaluates how well AI models use feedback to fix bugs

Automating Acceptance Testing with LLMs

Transforming User Stories into Executable Test Scripts

Line-by-Line Code Generation

A novel approach to improve LLM code quality and efficiency

Cross-Domain Code Search Without Retraining

A Novel Contrastive Learning Approach for Zero-Shot Retrieval

Supercharging REST API Testing with AI

How LLM-based systems amplify test coverage and bug detection

Automating Architecture Decisions with AI

Leveraging LLMs to streamline software architecture documentation

AI-Powered System Integration

How LLMs can automate enterprise service composition

Evaluating Code Assistants: Beyond the Hype

A robust methodology for measuring AI coding tool quality

AI-Powered User Story Generation

Automating Requirements for Software Product Lines with LLMs

Benchmarking Coding Assistants Across Languages

A new multi-language benchmark for evaluating AI coding agents in real-world scenarios

DocAgent: Revolutionizing Code Documentation

A multi-agent system that enhances automated documentation quality and accuracy

Smarter Compiler Testing with AI

Using RAG to automate and enhance cross-architecture compiler verification

Smarter Code Generation with Type Constraints

Reducing compilation errors in AI-generated code through type-aware LLMs

Automating Linux Kernel Patch Migration with AI

Using Large Language Models to reduce manual engineering effort

Automating Code Migration with LLMs

How Google scales software evolution using AI

Enhancing LLM Code Generation

Making libraries more accessible to AI through specialized documentation

CodeRAG: Enhancing Real-World Code Generation

A bigraph-based retrieval system for complex programming environments

Multilingual Code Generation Breakthrough

Enhancing programming with structured reasoning across languages

Automating COBOL to Java Validation

AI-powered testing framework for legacy code transformation

Enhancing Software Requirements Quality

A conceptual framework to overcome natural language ambiguity

AI-Powered Engineering Assistance

Enhancing Test Data Analytics with LLM-Based Reasoning

Building a Standard Library for LLMs

Standardizing APIs for Retrieval-Augmented Generation

Swarm Agent: Revolutionizing MLOps

Enhancing Human-Machine Collaboration through Conversational AI